Welcome to a world where cutting-edge technology meets unrivaled graphical prowess. In the realm of gaming and multimedia, the pursuit of the ultimate visual experience has led to the creation of extraordinary masterpieces: the most expensive graphics cards.

These magnificent pieces of engineering redefine the boundaries of performance and elevate gaming to unprecedented heights.

Are you ready to journey into a realm where frame rates soar, textures come alive, and realism transcends imagination?💁

Join us as we delve into the captivating world of the most expensive graphics cards, where technological marvels coalesce with artistic brilliance to ignite your senses like never before.

In this article, we will unravel the enigmatic allure surrounding these remarkable pieces of hardware. From their breathtaking specifications to the unparalleled experiences they deliver, we’ll leave no stone unturned.

Whether you’re an avid gamer, a content creator, or someone with an insatiable appetite for groundbreaking innovation, this exploration is tailored to captivate your interest and quench your thirst for knowledge.

Buckle up and prepare to be astounded as we unveil the epitome of graphical supremacy.

Let’s begin our expedition into the dazzling world of the most expensive graphics cards, where dreams become a reality and possibilities become boundless.

Most Expensive Graphics Cards That Will Break the Bank

1. NVIDIA A100 (Price – Typically $16,250–$16,950)

Introducing the pinnacle of graphics card technology: the NVIDIA Tesla A100 Ampere 40 GB Graphics Card – PCIe 4.0 – Dual Slot.

This extraordinary masterpiece redefines what it means to be at the cutting edge of performance, making it the most expensive graphics card on the market today.

When it comes to unleashing the true potential of your computer, the NVIDIA Tesla A100 stands head and shoulders above the rest.

With its unparalleled power and remarkable capabilities, this graphics card sets new standards for visual excellence and computational performance.

No expense has been spared in creating a product that pushes boundaries, making it the ultimate choice for discerning professionals and enthusiasts.

One of the standout features of the Tesla A100 is its 40 GB of high-bandwidth HBM2 memory. This capacity lets it handle very large datasets, so you can tackle the most demanding workloads and complex simulations.

Whether you’re rendering mind-bogglingly realistic 3D graphics, conducting intricate scientific calculations, or training cutting-edge machine learning models, the Tesla A100 will deliver unprecedented performance, ensuring your projects are completed efficiently.

Moreover, the Tesla A100 uses PCIe 4.0, which doubles the per-lane bandwidth of PCIe 3.0 and allows much faster data transfers than its predecessors.

This means that you can achieve an unprecedented level of responsiveness, minimizing latency and maximizing productivity.

With the Tesla A100, you’ll experience a seamless workflow, allowing you to focus on your creative endeavors without being hindered by hardware constraints.

Beyond its technical prowess, the Tesla A100 is designed with precision and sophistication. Its dual-slot form factor fits standard PCIe slots, but because the card is passively cooled, it needs a server chassis that provides enough airflow.

The meticulous engineering and attention to detail that have gone into its design are a testament to NVIDIA’s commitment to delivering nothing but the best.

By investing in the NVIDIA Tesla A100 Ampere 40 GB Graphics Card, you’re not just acquiring a graphics card but investing in a future where limitless possibilities become a reality.

While it may bear the price tag of the most expensive graphics card on the market, its unparalleled performance, groundbreaking features, and uncompromising quality make it worth every penny.

So, whether you’re a professional looking to revolutionize your workflow or a researcher running large AI and scientific computing workloads, the Tesla A100 is the definitive choice. Keep in mind that it has no display outputs, so it is not designed for gaming.

Step into a realm where imagination knows no bounds, where performance is unmatched, and where the most expensive graphics card on the market sets the standard for excellence.

Specifications:-

Here’s a table outlining the specifications of the NVIDIA Tesla A100 Ampere 40 GB Graphics Card – PCIe 4.0 – Dual Slot:-

| Specification | Details |
|---|---|
| Graphics Processing Unit (GPU) | NVIDIA Ampere Architecture |
| CUDA Cores | 6,912 |
| Memory Size | 40 GB HBM2 |
| Memory Bandwidth | 1,555 GB/s (about 1.6 TB/s) |
| Memory Interface | 5120-bit |
| System Interface | PCIe 4.0 x16 |
| Power Consumption | 250 W |
| Cooling System | Dual-Slot Passive Cooling |
| Form Factor | Dual Slot |
| Maximum Resolution | Not applicable (no display outputs) |
| Display Connectors | None (Designed for Data Center Use) |
| Compute Performance (FP32) | 19.5 TFLOPS |
| Compute Performance (FP64) | 9.7 TFLOPS |
| Tensor Performance (FP16) | 312 TFLOPS |
| Deep Learning Performance (TF32) | 156 TFLOPS |
| NVIDIA CUDA® Cores | Yes |
| NVIDIA Tensor Cores | Yes |
| NVIDIA NVLink™ | Yes (2-way bridge, up to 600 GB/s) |
| DirectX Support | Not applicable (compute-focused card) |
| OpenGL Support | 4.6 |
| Vulkan API Support | Yes |
| Multi-GPU Technology | NVIDIA NVLink™ |
| Supported Operating Systems | Windows, Linux |
| Dimensions | 267 mm x 111.15 mm x 39.9 mm (L x H x W) |

2. NVIDIA Quadro RTX 8000 (Price – Typically $5,706–$8,130)

Are you ready to experience the pinnacle of graphics performance? Look no further than the NVIDIA Quadro RTX 8000, the second most expensive graphics card on the market.

This powerhouse of technological innovation sets new standards for visual excellence and pushes the boundaries of what is possible in computer graphics.

When it comes to rendering lifelike images, creating breathtaking virtual reality experiences, or handling complex simulations with unrivaled precision, the Quadro RTX 8000 reigns supreme.

Equipped with groundbreaking features and unmatched computational power, this graphics card is designed to elevate your creative work, revolutionize your gaming experience, and transform how you perceive graphics performance.

At the heart of the Quadro RTX 8000 lies NVIDIA’s Turing architecture, a technological marvel that delivers real-time ray tracing, artificial intelligence acceleration, and advanced shading capabilities.

This means you can witness stunningly realistic lighting, shadows, and reflections in your favorite games or achieve unparalleled accuracy in your professional workflows.

With the Quadro RTX 8000, you’ll be immersed in a world where virtual reality seamlessly blends with reality itself.

But it’s not just about the jaw-dropping visuals. The Quadro RTX 8000 also offers exceptional computational power, enabling you to tackle the most demanding tasks effortlessly.

Whether you’re engaged in deep learning, scientific research, or architectural design, this graphics card will empower you to achieve breakthrough results with its 48GB of ultra-fast GDDR6 memory and 72 Turing RT cores.

Furthermore, the Quadro RTX 8000 supports NVIDIA NVLink, which lets you link two cards for even greater performance and a combined 96 GB of memory.

With NVLink’s scalability and unmatched bandwidth, you can unlock the full potential of your creative endeavors and witness unparalleled productivity gains.

Investing in the Quadro RTX 8000 means investing in your future. It’s not just a graphics card; it’s a gateway to infinite possibilities.

Whether you’re an architecture, engineering, or film production professional or a passionate gamer who demands the best, this graphics card will elevate your work and play to unimaginable heights.

Don’t settle for anything less than the most expensive graphics card. Unleash the full potential of your creativity, and let the NVIDIA Quadro RTX 8000 redefine your expectations.

Embrace the pinnacle of graphics performance and witness a new era of visual excellence.

Specifications:-

Here’s a table describing the specifications of the NVIDIA Quadro RTX 8000:-

| Specification | Details |
|---|---|
| GPU Architecture | Turing |
| CUDA Cores | 4608 |
| Tensor Cores | 576 |
| RT Cores | 72 |
| GPU Memory | 48 GB GDDR6 |
| Memory Interface | 384-bit |
| Memory Bandwidth | 672 GB/s |
| Max Power Consumption | 295 W |
| Maximum Displays | 4 |
| Display Connectors | DP 1.4 (4), VirtualLink (1) |
| Max Resolution | 7680 x 4320 @ 60Hz |
| DirectX Support | 12.1 |
| OpenGL Support | 4.6 |
| Vulkan Support | Yes |
| NVIDIA NVLink Support | Yes |
| PCI Express Interface | Gen 3 x16 |
| Form Factor | Dual-Slot, Full-Height |
| Cooling Solution | Active |
| Recommended Power Supply | 650 W |
| Supported Technologies | VR Ready, G-SYNC, HDCP 2.2, 3D Vision, Ansel, NVIDIA GPU Boost, NVIDIA NVLink, NVIDIA SLI, Vulkan API, DirectX 12, OpenGL 4.6 |

3. NVIDIA Titan RTX

The NVIDIA Titan RTX is one of the most popular cards in today’s high-end graphics market.

This high-performance card deserves all the attention it gets, and it would take considerable innovation to beat its output.

Its specifications are unusual and hard to find, especially among mainstream products.

Firstly, the card has a well-crafted aluminum casing that gives it a special appeal. Despite the metal construction, the casing is relatively light and does not add much weight to the card.

While it is an excellent card for serious gamers, it seems to have been developed primarily for other purposes like content creation and data science. Whatever you plan to do with it, it is a versatile graphics card that suits most purposes.

The card is known for its remarkable speed, NVIDIA’s Turing architecture, and a massive 24 GB of GDDR6 memory.

It has a high memory bandwidth of about 672 GB per second and high clock rates: its memory runs at about 7,000 MHz, which corresponds to an effective 14 Gbps per pin. As a result, it can run even the most demanding games smoothly.
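The Titan RTX’s memory figures are linked by simple arithmetic: peak bandwidth equals the per-pin data rate multiplied by the bus width, divided by 8 bits per byte. The Python sketch below assumes the common convention that the quoted 7,000 MHz memory clock is doubled to give the 14 Gbps effective rate listed in the specification table, and it takes the 384-bit interface from that table. The function names are hypothetical helpers for illustration, not part of any NVIDIA tool.

```python
# Peak memory bandwidth = per-pin data rate (Gbps) x bus width (bits) / 8 bits per byte.
# Assumption: the quoted memory clock in MHz is doubled to get the effective
# per-pin rate, which is the usual convention for GDDR6 figures.

def effective_rate_gbps(memory_clock_mhz: float, transfers_per_clock: int = 2) -> float:
    """Convert a reported memory clock in MHz to a per-pin data rate in Gbps."""
    return memory_clock_mhz * transfers_per_clock / 1000


def peak_bandwidth_gb_s(rate_gbps: float, bus_width_bits: int) -> float:
    """Return the theoretical peak bandwidth in GB/s."""
    return rate_gbps * bus_width_bits / 8


titan_rtx_rate = effective_rate_gbps(7000)  # 7,000 MHz doubled -> 14 Gbps per pin
titan_rtx_bw = peak_bandwidth_gb_s(titan_rtx_rate, 384)
print(f"Titan RTX: {titan_rtx_rate:.1f} Gbps x 384-bit -> {titan_rtx_bw:.0f} GB/s")
```

The same helper reproduces other figures in this article: `peak_bandwidth_gb_s(14.0, 352)` gives the RTX 2080 Ti’s 616 GB/s, and `peak_bandwidth_gb_s(19.5, 384)` gives the RTX 3090’s 936 GB/s. These are theoretical peaks; real-world throughput is lower.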

Another impressive feature of the Titan RTX is its cooler, which uses 13-blade fans. With this design, you don’t have to worry about the card overheating.

The fans quickly carry heat away from the card’s components, and a vapor chamber helps by spreading the built-up heat evenly.

Other important components of the Titan RTX include its 13-phase power supply and its connectivity ports, such as HDMI and USB Type-C.

Specifications:-

Here’s a table showcasing the specifications of the NVIDIA Titan RTX graphics card:-

| Specification | Description |
|---|---|
| Architecture | Turing |
| GPU | NVIDIA Titan RTX |
| CUDA Cores | 4,608 |
| Tensor Cores | 576 |
| RT Cores | 72 |
| GPU Boost Clock | 1,770 MHz |
| Memory | 24 GB GDDR6 |
| Memory Speed | 14 Gbps |
| Memory Interface | 384-bit |
| Memory Bandwidth | 672 GB/s |
| Max Resolution | 7680×4320 @ 60Hz |
| Display Connectors | 3x DisplayPort 1.4, 1x HDMI 2.0b, 1x VirtualLink (USB Type-C) |
| Power Connectors | 2x 8-pin |
| TDP (Thermal Design Power) | 280W |
| Recommended Power Supply | 650W |
| Dimensions | 267 mm (length) x 111 mm (height) |
| Cooling | Dual-Fan |
| DirectX Support | DirectX 12 Ultimate |
| OpenGL Support | 4.6 |
| Vulkan API Support | Yes |
| NVIDIA CUDA Support | Yes |
| NVIDIA NVLink Support | Yes |
| VR Ready | Yes |
| SLI Support | Yes |
| Operating System Compatibility | Windows 10, Linux |
| Warranty | 3 years |

Pros:-

- High performance and speed

- It is a versatile graphics card suitable for most types of graphics computing and display

- It has various connectivity ports

- The 13-blade fan cools the card and prevents overheating

Cons:-

- The card as a whole is fairly heavy and bulky

4. NVIDIA TITAN V

The NVIDIA Titan V has a suggested retail price of $2,999, which is actually higher than the Titan RTX’s $2,499 launch price. The retailer and the card’s availability, however, affect what you actually pay.

NVIDIA created the Titan V as a high-end graphics card for professionals and enthusiasts who want the finest performance and features.

It is driven by the cutting-edge NVIDIA Volta architecture, which offers a notable performance boost over graphics cards from earlier generations.

The Titan V can handle even the most demanding workloads, such as 3D rendering, scientific simulations, and machine learning, thanks to its 12 GB of HBM2 memory. It also boasts a massive 5120 CUDA core count, which enables it to handle challenging graphics and computation jobs easily.

The Titan V has various cutting-edge features that make it perfect for professional use in addition to its amazing performance.

For instance, it supports ECC memory, which helps ensure the quality and integrity of data, and it includes 640 Tensor Cores designed specifically to accelerate machine learning workloads.

The NVIDIA Titan V is a superb graphics card that provides unmatched performance and capabilities for professionals and hobbyists who require the best graphics processing.

The Titan V is something to consider if you’re looking for a top-tier graphics card that can handle any workload you throw at it.

Specifications:-

Here’s a table highlighting the specifications of the NVIDIA TITAN V graphics card:-

| Specification | Details |
|---|---|
| GPU Architecture | Volta |
| CUDA Cores | 5120 |
| Tensor Cores | 640 |
| Core Clock | 1200 MHz |
| Boost Clock | 1455 MHz |
| Memory | 12 GB HBM2 |
| Memory Bandwidth | 653 GB/s |
| Memory Interface | 3072-bit |
| Memory Speed | 1.7 Gbps |
| TDP (Thermal Design Power) | 250 W |
| DirectX Support | 12.1 |
| OpenGL Support | 4.6 |
| Vulkan Support | Yes |
| Max Resolution | 7680 x 4320 |
| Max Monitors Supported | 4 |
| VR Ready | Yes |
| Dimensions | 4.4″ (H) x 10.5″ (L) |
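The Titan V reaches a bandwidth similar to the Titan RTX by a different route: HBM2 uses a very wide 3072-bit interface with a slow 1.7 Gbps per-pin rate, while GDDR6 pairs a narrow bus with fast pins. The short Python sketch below applies the standard peak-bandwidth formula (per-pin rate × bus width ÷ 8) to the values listed in the Titan V and Titan RTX specification tables; the helper name is a hypothetical one chosen for illustration.

```python
# Peak bandwidth = per-pin data rate (Gbps) x interface width (bits) / 8 bits per byte.
# The rates and widths below are copied from the specification tables in this article.

def peak_bandwidth_gb_s(rate_gbps: float, bus_width_bits: int) -> float:
    """Return the theoretical peak memory bandwidth in GB/s."""
    return rate_gbps * bus_width_bits / 8


cards = {
    # name: (per-pin data rate in Gbps, memory interface width in bits)
    "Titan V (HBM2)": (1.7, 3072),
    "Titan RTX (GDDR6)": (14.0, 384),
}

for name, (rate, width) in cards.items():
    bandwidth = peak_bandwidth_gb_s(rate, width)
    print(f"{name}: {width}-bit at {rate} Gbps per pin = {bandwidth:.1f} GB/s")
```

For the Titan V this works out to 652.8 GB/s, which the table rounds to 653 GB/s.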

5. NVIDIA GeForce RTX 3090 Founders Edition Graphics Card

One of the more expensive options on the market might be the NVIDIA GeForce RTX 3090 Founders Edition graphics card.

The manufacturer, model, and particular features and capabilities are only a few variables that can significantly impact the pricing of graphics cards.

Generally, graphics cards with more performance and modern technologies are more expensive.

With top-tier features like its Ampere architecture and 24GB of GDDR6X memory, the NVIDIA GeForce RTX 3090 Founders Edition is a high-end graphics card, which would explain why it costs more.

Before purchasing, it is wise to research and evaluate offers from various merchants. This can assist you in locating the finest offer and guarantee that you receive the best value for your money.

Specifications:-

Here’s a table outlining the specifications of the NVIDIA GeForce RTX 3090:-

| Specification | Details |
|---|---|
| Architecture | Ampere |
| CUDA Cores | 10496 |
| Boost Clock | 1.70 GHz |
| Memory | 24 GB GDDR6X |
| Memory Speed | 19.5 Gbps |
| Memory Interface | 384-bit |
| Memory Bandwidth | 936 GB/s |
| RT Cores | 82 |
| Tensor Cores | 328 |
| TDP (Power) | 350W |
| PCIe Interface | PCIe 4.0 |
| Display Outputs | 3x DisplayPort 1.4a, 1x HDMI 2.1 |
| Maximum Resolution | 7680×4320 |
| Recommended PSU | 750W |
| Dimensions | 12.3″ x 5.4″ x 3-Slot |
| Release Date | September 2020 |

6. XFX Speedster MERC319 AMD Radeon RX 6900 XT

Do you need a powerful graphics card to play games or create content? The XFX Speedster MERC319 AMD Radeon RX 6900 XT is one of the strongest options available.

This robust graphics card has capabilities that will boost your computer’s performance to new heights.

The XFX Speedster MERC319 AMD Radeon RX 6900 XT can deliver fluid gameplay even at high resolutions and settings thanks to its AMD RDNA 2 architecture and 16GB of GDDR6 RAM.

This graphics card can handle any task you throw at it, including editing high-resolution videos, rendering 3D graphics, and playing the newest games.

In addition to its impressive specifications, the XFX Speedster MERC319 AMD Radeon RX 6900 XT offers a USB-C port, HDMI 2.1, DisplayPort, and other outputs for easy connection to a variety of monitors.

With this graphics card, your favorite games and videos are displayed in breathtaking detail.

Don’t just believe what we say, though. Check out the many favorable evaluations and awards that users and reviewers have given this graphics card.

You’ll receive one of the top graphics cards on the market with the XFX Speedster MERC319 AMD Radeon RX 6900 XT.

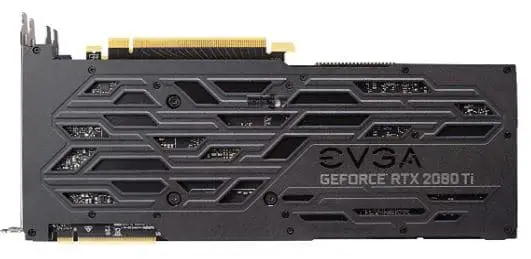

7. EVGA GeForce RTX 2080Ti

When it comes to manufacturing computer hardware that stands the test of time, EVGA has always been one of the best.

Hence, the performance and durability of this graphics card come as no great surprise.

You’ll see that this card’s features and functions have been carefully designed to meet the requirements of even the most graphically demanding games.

It is also one of the most versatile cards on our list, so it works well for other demanding graphics processing and rendering tasks besides gaming.

This product’s performance and output clearly show the manufacturer’s ingenuity.

The EVGA GeForce RTX 2080Ti is built on NVIDIA’s Turing™ architecture, which delivers speed and realism without compromising power efficiency.

The card has a boost clock of about 1545 MHz and 11 GB of GDDR6 memory.

It uses a standard PCIe 3.0 x16 interface, so it is compatible with a wide range of desktop systems and can be moved between machines as needed.

The EVGA GeForce RTX 2080Ti has a quiet cooling system. Its HDB (hydraulic dynamic bearing) fans cool the GPU without the noise typical of even some of the best cards.

Another notable feature of this model is its RGB LED lighting, which you can adjust to your preferences.

Like other RTX cards, it supports hardware-accelerated ray tracing for more realistic game graphics.

Pros:-

- Good overclocking abilities

- Compatible with a wider range of devices

- A quiet, effective cooling system

- RGB LED lighting that is adjustable by the user

Cons:-

- The card’s design is relatively weaker than that of some rivals

8. ASUS ROG STRIX GeForce RTX 2080TI

This GPU has what it takes to run the heaviest games at 4K resolution, which makes gameplay more immersive.

This graphics card has a striking, rugged look that hints at how hard you can push it.

The ROG STRIX GeForce RTX 2080Ti handles demanding image processing and rendering quickly, and you may start wishing you had bought it sooner.

It is built around the RTX 2080 Ti GPU, which is a plus on its own, while its additional features make it even better.

Part of its performance comes from the NVIDIA Turing™ architecture, which NVIDIA promoted as up to six times faster than previous GPU generations in certain workloads.

Its DirectX 12 support, meanwhile, helps it produce consistently lifelike images.

To improve cooling, the fan hubs are smaller than in earlier models.

This leaves room for longer blades that move cooling air through the card more effectively. An anti-dust design also keeps dust out of the fans’ internals.

Note that the ASUS ROG STRIX 2080Ti has 11 GB of GDDR6 memory and can drive up to four monitors, with DisplayPort 1.4 among its outputs. It also has RGB lighting and several connectivity ports.

Pros:-

- The graphics card runs quietly

- 4k resolution is a huge plus for some categories of users

- Good cooling system with an anti-dust module

- The graphics card is still one of the most energy-efficient at this time

Cons:-

- The RGB lighting implementation is somewhat dated

9. NVIDIA GEFORCE RTX 2080 Ti Founders Edition

This was one of the first NVIDIA products released with the Turing™ architecture. You’ll love almost everything about this model, as it is among the most sophisticated cards you can buy.

The GPU has 11 GB of GDDR6 memory for smooth, uninterrupted gaming sessions.

Its maximum digital resolution of 7680×4320 is also impressive. The NVIDIA GeForce RTX 2080Ti Founders Edition lets you multitask comfortably on your desktop, and it is one of the most reliable and durable cards available.

The overclocking headroom on this card is superb, thanks to its 13-phase power supply.

In addition, a vapor chamber and 13-blade fans keep the unit consistently cool, so overheating is rarely a concern.

Other important characteristics include its 1635 MHz boost clock and 616 GB per second memory bandwidth.

You can pair it with a second card using an NVIDIA NVLink SLI bridge. The RTX 2080Ti Founders Edition is remarkably quiet in use, so it is still one of your best options if you need a graphics card that doesn’t make much noise.

The card’s connectors include DisplayPort, HDMI, and USB Type-C ports. It weighs about 4.5 pounds and has a 352-bit memory interface with an effective memory speed of 14,000 MHz (14 Gbps).

Many users consider it one of NVIDIA’s most innovative GPUs, and we’re confident it is not a waste of money if you decide to buy this giant of a GPU.

Pros:-

- Excellent performance and speed

- It has a remarkable build and quality designs that cannot go unnoticed

- Great overclocking abilities with a cooling system that is up to the task

- Allows 4k resolution gaming

Cons:-

- It cannot run every game at 60 frames per second

10. MSI GeForce RTX 2070 Graphics Card

This gaming graphics card may not have as many features as other products on this list, but it still competes well with the best.

The design is appealing, and you can’t help but love how it handles graphics. It’s one of those cards that works for both casual and professional graphics rendering.

This card is powered by NVIDIA’s Turing™ GPU architecture, which gives you all the benefits of that generation’s innovations. DirectX 12 support also contributes to the speed and smoothness that every gamer enjoys.

The graphics card uses 8 GB of GDDR6 memory on a 256-bit interface. The core clock rate is 1706 MHz, which is plenty for the most common games.

Another feature that enhances its overall output is NVIDIA GameWorks™, which supports high-quality imaging and smooth gameplay. It also lets you capture 360-degree images. The MSI GeForce RTX 2070 supports 4K imaging and is VR-ready.

The card has three DisplayPort outputs, one HDMI port, and a dual-link DVI-D connector.

Pros:-

- The graphics card performs well and effectively handles the most common games.

- It has an appealing build and design.

- It comes with NVIDIA’s Turing GPU architecture and all its benefits.

- It supports 4k resolution and imaging

Cons:-

- It may not be the best graphics card for some high-end graphics processing and image rendering.

11. EVGA GeForce GTX 1080 FTW Graphics Card

This is another EVGA graphics card that has taken the GPU market by surprise. The card specifically aims to improve people’s experience with video cards.

The card is built on NVIDIA’s Pascal™ architecture, which brings an exciting and immersive gaming experience.

It has a great design, with parts made of relatively durable materials, which has helped boost the model’s sales.

The card has 11 GB of GDDR5X memory, with base and boost clocks of 1556 MHz and 1670 MHz, respectively. Although its bandwidth is not as high as on other top graphics cards, it still offers a good 484.4 GB per second.

One of its most important features is its efficient ACX 3.0 cooling design, which includes smaller but faster fan blades and double ball bearings.

Together, these features reduce the card’s energy needs while improving the durability of its parts.

The EVGA GTX FTW also directs airflow optimally between its parts, keeping all the components within the card stable. Its nine EVGA iCX sensors help control this process.

It supports a maximum digital resolution of 7680×4320. You’ll also find its secondary BIOS useful, as it lets you experiment with a customized BIOS without the risk of damaging the card.

Pros:-

- The graphics card offers users real value for money

- It has a remarkable performance that existing users love

- A more efficient cooling system, thanks to the nine iCX sensors

- It provides a higher graphics resolution display.

Cons:-

- Its bandwidth is not the highest you can get on the best graphics card

12. NVIDIA QUADRO P6000 Graphics Card

Although many other top graphics cards are used for gaming and game development, this card is designed specifically to meet the requirements of an all-purpose professional graphics card.

It does not disappoint, either, as it reliably provides all the graphics processing power you need.

The graphics card is designed for people with heavy graphics processing and rendering needs, so consider carefully whether you need to invest in such a high-value machine.

However, if you decide to buy this product, you’re in for something remarkable.

The graphics card is built on the NVIDIA Pascal™ architecture. It has a massive 24 GB of GDDR5X memory, which handles demanding tasks with ease.

It is one of the few graphics cards on the market that significantly improves graphics visualization. Its output is more realistic, giving users a more immersive and pleasant experience.

It has four DisplayPort outputs and can support up to four monitors at a time. In addition, there is a DVI-D connector and a stereo connector. The card’s base and boost clocks are 1,417 MHz and 1,530 MHz, respectively.

Its peak memory bandwidth is 432 GB per second, and it lets you enjoy your games at 60 fps without fuss.

Pros:-

- Top-notch performance that makes it worth the money

- Relatively lightweight and portable graphics card

- Provides high frame rates in most games

- It has a beautiful and durable design

Cons:-

- It is expensive

13. ASUS GTX Titan Z Graphics Card

This is another great performer on our honorable mentions list. The ASUS GTX Titan Z combines aesthetic appeal and remarkable functionality in one box.

Despite packing two GPUs, it installs in a single PCIe x16 slot like any other desktop graphics card, although its cooler occupies three slots.

The card has 12 GB of GDDR5 memory and two GPUs mounted on a single PCB, making it one of the fastest GDDR5-based graphics cards.

Note that some prospective users have raised concerns about the heat generated by having two GPUs on board.

However, the card uses a three-chamber cooling design that handles the heat efficiently. By design, each GPU also runs at a relatively low base clock of 705 MHz.

By most standards, only a few graphics cards come close to competing with it.

Another strength of this card is that it can handle any game you throw at it. It is also suited to demanding workloads such as scientific computing, high-end engineering, and machine learning.

Lastly, it also comes with DVI and HDMI connectivity ports.

Pros:-

- Versatility at its best; the graphics card works for all graphics processing

- It comes with two processing units which facilitate its high performance

- An enormous cooling chamber that effectively removes the built-up heat

- Made of strong, durable parts

Cons:-

- The graphics card weighs about 6 pounds, which is relatively heavy.

Most Expensive Graphics Card For Gaming

Here’s a table showcasing some of the most expensive graphics cards for gaming:-

| Graphics Card Model | Manufacturer | Price (USD) |
|---|---|---|
| NVIDIA GeForce RTX 3090 | NVIDIA | $1,499 |
| NVIDIA Titan RTX | NVIDIA | $2,499 |
| NVIDIA GeForce RTX 3080 Ti | NVIDIA | $1,199 |
| AMD Radeon RX 6900 XT | AMD | $999 |
| ASUS ROG Strix GeForce RTX 3090 | ASUS | $2,199 |
| MSI GeForce RTX 3090 Gaming X Trio | MSI | $1,999 |
| EVGA GeForce RTX 3090 FTW3 Ultra Gaming | EVGA | $1,999 |
| Gigabyte AORUS GeForce RTX 3080 Xtreme | Gigabyte | $1,799 |
| Sapphire Radeon RX 6900 XT Nitro+ SE | Sapphire | $1,599 |
| ZOTAC Gaming GeForce RTX 3080 Trinity | ZOTAC | $1,199 |

Nvidia’s most expensive graphics card

Here’s a table showcasing some of Nvidia’s most expensive graphics cards:-

| Graphics Card | Release Year | Memory | Memory Type | CUDA Cores | Boost Clock | Price (USD) |
|---|---|---|---|---|---|---|
| NVIDIA Quadro RTX 8000 | 2018 | 48 GB | GDDR6 | 4,608 | 1,770 MHz | Typically $5,706–$8,130 |
| Nvidia Titan RTX | 2018 | 24 GB | GDDR6 | 4,608 | 1,770 MHz | $2,499 |
| Nvidia GeForce RTX 3090 | 2020 | 24 GB | GDDR6X | 10,496 | 1,695 MHz | $1,499 |
| Nvidia GeForce RTX 3080 | 2020 | 10 GB | GDDR6X | 8,704 | 1,710 MHz | $699 |
| Nvidia GeForce RTX 3070 | 2020 | 8 GB | GDDR6 | 5,888 | 1,725 MHz | $499 |
| Nvidia GeForce RTX 3060 | 2021 | 12 GB | GDDR6 | 3,584 | 1,780 MHz | $329 |
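One rough way to compare the cards in this table is a theoretical peak FP32 figure. The Python sketch below assumes the common estimate that each CUDA core completes one fused multiply-add (2 floating-point operations) per clock at the listed boost clock, and it uses only the core counts and boost clocks from the table. Treat the results as upper bounds: each Ampere (RTX 30 series) SM counts twice as many FP32 units as CUDA cores as a Turing SM does, but half of them also handle integer work, so these numbers tend to overstate Ampere’s lead in real games. The `Card` type and `fp32_tflops` helper are hypothetical names used for illustration.

```python
from typing import NamedTuple


class Card(NamedTuple):
    name: str
    cuda_cores: int
    boost_mhz: int


# Core counts and boost clocks copied from the table above.
CARDS = [
    Card("NVIDIA Quadro RTX 8000", 4608, 1770),
    Card("Nvidia Titan RTX", 4608, 1770),
    Card("Nvidia GeForce RTX 3090", 10496, 1695),
    Card("Nvidia GeForce RTX 3080", 8704, 1710),
    Card("Nvidia GeForce RTX 3070", 5888, 1725),
    Card("Nvidia GeForce RTX 3060", 3584, 1780),
]


def fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming 2 FLOPs (one FMA) per CUDA core per clock."""
    flops_per_second = cuda_cores * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


# Rank from highest to lowest theoretical throughput (ties keep table order).
ranked = sorted(CARDS, key=lambda c: fp32_tflops(c.cuda_cores, c.boost_mhz), reverse=True)

for card in ranked:
    print(f"{card.name:<26} {fp32_tflops(card.cuda_cores, card.boost_mhz):5.1f} TFLOPS")
```

By this estimate the RTX 3090 comes out at roughly 35.6 TFLOPS and the Titan RTX at roughly 16.3 TFLOPS, so the higher-priced card in this table is not necessarily the one with the higher theoretical throughput.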

Update:- The NVIDIA Tesla A100 now ranks at the top of this list.

📚 FAQs

Which is the most expensive graphics card?

The most expensive graphics card currently available is the NVIDIA Tesla A100 (Price – Typically $16,250–$16,950), renowned for its cutting-edge performance and advanced features.

Is GTX or RTX more expensive?

Generally, RTX graphics cards are more expensive than their GTX counterparts. This is primarily due to the inclusion of real-time ray tracing technology and other advanced features in the RTX series.

Is GTX better than RTX?

The comparison between GTX and RTX graphics cards depends on specific requirements.

While GTX cards are generally more affordable, RTX cards offer additional features like real-time ray tracing and DLSS, which can significantly enhance graphics quality and performance in supported games.


Here’s a simple table comparing GTX and RTX graphics cards:-

| Features | GTX Series | RTX Series |
|---|---|---|
| Architecture | Pascal, Turing (GTX 16 series), and earlier | Turing, Ampere |
| Ray Tracing | No dedicated ray tracing hardware (limited software support on some models) | Dedicated ray tracing cores |
| Tensor Cores | Absent | Dedicated tensor cores for AI-based tasks |
| Performance | Excellent gaming performance | Excellent gaming performance, enhanced by ray tracing and AI |
| DLSS (Deep Learning Super Sampling) | Not available | DLSS 2.0 and newer versions for improved image quality |
| VR Performance | Good VR performance | Enhanced VR performance with features like Variable Rate Shading (VRS) |
| Price Range | Generally more affordable compared to RTX models | Generally more expensive due to added features and capabilities |
| Gaming Future-proofing | May lack certain advanced features | Supports newer technologies like ray tracing and DLSS for future games |
| Professional Workloads | Limited support for professional applications | Enhanced support for professional applications, such as real-time rendering |
| Power Consumption | Generally lower power consumption | May have higher power requirements due to advanced features |

Is RTX or GTX cheaper?

In general, GTX graphics cards are more affordable than their RTX counterparts. However, it’s important to consider factors such as model, specifications, and market fluctuations that can influence the pricing of individual graphics cards.

Can GTX graphics cards do ray tracing?

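Not in hardware. GTX graphics cards have no dedicated hardware for real-time ray tracing. NVIDIA’s drivers do enable DirectX Raytracing in software on some GTX 10- and 16-series cards, but performance is far lower. Among NVIDIA GPUs, only the RTX series has specialized RT (Ray Tracing) cores that make ray tracing calculations efficient and improve visual effects in compatible games.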

What does RTX stand for?

RTX is a brand name rather than an official acronym: NVIDIA has not published an expansion for it, although the “RT” is widely read as a reference to ray tracing. NVIDIA introduced the RTX branding to emphasize the cards’ ability to accelerate real-time ray tracing, a rendering technique that creates more realistic lighting and reflections in computer graphics.

Is the RTX 3050 better than the GTX 1660 Ti?

The RTX 3050 offers a more modern feature set than the GTX 1660 Ti. It is based on the newer Ampere architecture and adds hardware ray tracing and DLSS, which the GTX 1660 Ti lacks. In traditional (non-ray-traced) games, however, the two cards are broadly comparable, depending on the variant.

Here’s a comparison table highlighting the key differences between the RTX 3050 and the GTX 1660 Ti graphics cards (the RTX 3050 figures are for the laptop version; the desktop RTX 3050 has 8 GB of GDDR6 and 2,560 CUDA cores):-

| Specification | RTX 3050 (Laptop) | GTX 1660 Ti (Desktop) |
|---|---|---|
| Architecture | Ampere | Turing |
| CUDA Cores | 2048 | 1536 |
| Tensor Cores | Yes | No |
| Ray Tracing Cores | Yes | No |
| Base Clock | Varies by laptop | 1500 MHz |
| Boost Clock | Varies by laptop | 1770 MHz |
| VRAM | 4 GB GDDR6 | 6 GB GDDR6 |
| Memory Speed | 12 Gbps | 12 Gbps |
| Memory Interface | 128-bit | 192-bit |
| Memory Bandwidth | 192 GB/s | 288 GB/s |
| TDP | Varies by laptop | 120W |
| Recommended PSU | Not applicable (laptop GPU) | 450W |
| DirectX Version | DirectX 12 Ultimate (feature level 12_2) | DirectX 12 (feature level 12_1) |
| OpenGL Version | 4.6 | 4.6 |
| Price | Varies depending on manufacturer and model | Varies depending on manufacturer and model |
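The Memory Bandwidth row follows from the Memory Speed and Memory Interface rows. As a rule of thumb for peak bandwidth, multiply the per-pin data rate (in Gbps) by the bus width (in bits) and divide by 8 bits per byte. The minimal Python sketch below applies that arithmetic to the two cards in the table, plus the RTX 3090 (19.5 Gbps GDDR6X on a 384-bit bus) for reference. The function name `memory_bandwidth_gbs` is our own, chosen for illustration, and real-world throughput is lower than this theoretical peak.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    data_rate_gbps: effective data rate per memory pin, in gigabits per second.
    bus_width_bits: width of the memory interface, in bits.
    Dividing by 8 converts gigabits to gigabytes.
    """
    return data_rate_gbps * bus_width_bits / 8


# (data rate in Gbps, bus width in bits) for each card.
cards = {
    "RTX 3050": (12, 128),
    "GTX 1660 Ti": (12, 192),
    "RTX 3090": (19.5, 384),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(rate, width):.0f} GB/s")
# prints:
# RTX 3050: 192 GB/s
# GTX 1660 Ti: 288 GB/s
# RTX 3090: 936 GB/s
```

The results match the 192 GB/s and 288 GB/s in the table, which is why the GTX 1660 Ti’s wider 192-bit bus gives it more bandwidth even though both cards use 12 Gbps memory.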

Is the RTX 4090 overpriced?

The perception of whether the RTX 4090 is overpriced can vary based on individual opinions and budget considerations.

It is essential to assess the card’s performance, features, and pricing compared to alternative options available in the market before making a purchase decision.

The table below outlines various aspects of whether the RTX 4090 is overpriced:-

| Aspect | Argument that it is overpriced | Argument that the price is justified |
|---|---|---|
| Price | The RTX 4090 is significantly more expensive than other high-end GPUs on the market, which makes it overpriced. | The RTX 4090 offers unmatched performance and features, which justifies its higher price point. |
| Performance | Its large performance lead over the RTX 3090 shows mostly at 4K and in heavy workloads; at lower resolutions the CPU often limits frame rates, so buyers may pay for performance they cannot use. | At launch, the RTX 4090 offered the most powerful gaming and workstation performance of any consumer GPU, which justifies its higher price point. |
| Availability | The limited availability of the RTX 4090 at launch and ongoing supply constraints make it overpriced due to the inflated prices in the secondary market. | The high demand and limited supply of the RTX 4090 indicate that market forces justify its current price. |
| Use case | The RTX 4090 offers more performance and features than necessary for most users, making it overpriced for their needs. | For users with specific use cases such as deep learning, AI, and high-end gaming, the RTX 4090 offers unparalleled performance and value, which justifies its higher price point. |

Is the RTX 4090 better than the 3090?

The RTX 4090 is built on NVIDIA’s newer Ada Lovelace architecture and has far more CUDA, Tensor, and RT cores than the RTX 3090, along with much higher clock speeds. Memory capacity and bus width are the same (24 GB on a 384-bit bus), but the RTX 4090 has a higher TDP (450W versus 350W).

While the RTX 4090 is considerably faster, it also launched at a higher price: $1,599, compared with $1,499 for the RTX 3090.

Here’s a table comparing the key specifications of the NVIDIA GeForce RTX 4090 and the RTX 3090 graphics cards:-

| Specification | RTX 4090 | RTX 3090 |
|---|---|---|
| Architecture | Ada Lovelace | Ampere |
| Process node | TSMC 4N | Samsung 8nm |
| CUDA cores | 16384 | 10496 |
| Tensor cores | 512 | 328 |
| RT cores | 128 | 82 |
| Base clock | 2235 MHz | 1395 MHz |
| Boost clock | 2520 MHz | 1695 MHz |
| Memory capacity | 24 GB GDDR6X | 24 GB GDDR6X |
| Memory bus width | 384-bit | 384-bit |
| Memory bandwidth | 1008 GB/s | 936 GB/s |
| TDP | 450W | 350W |

What does Ti stand for in a GPU name?

In the context of GPUs, “Ti” stands for “Titanium.” NVIDIA adds this suffix to designate higher-performance versions of certain GPU models.

GPUs with a “Ti” designation typically offer improved specifications and performance compared to their non-Ti counterparts.

Which RTX graphics card is the lowest?

Regarding performance, the NVIDIA GeForce RTX 3050 (or its variants) would generally be considered the lowest-tier RTX graphics card.

However, it’s worth noting that even the lower-tier RTX models offer improved features and capabilities compared to their GTX predecessors.

Is the 2080 considered a high-end GPU?

Yes, the NVIDIA GeForce RTX 2080 (and its variants, such as the 2080 Super and 2080 Ti) launched as a high-end GPU. It still offers strong performance for gaming and professional applications, although newer RTX generations have since surpassed it.

Does ray tracing lower FPS?

Enabling ray tracing usually lowers FPS (frames per second) because it requires additional computational resources. The size of the drop depends on the game, the scene complexity, and the specific GPU’s ray tracing capabilities.

However, advancements in GPU technology and optimization techniques aim to minimize the performance impact while delivering improved visual quality.

Here’s a table summarizing how ray tracing affects FPS:-

| Question | Answer |
|---|---|
| What is ray tracing? | Ray tracing is a rendering technique that simulates the behavior of light in a virtual environment to create more realistic and accurate images. |
| How does ray tracing affect FPS? | Ray tracing can lower FPS (frames per second) because it requires more computational power to simulate the behavior of light. |
| What hardware is required for ray tracing? | Real-time ray tracing at playable frame rates requires a graphics card with hardware acceleration for ray tracing, such as an Nvidia GeForce RTX card, an AMD Radeon RX 6000-series or newer card, or an Intel Arc card. |
| How much of an FPS drop can you expect with ray tracing? | The amount of FPS drop with ray tracing varies depending on the game, the hardware, and the settings used. It can range from a few frames to a significant drop in performance. |
| Can you turn off ray tracing to increase FPS? | Yes, turning off ray tracing can increase FPS in games that support it. However, the game’s visual quality may be reduced as a result. |
| Is ray tracing worth the FPS drop? | This depends on personal preference. Some gamers value visual fidelity over performance, while others prioritize higher FPS for smoother gameplay. It’s important to find a balance that works for you. |

Can a GTX 1060 run Minecraft with RTX?

No. Minecraft’s ray tracing mode requires a GPU with hardware ray tracing, such as a GeForce RTX card (or an AMD Radeon RX 6000-series or newer card), and the GTX 1060 has no RT cores.

Does the GTX 1060 support RTX features?

No. The GTX 1060 lacks the RT cores and Tensor cores that RTX features such as hardware-accelerated ray tracing and DLSS depend on.

What GPU does NASA use?

NASA uses a range of graphics processing units (GPUs) across its projects.

GPUs that NASA has used or is currently using include:-

NVIDIA Tesla K40:- This GPU was used in the Pleiades supercomputer at NASA’s Ames Research Center for simulations, data processing, and machine learning.

NVIDIA Tesla P100:- The Pleiades supercomputer used this GPU for activities like simulations and data analysis.

NVIDIA Tesla V100:- The Pleiades supercomputer uses this GPU for simulations and machine learning.

NVIDIA Quadro P5000:- NASA’s Advanced Supercomputing (NAS) Division uses this GPU for simulations and visualization.

NVIDIA Quadro P6000:- The NAS Division uses this GPU for simulations and visualization-related tasks.

To meet the requirements of various applications and workloads, NASA uses a variety of GPUs. These GPUs are selected based on their strong performance and capacity for handling challenging workloads.

How to choose the best graphics card for you

When selecting a graphics card, the following criteria should be taken into account:-

Budget:- For many buyers, cost is the most important factor. Set a spending limit, then look for a card that falls within it.

Performance:- Consider the games you play and the screen resolution you prefer. More powerful cards can run demanding games at higher resolutions and frame rates.

Compatibility:- Make sure your motherboard has a suitable PCIe slot, your power supply delivers enough wattage and has the right power connectors, and the card physically fits in your computer’s case.

Brand and Warranty:- Consider the brand and the warranty length. Some manufacturers have a reputation for high-quality cards that are less likely to develop problems, and a longer warranty adds peace of mind.

Future Proofing:- Consider how long you plan to keep the card. If you intend to keep it for several years, a more powerful card may be worth the extra cost because it will handle new games for longer.
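The budget and performance criteria can be combined into a simple shortlist. The Python sketch below uses the launch prices and memory sizes from the Nvidia table earlier in this article, keeps only cards within a budget that meet a minimum VRAM requirement, and sorts them from most to least expensive. The `Card` type, the `shortlist` helper, and the example thresholds are hypothetical, for illustration only. Street prices vary by retailer and over time, and VRAM alone does not measure performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    price_usd: int  # launch price from the table, not a current street price
    vram_gb: int


# Cards from the table (the Quadro RTX 8000 is left out because only a price range is listed).
CARDS = [
    Card("Nvidia Titan RTX", 2499, 24),
    Card("Nvidia GeForce RTX 3090", 1499, 24),
    Card("Nvidia GeForce RTX 3080", 699, 10),
    Card("Nvidia GeForce RTX 3070", 499, 8),
    Card("Nvidia GeForce RTX 3060", 329, 12),
]


def shortlist(cards: list[Card], budget_usd: int, min_vram_gb: int) -> list[Card]:
    """Hypothetical helper: cards within budget with enough VRAM, priciest first.

    Sorting by price is a rough heuristic (within one lineup, a pricier card is
    usually faster), not a substitute for benchmarks.
    """
    matches = [c for c in cards if c.price_usd <= budget_usd and c.vram_gb >= min_vram_gb]
    return sorted(matches, key=lambda c: c.price_usd, reverse=True)


if __name__ == "__main__":
    for card in shortlist(CARDS, budget_usd=800, min_vram_gb=10):
        print(f"{card.name}: ${card.price_usd}, {card.vram_gb} GB")
    # prints:
    # Nvidia GeForce RTX 3080: $699, 10 GB
    # Nvidia GeForce RTX 3060: $329, 12 GB
```

With an $800 budget and a 10 GB minimum, the RTX 3070 drops out on memory and the RTX 3090 and Titan RTX drop out on price.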

Is RTX more expensive than GTX?

Nvidia’s RTX graphics cards are often more expensive than its GeForce GTX cards.

This is because RTX graphics cards are newer and include more sophisticated capabilities, such as hardware-accelerated real-time ray tracing and AI-powered image upscaling (DLSS).

Conclusion

In conclusion, the most expensive graphics cards stand tall as pinnacles of technological excellence and sheer power in the world of graphics cards.

As we’ve explored throughout this article, these high-end graphics cards offer unparalleled performance and cutting-edge features that push the boundaries of visual computing.

Investing in an expensive graphics card is not just about owning the latest and greatest technology; it’s about unlocking a new level of gaming, rendering, and creative possibilities.

Whether you’re a hardcore gamer, a professional video editor, or a 3D designer, these top-tier graphics cards can elevate your experience to new heights.

While the price tag of these graphics cards may seem daunting, it’s important to consider their long-term benefits and value to your work or play.

With faster rendering times, smoother gameplay, and the ability to handle demanding tasks effortlessly, the investment can improve productivity and provide an immersive visual experience like never before.

Remember, the most expensive graphics card symbolizes innovation and technological prowess, designed to cater to the needs of the most discerning users.

So, to stay ahead of the curve and experience the epitome of graphics performance, consider investing in the most expensive graphics card available today.

Over the years, the world of graphics cards has witnessed remarkable advancements, and the most expensive graphics card represents the epitome of these achievements.

Embrace the power, embrace the future, and unlock a world of limitless possibilities with the most expensive graphics card at your fingertips.