Have you ever experienced slow internet speeds or connectivity issues on your computer? While troubleshooting, you may have come across the term “Microsoft Network Adapter Multiplexor Protocol” in your network adapter settings and wondered what it does.

Dealing with slow internet speeds can be a huge hindrance, especially if you rely on your computer for work or other important tasks. And when you don’t know what’s causing the issue, it can be even more frustrating.

Fortunately, understanding the Microsoft Network Adapter Multiplexor Protocol can help you tell whether it is related to the speed or connectivity problems on your computer, and how to resolve them if it is.

In this article, we’ll look closer at this protocol, what it does, and how to troubleshoot any problems you may be experiencing.

So, let’s get started!

What is the Microsoft network adapter multiplexor protocol?💁

Microsoft’s Network Adapter Multiplexor Protocol combines multiple network adapters into a virtual adapter, enhancing network bandwidth and fault tolerance in Windows operating systems.

The protocol creates a virtual adapter that accesses multiple physical adapters, distributing data for increased network bandwidth. If a physical adapter fails, the virtual adapter continues to function with the remaining adapters.
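To make the distribution and failover idea concrete, here is a simplified Python model. It is not Windows' actual algorithm: each connection ("flow") is hashed on its addresses and ports to pick one member adapter, and when a member fails, the placement is recomputed over the adapters that remain. The function names and the adapter names `NIC1`/`NIC2` are hypothetical, and Windows NIC Teaming offers several load-balancing modes of its own, so treat this only as an illustration of the principle.

```python
import hashlib
from typing import Dict, List, Tuple

# A flow is identified here by (source IP, destination IP, source port, destination port).
Flow = Tuple[str, str, int, int]


def pick_member(flow: Flow, members: List[str]) -> str:
    """Map a flow to one team member using a stable hash of its address/port tuple."""
    if not members:
        raise RuntimeError("no team members are up; the team adapter is down")
    key = "|".join(str(part) for part in flow).encode()
    # hashlib gives the same result on every run (Python's built-in hash() does not).
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return members[digest % len(members)]


def distribute(flows: List[Flow], members: List[str]) -> Dict[str, List[Flow]]:
    """Return {member: [flows]} to show how traffic is spread across the team."""
    placement: Dict[str, List[Flow]] = {m: [] for m in members}
    for flow in flows:
        placement[pick_member(flow, members)].append(flow)
    return placement


if __name__ == "__main__":
    flows = [("10.0.0.5", "10.0.0.9", 50000 + i, 445) for i in range(6)]
    print(distribute(flows, ["NIC1", "NIC2"]))
    # Simulate NIC1 failing: placement is recomputed over the surviving member.
    print(distribute(flows, ["NIC2"]))
```

Because a given flow always hashes to the same member, one connection uses only one adapter at a time; the extra bandwidth appears only when there are many flows.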

The Network Adapter Multiplexor Protocol is mainly used in server environments, such as data centers, where network reliability and bandwidth are critical.

It is also utilized in virtualization environments, like Microsoft Hyper-V, to provide virtual machines with access to multiple physical network adapters.

It is a kernel-mode driver for Network Interface Card (NIC) bonding. By default, the protocol is installed as part of the physical network adapter initialization.

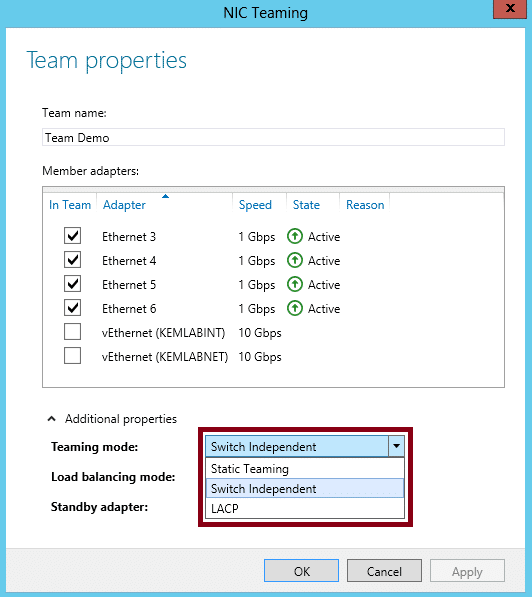

The main purpose of this protocol is NIC Teaming. NIC Teaming, often referred to as Load Balancing/Failover (LBFO), lets you install additional physical Ethernet network adapters (NICs) in your server and “team” them into one virtual NIC that provides better performance and fault tolerance.

Here’s a table that outlines some key points about the Microsoft Network Adapter Multiplexor Protocol:-

| Question | Answer |
|---|---|
| What is the Microsoft Network Adapter Multiplexor Protocol? | It is a network protocol used to combine multiple network adapters into a single virtual adapter. |
| Do I need the protocol? | It depends on your specific network configuration and needs. |
| When should I use the protocol? | When you have multiple network adapters and want to combine their bandwidth, increase network reliability, or load-balance network traffic. |
| What are the potential benefits of using the protocol? | It can provide fault tolerance and increase total network throughput across multiple connections. |
| What are the potential drawbacks of using the protocol? | It may add complexity to your network configuration, increase administrative overhead, and cause compatibility issues with certain network hardware or software. |
| How do I enable the protocol? | It is normally installed with each network adapter and bound automatically to the team adapter when you create a NIC team. You can also check it in the adapter's Properties window (Network and Sharing Center > Change adapter settings). |
| How do I know if the protocol is already installed? | Open the network adapter's Properties window in Network Connections (ncpa.cpl) and look for it in the list of items. It does not appear in Device Manager. |
| How do I disable the protocol? | Uncheck it in the network adapter's Properties window (Network and Sharing Center > Change adapter settings). |
| Are there any alternatives to using the protocol? | Yes. Some NIC vendors provide their own teaming or link-aggregation utilities. These may have different advantages and disadvantages, so choose the option that best fits your needs. |
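The table mentions checking whether the protocol is installed. On recent versions of Windows, the NetAdapter PowerShell module's `Get-NetAdapterBinding` cmdlet reports this per adapter, and the protocol's component ID is `ms_implat`. The Python sketch below runs that cmdlet and parses its JSON output; `parse_bindings` and `query_bindings` are hypothetical helper names, and only the parsing part can be tried on a non-Windows machine.

```python
import json
import subprocess
from typing import Dict

# PowerShell pipeline that lists the Multiplexor binding (component ID ms_implat) per adapter.
PS_COMMAND = (
    "Get-NetAdapterBinding -ComponentID ms_implat | "
    "Select-Object Name, Enabled | ConvertTo-Json"
)


def parse_bindings(json_text: str) -> Dict[str, bool]:
    """Turn ConvertTo-Json output into {adapter name: checked?}."""
    data = json.loads(json_text) if json_text.strip() else []
    if isinstance(data, dict):  # a single adapter is emitted as an object, not a list
        data = [data]
    return {item["Name"]: bool(item["Enabled"]) for item in data}


def query_bindings() -> Dict[str, bool]:
    """Run the pipeline on Windows (requires powershell.exe on PATH)."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_COMMAND],
        capture_output=True, text=True, check=True,
    )
    return parse_bindings(result.stdout)
```

On a typical single-adapter PC you would expect one entry with the value `False`.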

Do I need Microsoft network adapter multiplexor protocol?

Whether or not you need the Microsoft Network Adapter Multiplexor Protocol depends on your specific networking needs.

If you only have a single network adapter and do not require any of the features provided by the multiplexor protocol, then you may not need it.

On the other hand, if you have multiple network adapters and want to increase network performance or provide fault tolerance, then the multiplexor protocol may be useful.

Here’s a table that summarizes the main points about whether you may need the Microsoft Network Adapter Multiplexor Protocol:-

| Criteria | Need for Microsoft Network Adapter Multiplexor Protocol |
|---|---|
| Multiple network adapters | You may need the protocol if you have multiple network adapters installed on your computer and want to combine their bandwidth. |
| Network speed | If you need more total throughput, for example for several large file transfers or media streams at once, the protocol can help. A single transfer usually travels over one adapter, so it will not exceed that adapter's speed. |
| Network infrastructure | If your network infrastructure supports link aggregation or teaming, you may want to use the protocol. |
| Home vs. business use | For home use, the protocol is rarely needed because most home computers have only one network adapter. For business use, it may be needed to increase network performance or reliability. |
| Compatibility | The protocol is only compatible with certain network adapters and operating systems. Check compatibility before attempting to use it. |
| Skill level | Configuring the protocol can require advanced technical knowledge. Consider seeking professional assistance if you are uncomfortable making changes to your network configuration. |
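The "Network speed" row is easiest to see with a little arithmetic. The sketch below is a best-case estimate under stated assumptions (each flow pinned to one member adapter, flows spread evenly, nothing else such as disk or the remote end acting as a bottleneck); `estimate_throughput_mbps` is a hypothetical helper, not a Windows tool.

```python
from typing import List


def estimate_throughput_mbps(member_speeds_mbps: List[float], concurrent_flows: int) -> float:
    """Best-case aggregate throughput of a team under a simplified model.

    Assumes each flow is pinned to one member, so a single flow never exceeds
    one adapter's speed, and flows are spread over the fastest members first.
    """
    if concurrent_flows <= 0 or not member_speeds_mbps:
        return 0.0
    # With N flows, at most N members can be busy at the same time.
    busy = sorted(member_speeds_mbps, reverse=True)[:concurrent_flows]
    return float(sum(busy))


if __name__ == "__main__":
    team = [1000, 1000]  # two 1 Gbps adapters
    print(estimate_throughput_mbps(team, 1))  # one file copy
    print(estimate_throughput_mbps(team, 8))  # many parallel transfers
```

Under this model, two 1 Gbps adapters give about 1000 Mbps for one file copy and up to about 2000 Mbps only when at least two transfers run in parallel.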

How to enable Microsoft network adapter multiplexor protocol

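The protocol is normally installed along with each network adapter and is bound automatically to the team adapter when you create a NIC team. If you need to check or add it manually, follow these steps:-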

Step 1:- Click the Start button and type “ncpa.cpl” in the search box. Press Enter to open the Network Connections window.

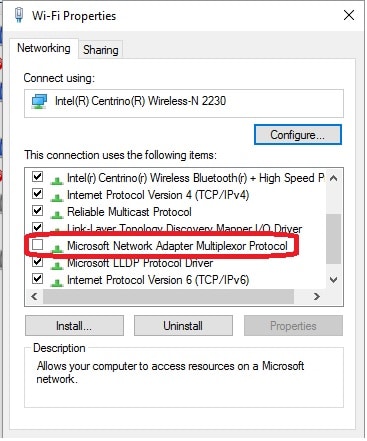

Step 2:- Right-click on the network adapter you want to configure and select Properties.

Step 3:- In the Properties window, click the Install button.

Step 4:- In the Select Network Feature Type window, select Protocol and click the Add button.

Step 5:- In the Select Network Protocol window, select Microsoft from the list of manufacturers and Network Adapter Multiplexor Protocol from the list of network protocols. Click on OK to continue.

Step 6:- After the protocol is installed, return to the Properties window of the network adapter and select the Network Adapter Multiplexor Protocol.

Step 7:- Click the Configure button to open the properties window.

Step 8:- In the properties window, select the Load Balancing option and choose the mode you want to use.

Step 9:- Click OK to save the changes and exit the properties window.
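The steps above use the graphical interface. On Windows Server, the team adapter that the Multiplexor protocol binds to is usually created with Server Manager or with the `New-NetLbfoTeam` PowerShell cmdlet. As a sketch, this Python helper assembles such a command and checks its options against the teaming modes and load-balancing algorithms that cmdlet accepts. The team name `Team1`, the member names and the helper itself are hypothetical; confirm the accepted values against your Windows Server version.

```python
from typing import List

# Values accepted by New-NetLbfoTeam's -TeamingMode and -LoadBalancingAlgorithm parameters.
TEAMING_MODES = {"SwitchIndependent", "Static", "Lacp"}
LB_ALGORITHMS = {"Dynamic", "TransportPorts", "IPAddresses", "MacAddresses", "HyperVPort"}


def ps_quote(value: str) -> str:
    """Quote a string for PowerShell with single quotes, doubling any embedded ones."""
    return "'" + value.replace("'", "''") + "'"


def build_team_command(name: str, members: List[str],
                       mode: str = "SwitchIndependent",
                       algorithm: str = "Dynamic") -> str:
    """Build a New-NetLbfoTeam command line (hypothetical helper, not a Windows API)."""
    # This sketch insists on two members, matching the teaming scenarios in this article.
    if len(members) < 2:
        raise ValueError("use at least two member adapters for bandwidth or failover")
    if mode not in TEAMING_MODES:
        raise ValueError(f"unknown teaming mode: {mode}")
    if algorithm not in LB_ALGORITHMS:
        raise ValueError(f"unknown load-balancing algorithm: {algorithm}")
    member_list = ",".join(ps_quote(m) for m in members)
    return (f"New-NetLbfoTeam -Name {ps_quote(name)} -TeamMembers {member_list} "
            f"-TeamingMode {mode} -LoadBalancingAlgorithm {algorithm}")


if __name__ == "__main__":
    print(build_team_command("Team1", ["NIC1", "NIC2"]))
```

Run the resulting command in an elevated PowerShell session on Windows Server. Client editions of Windows may refuse to create LBFO teams.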

How do I turn off Microsoft Network Adapter Multiplexor protocol?

To turn off the Microsoft Network Adapter Multiplexor protocol, follow these steps:-

- Open the Control Panel by searching for it in the Windows Start menu.

- Click on “Network and Sharing Center.”

- Click on “Change adapter settings” on the left-hand side of the window.

- Right-click on the network adapter that has the Multiplexor protocol enabled and select “Properties”.

- Scroll down and locate “Microsoft Network Adapter Multiplexor Protocol” in the list of items, then uncheck the box next to it.

- Click “OK” to save the changes.

Once you have disabled the Microsoft Network Adapter Multiplexor protocol, it will no longer be active on the selected network adapter.

If you want to re-enable it in the future, repeat the same steps and check the box next to the protocol to enable it again.
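The same change can be scripted. The NetAdapter PowerShell module provides `Disable-NetAdapterBinding` and `Enable-NetAdapterBinding`, and the Multiplexor protocol's component ID is `ms_implat`. The Python wrapper below is a minimal sketch that builds and runs those commands; `Ethernet` is a placeholder adapter name, the function names are hypothetical, and running it requires Windows and administrator rights.

```python
import subprocess

COMPONENT_ID = "ms_implat"  # component ID of Microsoft Network Adapter Multiplexor Protocol


def ps_quote(value: str) -> str:
    """Quote a string for PowerShell with single quotes, doubling any embedded ones."""
    return "'" + value.replace("'", "''") + "'"


def binding_command(adapter: str, enable: bool) -> str:
    """Build the PowerShell command that checks or unchecks the protocol on one adapter."""
    verb = "Enable" if enable else "Disable"
    return f"{verb}-NetAdapterBinding -Name {ps_quote(adapter)} -ComponentID {COMPONENT_ID}"


def set_multiplexor(adapter: str, enable: bool) -> None:
    """Apply the change on Windows; needs an elevated (administrator) session."""
    subprocess.run(
        ["powershell", "-NoProfile", "-Command", binding_command(adapter, enable)],
        check=True,
    )


if __name__ == "__main__":
    print(binding_command("Ethernet", enable=False))
```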

Uses of the Microsoft network adapter multiplexor protocol

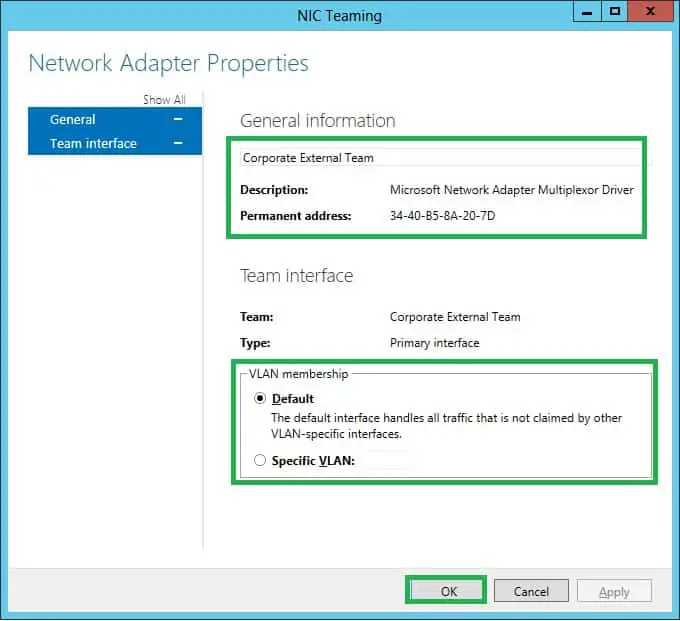

The only place the Microsoft Network Adapter Multiplexor protocol is normally checked is on the team (virtual) adapter.

It remains unchecked on the physical network adapters that are members of the NIC Team.

For example, if there are two physical network adapters in a team, the Microsoft Network Adapter Multiplexor protocol is unchecked on both physical adapters and checked on the team adapter.
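If you want to verify this layout, a small check like the following can help. It assumes you already have a mapping of adapter name to checkbox state, for example gathered from `Get-NetAdapterBinding -ComponentID ms_implat`; the adapter names `Team1`, `NIC1`, `NIC2` and the function are hypothetical.

```python
from typing import Dict, Iterable, List


def check_team_bindings(bindings: Dict[str, bool], team_adapter: str,
                        members: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means the layout looks as expected."""
    problems = []
    # The team (virtual) adapter should have the Multiplexor protocol checked.
    if not bindings.get(team_adapter, False):
        problems.append(f"{team_adapter}: Multiplexor protocol should be checked")
    # Physical members of the team should have it unchecked.
    for nic in members:
        if bindings.get(nic, False):
            problems.append(f"{nic}: Multiplexor protocol should be unchecked on a team member")
    return problems


if __name__ == "__main__":
    state = {"Team1": True, "NIC1": False, "NIC2": False}
    print(check_team_bindings(state, "Team1", ["NIC1", "NIC2"]))
```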

This driver is used for two scenarios in teaming. These scenarios require at least two connected network adapters on a single PC.

Scenario 1 (Adapter teaming): Two or more adapters are used simultaneously. This lets the computer send and receive more traffic in total than a single adapter could.

Scenario 2 (Adapter failover/high availability): A standby adapter takes over the network connection if the primary fails.
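Scenario 2 can be modelled as a priority list: traffic uses the first adapter whose link is up. This is a simplified Python sketch with hypothetical adapter names and a hypothetical function, not a description of how Windows tracks link state internally.

```python
from typing import Dict, List, Optional


def active_adapter(order: List[str], link_up: Dict[str, bool]) -> Optional[str]:
    """Return the first adapter in priority order whose link is up, or None if all are down."""
    for nic in order:
        if link_up.get(nic, False):
            return nic
    return None


if __name__ == "__main__":
    priority = ["NIC1", "NIC2"]  # NIC1 is primary, NIC2 is the standby adapter
    print(active_adapter(priority, {"NIC1": True, "NIC2": True}))
    print(active_adapter(priority, {"NIC1": False, "NIC2": True}))  # failover to standby
```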

If only one network adapter is connected, as is likely on a typical client PC, enabling this protocol has no effect: Windows disables it again automatically as soon as it detects that there are no adapters to team.

📗FAQs

Should you turn on Microsoft Network Adapter Multiplexor Protocol?

Enabling the Microsoft Network Adapter Multiplexor Protocol can be useful if you have multiple network adapters and want to improve network performance. However, it is not necessary for all users and may not be compatible with some network configurations.

What is Microsoft Network Adapter Multiplexor Protocol?

Microsoft Network Adapter Multiplexor Protocol is a network protocol that allows multiple network adapters to work together as a single connection. This can improve network performance and redundancy.

What is a network multiplexor?

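In general networking, a multiplexor (or multiplexer) combines several signals or data streams so they can share a single link. Microsoft's protocol works the other way around: it spreads the traffic of one logical (team) adapter across several physical adapters to improve performance and redundancy.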

How to disable Microsoft Network Adapter Multiplexor Protocol?

To disable Microsoft Network Adapter Multiplexor Protocol, you can go to the properties of your network adapter and uncheck the box next to “Microsoft Network Adapter Multiplexor Protocol” in the list of installed protocols.

How do I optimize my Ethernet connection?

To optimize your Ethernet connection, you can try several things, such as updating your network adapter drivers, disabling unnecessary network protocols, configuring your network adapter settings for performance, and minimizing network congestion.

Does teaming NICs increase bandwidth?

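Yes, in aggregate. Teaming combines the bandwidth of multiple network adapters, so many simultaneous connections can use more total bandwidth. A single connection usually stays on one adapter, though, so it is still limited to that adapter's speed. Teaming also adds redundancy.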

What is the difference between a Windows network adapter and a network interface?

A Windows network adapter is the device that connects a computer to a network; it can be physical or virtual (the team adapter created by NIC Teaming is virtual). A network interface is the software component through which the operating system communicates over that adapter. A network adapter needs a network interface to be usable.

Should I disable ARP offload?

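ARP offload lets the network adapter answer ARP requests on the computer's behalf while the computer is asleep, so it mainly affects power management and wake behavior rather than everyday throughput. Disabling it can help when troubleshooting sleep or connectivity problems with some adapters, but it is not necessary for most network configurations.

It is best to test with ARP offload enabled and disabled to determine the best option for your network.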

Conclusion

After exploring the Microsoft Network Adapter Multiplexor Protocol, it’s clear that this technology plays an important role in NIC Teaming, where it distributes network traffic across adapters and improves overall network performance.

By allowing multiple network adapters to work together as a single connection, the Multiplexor Protocol enables higher aggregate throughput and increased reliability.

However, it’s important to note that the Multiplexor Protocol is not always necessary or appropriate for every network configuration.

As with any technology, it’s essential to evaluate your network’s specific needs and goals before implementing the Multiplexor Protocol.

The Microsoft Network Adapter Multiplexor Protocol can be a valuable tool for optimizing network performance.

Still, it should be used judiciously and with a thorough understanding of its capabilities and limitations.

By making informed decisions about your network technology, you can keep your network reliable and efficient.