Every deep learning enthusiast knows that a good Graphics Processing Unit (GPU) isn’t just a nice-to-have—it’s a necessity.

You’re pushing boundaries, crunching massive datasets, and running intensive algorithms. You’re eager to break new ground in machine learning, but your current GPU isn’t keeping up.

It lags, overheats, and falls short of your required computational power. These hardware limitations can turn your deep learning pursuits into a nightmare, hindering your progress and stifling your breakthroughs.

You’ve heard tales of GPUs that promise stellar performance but end up disappointing. Perhaps you’ve fallen victim to their false allure and wasted money on overpriced units that still couldn’t meet the demands of your deep learning tasks.

This frustration builds up, casting a shadow over your AI research and development. Without the right GPU, you’re like a race car driver stuck with a clunky, old vehicle—forced to watch others zoom past.

But it doesn’t have to be this way. You deserve a GPU that can supercharge your deep learning projects, not hold them back.

A guide that cuts through the marketing fluff and delivers clear, objective reviews of the top-performing GPUs in the market is crucial.

That’s why we’ve put together this comprehensive guide on the best GPUs for deep learning.

We’ll unpack their features, analyze their performance, and give you the insights you need to choose the GPU that best fits your needs.

With the right GPU, you’ll be set to unlock the full potential of deep learning and redefine what’s possible.

Understanding Deep Learning GPUs

Deep Learning GPUs (Graphics Processing Units) are instrumental in driving the progress of artificial intelligence and machine learning.

To truly grasp the potential of deep learning, it is essential to understand Deep Learning GPUs and their significance in this field.

Deep Learning GPUs are specialized hardware that harnesses the power of parallel processing to accelerate the computational demands of deep neural networks.

These GPUs excel at performing intricate mathematical operations and optimizing matrix computations, which are integral to deep learning tasks.

Deep learning algorithms heavily rely on matrix multiplication and vector operations, and this is where GPUs shine.

Their parallel architecture allows for the simultaneous execution of multiple mathematical operations, dramatically reducing training times and increasing overall performance.
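To put a number on why matrix multiplication dominates the workload, here is a minimal sketch that counts the floating-point operations in a single matrix product and estimates the best-case time at a given peak rate. The function names and the 4096-sized example layer are illustrative assumptions, and real workloads rarely sustain a device's peak rate.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Floating-point operations for an (m x k) @ (k x n) product.

    Each of the m*n outputs needs k multiplications and about k additions,
    which is conventionally counted as 2*k operations.
    """
    return 2 * m * k * n


def ideal_seconds(flops: int, peak_tflops: float) -> float:
    """Lower bound on runtime if the device sustained its peak rate."""
    return flops / (peak_tflops * 1e12)


# Hypothetical layer: 4096 input vectors through a 4096 x 4096 weight matrix.
flops = matmul_flops(4096, 4096, 4096)
print(f"{flops / 1e9:.1f} GFLOPs for one multiplication")

# Illustrative peak rates only; sustained throughput is lower in practice.
for label, tflops in [("1 TFLOPS", 1.0), ("10 TFLOPS", 10.0), ("35.6 TFLOPS", 35.6)]:
    print(f"{label}: at least {ideal_seconds(flops, tflops) * 1e3:.2f} ms")
```

Because every output element can be computed independently, this is exactly the kind of work that spreads well across thousands of GPU cores.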

When evaluating GPUs for deep learning, it is crucial to consider factors such as memory capacity, compute capability, and tensor cores.

Memory capacity and bandwidth influence the amount of data that can be processed, while compute capability determines the GPU’s performance in handling complex calculations.

Tensor cores, on the other hand, are specialized units designed to accelerate matrix operations, a key component of deep learning.
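To make the memory-capacity factor concrete, the following rough sketch estimates how much GPU memory training needs just for weights, gradients, and optimizer state. It assumes FP32 values and an Adam-style optimizer that stores two extra values per parameter; activations are deliberately left out, so the result is a floor rather than a full estimate. The function names and parameter counts are hypothetical.

```python
def training_memory_gb(n_params: int, bytes_per_value: int = 4,
                       optimizer_states: int = 2) -> float:
    """Approximate memory (GiB) for weights, gradients, and optimizer state.

    Assumptions: FP32 storage (4 bytes) and two optimizer values per
    parameter (Adam-style). Activation memory is NOT included.
    """
    copies = 1 + 1 + optimizer_states  # weights + gradients + optimizer state
    return n_params * bytes_per_value * copies / 1024 ** 3


def fits(n_params: int, vram_gb: float) -> bool:
    """True if the floor estimate is below the card's memory capacity."""
    return training_memory_gb(n_params) < vram_gb


# Hypothetical model sizes checked against the capacities covered in this guide.
for n_params in (100_000_000, 1_000_000_000):
    need = training_memory_gb(n_params)
    ok = [gb for gb in (8, 10, 16, 24, 40) if fits(n_params, gb)]
    print(f"{n_params:,} parameters: at least {need:.1f} GiB, fits on {ok} GB cards")
```

Lower-precision formats reduce `bytes_per_value`, which is one reason mixed-precision training lets larger models fit on the same card.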

By leveraging the power of deep learning GPUs, researchers and developers can train and deploy models more efficiently, leading to breakthroughs in areas such as computer vision, natural language processing, and autonomous systems.

Deep Learning GPUs have revolutionized the field of AI, propelling advancements and enabling us to unlock the full potential of deep learning algorithms.

Here is our list of some of the best options:

Best GPU For Deep Learning To Unleash Next-Level AI

1. NVIDIA RTX 3090

NVIDIA RTX 3090 emerges as the epitome of technological excellence when considering the best GPUs for deep learning.

Its remarkable prowess in handling complex computational tasks makes it an indispensable tool for researchers and data scientists.

With an exquisite fusion of cutting-edge features and exceptional performance, the RTX 3090 stands tall as an unrivaled powerhouse.

One key factor that places the NVIDIA RTX 3090 at the forefront of deep learning is its unmatched computing capabilities.

Equipped with Tensor Cores and boasting an astonishing compute performance of 35.6 TFLOPS, this GPU traverses the complexities of neural network training with unparalleled efficiency.
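A figure like 35.6 TFLOPS can be reproduced with simple arithmetic: peak FP32 throughput is roughly the number of CUDA cores, times two operations per clock (one fused multiply-add), times the boost clock. The sketch below assumes 10,496 CUDA cores and a 1.695 GHz boost clock for the RTX 3090; those two inputs come from published specifications rather than from this guide, so treat them as assumptions.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Each core is assumed to retire one fused multiply-add (2 FLOPs) per clock.
    cores * 2 * GHz gives GFLOPS, so divide by 1000 for TFLOPS.
    """
    return cuda_cores * 2 * boost_clock_ghz / 1000.0


# Assumed RTX 3090 figures (not stated in this guide): 10496 cores, 1.695 GHz.
print(f"RTX 3090 peak: {peak_fp32_tflops(10496, 1.695):.1f} TFLOPS")
```

This is a theoretical ceiling. Real training throughput depends on memory bandwidth, kernel efficiency, and whether Tensor Cores are used.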

The ample memory size of 24GB provided by the RTX 3090 enables the seamless handling of massive datasets, granting researchers the freedom to tackle ambitious projects without constraints.

These Tensor Cores further enhance its deep learning capabilities, allowing for accelerated matrix operations and faster training of complex models.

Moreover, the NVIDIA RTX 3090 supports advanced graphics features like real-time ray tracing and DLSS (Deep Learning Super Sampling). These improve the visual quality of rendered simulations rather than speeding up model training, so they matter most if the same card also handles visualization work.

Though the NVIDIA RTX 3090 commands a higher price, its exceptional performance and robust feature set truly justify the investment.

For those seeking the pinnacle of GPU technology for deep learning, the NVIDIA RTX 3090 unquestionably reigns supreme, empowering researchers and data scientists to unravel the mysteries of artificial intelligence with unprecedented speed and accuracy.

Pros:-

Unmatched Performance: The NVIDIA RTX 3090 boasts exceptional computing capabilities, making it one of the most powerful GPUs for deep learning tasks. Its impressive 35.6 TFLOPS compute performance ensures swift and efficient neural network training.

Ample Memory: With a substantial memory size of 24GB, the RTX 3090 enables seamless handling of large datasets, allowing researchers to work with complex models and perform memory-intensive operations without compromise.

Tensor Cores: The Tensor Cores in the RTX 3090 accelerate deep learning tasks by delivering lightning-fast matrix operations, enabling faster model training and inference.

Advanced Features: The RTX 3090 supports cutting-edge features such as real-time ray tracing and DLSS, enhancing the visual quality of simulations and providing a more immersive deep learning experience.

Cons:-

High Cost: The NVIDIA RTX 3090 has a higher price tag than other GPUs, making it less accessible for those on a tight budget. Its premium performance and feature set are reflected in the price.

Power Consumption: The RTX 3090 is a power-hungry GPU that requires a robust power supply unit. Its high power consumption can increase electricity costs and require additional cooling solutions.

Availability and Scalability: Due to its popularity and high demand, the NVIDIA RTX 3090 may face availability issues, making it challenging to procure.

Additionally, scaling deep learning projects using multiple RTX 3090 GPUs can be expensive, limiting scalability for some users.
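The power and scaling costs mentioned above can be estimated with a short calculation. This is a minimal sketch that assumes a constant 350 W draw per card (the RTX 3090's rated board power, an assumption not stated in this guide) and a hypothetical electricity price; it ignores the rest of the system and any cooling overhead.

```python
def electricity_cost(watts: float, hours: float, price_per_kwh: float,
                     n_gpus: int = 1) -> float:
    """Energy cost of running n_gpus at a constant draw for the given hours."""
    kwh = watts / 1000.0 * hours * n_gpus
    return kwh * price_per_kwh


ASSUMED_BOARD_POWER_W = 350   # assumption: rated board power of one RTX 3090
ASSUMED_PRICE_PER_KWH = 0.15  # hypothetical price; substitute your own tariff

# Thirty days of continuous full-load training on 1, 2, and 4 cards.
for n in (1, 2, 4):
    cost = electricity_cost(ASSUMED_BOARD_POWER_W, 24 * 30, ASSUMED_PRICE_PER_KWH, n)
    print(f"{n} GPU(s): {cost:.2f} per 30 days (in your currency)")
```

The cost scales linearly with the number of cards, which is why multi-GPU setups need both a larger power supply and a larger running budget.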

Despite these limitations, the NVIDIA RTX 3090 remains an exceptional choice for deep learning enthusiasts who require top-of-the-line performance and cutting-edge features to tackle complex AI projects with precision and efficiency.

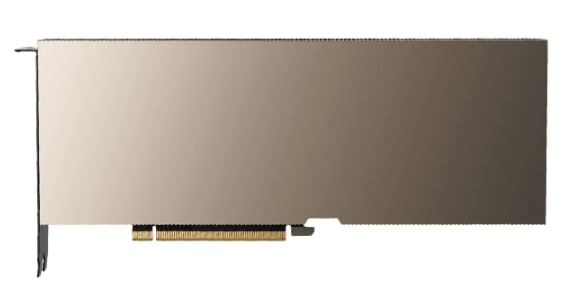

2. NVIDIA A100

The NVIDIA A100 emerges as the pinnacle of GPU technology for deep learning, firmly establishing its position as the best choice for data scientists and researchers.

With its exceptional features and unparalleled performance, the NVIDIA A100 sets a new standard in artificial intelligence.

The revolutionary Ampere architecture is at the heart of the NVIDIA A100, designed to deliver unprecedented computing power and efficiency.

Equipped with a staggering 40GB of high-bandwidth memory, this GPU offers ample capacity to handle large datasets and perform memory-intensive tasks with ease.

The NVIDIA A100 harnesses the power of Tensor Cores, enabling lightning-fast matrix operations and accelerating the training of deep neural networks.

Its remarkable compute performance of 19.5 TFLOPS (FP32) ensures swift and efficient processing, significantly reducing training times and enabling researchers to iterate and experiment at an unprecedented pace.

Moreover, the NVIDIA A100 introduces Multi-Instance GPU (MIG) technology, allowing for the virtual partitioning of the GPU into smaller instances.

This groundbreaking feature enables efficient resource allocation, empowering multiple users or workloads to run simultaneously on a single GPU.
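As a rough illustration of how MIG divides one card, the sketch below checks whether a set of requested instances fits on a single 40GB A100. The profile names and slice counts are assumptions based on NVIDIA's published MIG profiles for that card (7 compute slices and 8 memory slices of 5 GB each) and should be verified against the current MIG user guide. Real placement has additional rules that this simplified check ignores.

```python
# Assumed A100 40GB MIG profiles: name -> (compute slices, memory slices).
MIG_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7  # assumption for the 40GB A100
TOTAL_MEMORY_SLICES = 8   # assumption: 8 slices of 5 GB each


def fits_on_one_gpu(requested: list[str]) -> bool:
    """Simplified capacity check: totals must not exceed the card's slices.

    This ignores NVIDIA's placement constraints, so a True result means
    "not obviously impossible" rather than "guaranteed valid".
    """
    compute = sum(MIG_PROFILES[name][0] for name in requested)
    memory = sum(MIG_PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits_on_one_gpu(["1g.5gb"] * 7))          # seven small instances
print(fits_on_one_gpu(["4g.20gb", "4g.20gb"]))  # needs 8 compute slices
```

In practice, the partitions are created with NVIDIA's own tooling; a check like this is only useful for planning how many users or jobs a single card might serve.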

While the NVIDIA A100 represents the epitome of deep learning GPUs, it’s important to note that its cutting-edge technology comes at a premium price.

However, for those who demand the highest performance, scalability, and efficiency levels, the NVIDIA A100 remains unrivaled in its ability to accelerate the most demanding deep learning workloads.

Pros:-

Unmatched Performance: The NVIDIA A100 stands out as the best GPU for deep learning, offering exceptional performance powered by the revolutionary Ampere architecture.

Its remarkable compute performance of 19.5 TFLOPS and 40GB of high-bandwidth memory enable swift and efficient processing of complex AI workloads.

Tensor Cores: Equipped with Tensor Cores, the A100 delivers accelerated matrix operations, significantly speeding up deep neural network training and inference.

This feature enhances productivity and allows researchers to iterate and experiment at an accelerated pace.

Multi-Instance GPU (MIG): The introduction of MIG technology in the A100 enables efficient resource allocation, allowing multiple users or workloads to run simultaneously on a single GPU. This enhances scalability, maximizes GPU utilization, and optimizes cost efficiency.

Advanced Features: The A100 is a data-center accelerator rather than a graphics card, so it does not offer hardware-accelerated ray tracing or DLSS. Its advanced features are compute-oriented instead: third-generation Tensor Cores with TF32 and structured-sparsity support, plus NVLink for scaling across multiple GPUs.

Cons:-

High Cost: The NVIDIA A100 is a premium GPU, commanding a high price tag that may be prohibitive for users on a limited budget. Its cutting-edge technology and superior performance come at a premium.

Power Consumption and Cooling: The A100 is a power-hungry GPU that requires a robust power supply and efficient cooling solutions. High power consumption may increase electricity costs and necessitate adequate cooling infrastructure.

Availability and Compatibility: The NVIDIA A100 may face availability issues due to its high demand. Additionally, its compatibility with existing systems and frameworks may require users to update their infrastructure or software stack to leverage its capabilities fully.

Despite these considerations, the NVIDIA A100 remains the ultimate choice for those seeking uncompromising performance, scalability, and efficiency in deep learning applications.

Its groundbreaking features and exceptional capabilities empower researchers and data scientists to push the boundaries of AI and unlock new insights with unparalleled speed and precision.

3. NVIDIA RTX 3080

The NVIDIA RTX 3080 emerges as a formidable contender for the title of the best GPU for deep learning, capturing the attention of data scientists and AI enthusiasts.

With its exceptional blend of groundbreaking features and raw power, the NVIDIA RTX 3080 establishes itself as a force to be reckoned with.

Harnessing the might of the Ampere architecture, the NVIDIA RTX 3080 unleashes an unparalleled level of compute performance that revolutionizes deep learning workflows.

With Tensor Cores at its core, this GPU accelerates matrix operations, propelling neural network training to new heights.

The 10GB of GDDR6X memory offered by the NVIDIA RTX 3080 facilitates the seamless handling of large datasets and complex models, allowing data scientists to delve into intricate AI projects without compromising performance.

Moreover, the NVIDIA RTX 3080 introduces innovative technologies such as DLSS (Deep Learning Super Sampling) and real-time ray tracing, elevating deep learning simulations’ visual fidelity and realism.

These advanced features provide a compelling edge for researchers seeking to explore the frontiers of artificial intelligence.

While the NVIDIA RTX 3080 sits in a mid-range price bracket, its exceptional performance, efficiency, and feature set make it an appealing choice for professionals and enthusiasts.

With the NVIDIA RTX 3080 at their disposal, deep learning practitioners can push the boundaries of AI, unlocking new realms of knowledge and driving innovation forward.

Pros:-

Impressive Performance: The NVIDIA RTX 3080 delivers exceptional computing performance powered by the Ampere architecture and Tensor Cores. This enables accelerated matrix operations, resulting in faster deep neural network training and inference.

Ample Memory Capacity: With 10GB of GDDR6X memory, the RTX 3080 can handle large datasets and complex AI models. This allows data scientists to work with intricate architectures and efficiently process extensive amounts of data.

Advanced Features: Including DLSS (Deep Learning Super Sampling) and real-time ray tracing in the RTX 3080 enhances deep learning simulations’ visual quality and realism. These features contribute to a more immersive and engaging AI experience.

Value for Money: The NVIDIA RTX 3080 offers a compelling balance between performance and price. It provides high-end deep learning capabilities at a relatively more affordable cost than top-tier GPUs, making it an attractive choice for professionals and enthusiasts.

Cons:-

Power Consumption and Cooling: The RTX 3080 is a power-hungry GPU that requires a robust power supply and an efficient cooling system to maintain optimal performance. Higher power consumption may increase electricity costs and necessitate adequate cooling infrastructure.

Availability and Scalability: The RTX 3080 may face availability challenges due to high demand, making it difficult to acquire. Additionally, scaling deep learning projects using multiple RTX 3080 GPUs can be expensive and require careful consideration of system compatibility and power requirements.

Memory Limitations: While the 10GB memory capacity is ample for most deep learning tasks, there may be scenarios where larger models or datasets exceed this limit. In such cases, users may need to consider GPUs with higher memory capacities.

Despite these considerations, the NVIDIA RTX 3080 remains a compelling choice for deep learning enthusiasts and professionals.

Its exceptional performance, advanced features, and relatively affordable price make it a versatile GPU that can accelerate AI research and development.

4. NVIDIA RTX 3070

The NVIDIA RTX 3070 emerges as a remarkable choice when considering the best GPU for deep learning, captivating the attention of data scientists and AI enthusiasts.

The NVIDIA RTX 3070 delivers a powerful and efficient computing experience with its exceptional performance and advanced features.

Powered by the NVIDIA Ampere architecture, the RTX 3070 harnesses the potential of Tensor Cores, allowing for accelerated matrix operations and enhancing the training of deep neural networks.

This combination of cutting-edge technology enables data scientists to tackle complex AI tasks with precision and speed.

Equipped with 8GB of GDDR6 memory, the NVIDIA RTX 3070 offers ample capacity to handle diverse deep learning workloads, easily accommodating large datasets and complex models.

This ensures seamless processing and fosters efficient experimentation in pursuing innovative AI solutions.

Moreover, the NVIDIA RTX 3070 introduces advanced features such as real-time ray tracing and support for DLSS (Deep Learning Super Sampling), which enhance visual realism and optimize performance in deep learning simulations.

While the NVIDIA RTX 3070 is an excellent GPU for deep learning, it may face availability challenges due to high demand.

Additionally, GPUs with higher memory capacities may be more suitable for those with more extensive memory requirements or larger-scale projects.

Nonetheless, the NVIDIA RTX 3070 offers an exceptional balance of performance, efficiency, and value, making it an appealing choice for data scientists seeking a robust GPU to accelerate their deep learning endeavors.

Pros:-

Impressive Performance: The NVIDIA RTX 3070 delivers excellent performance, driven by the power of the Ampere architecture and Tensor Cores.

This enables fast and efficient deep neural network training, allowing data scientists to work with complex AI models and handle demanding computational tasks.

Memory Capacity: With 8GB of GDDR6, the RTX 3070 provides ample memory capacity for most deep learning workloads.

It can handle large datasets and facilitate smooth processing, enabling researchers to explore intricate AI architectures and experiment effectively.

Advanced Features: Including real-time ray tracing and DLSS (Deep Learning Super Sampling) in the RTX 3070 enhances deep learning simulations’ visual quality and realism. These advanced features contribute to a more immersive and accurate AI experience.

Value for Money: The NVIDIA RTX 3070 offers a compelling balance between performance and price. It provides high-end deep learning capabilities at a relatively affordable cost, making it an attractive option for data scientists and AI enthusiasts on a budget.

Cons:-

Availability: Due to high demand, the RTX 3070 may experience availability challenges, making it difficult to acquire. Potential buyers may need to monitor stock and be prepared for limited availability or price fluctuations.

Memory Limitation: While 8GB of memory is sufficient for most deep learning tasks, there may be scenarios where larger models or datasets require more memory. In such cases, users may need to consider GPUs with higher memory capacities.

Power Consumption and Cooling: The RTX 3070 is a powerful GPU that can consume significant power and generate heat.

It requires a robust power supply and efficient cooling solution to ensure optimal performance and prevent overheating.

Despite these considerations, the NVIDIA RTX 3070 remains an attractive choice for data scientists and researchers looking for a capable GPU for deep learning.

Its impressive performance, advanced features, and reasonable price make it a versatile option to accelerate AI development and enable innovative solutions.

5. AMD Radeon VII

The AMD Radeon VII emerges as a compelling option when considering the best GPU for deep learning, captivating the attention of data scientists and AI enthusiasts with its impressive capabilities.

With its advanced features and exceptional performance, the AMD Radeon VII proves to be a formidable contender in deep learning.

Equipped with 16GB of HBM2 memory, the AMD Radeon VII offers substantial memory capacity, enabling researchers to handle large datasets and complex models easily.

This vast memory capacity ensures efficient processing and smooth operation in memory-intensive deep-learning tasks.

The AMD Radeon VII utilizes the Vega architecture and features a powerful computing engine that delivers impressive performance for AI workloads.

With a compute performance of 13.8 TFLOPS, this GPU provides the computational power necessary for training and running complex neural networks.

Furthermore, the AMD Radeon VII supports OpenCL and ROCm frameworks, providing flexibility and compatibility with a wide range of deep learning frameworks and software.

While the AMD Radeon VII offers exceptional performance and memory capacity, it’s important to note that it lacks specialized hardware like Tensor Cores, which can limit its acceleration capabilities in certain deep learning tasks compared to other GPUs.

Nevertheless, the AMD Radeon VII remains a strong choice for data scientists and AI researchers seeking a high-performance GPU for deep learning.

Its impressive memory capacity, computational power, and compatibility make it a valuable asset in tackling complex AI projects with precision and efficiency.

Pros:-

Ample Memory Capacity: The AMD Radeon VII boasts a generous 16GB of HBM2 memory, providing substantial capacity for handling large datasets and memory-intensive deep learning tasks. This enables researchers to work with complex models and efficiently process extensive amounts of data.

Impressive Compute Performance: With a compute performance of 13.8 TFLOPS, the AMD Radeon VII delivers powerful computational capabilities, allowing for faster deep neural network training and inference. This performance benefits researchers seeking to iterate and experiment with complex AI models.

Flexible Compatibility: The AMD Radeon VII supports OpenCL and ROCm frameworks, ensuring compatibility with a wide range of deep learning frameworks and software. This flexibility allows data scientists to choose the tools that best suit their needs and preferences.

Cons:-

Lack of Specialized Hardware: The AMD Radeon VII lacks specialized hardware like Tensor Cores found in other GPUs, which can limit its acceleration capabilities for certain deep learning tasks. This may result in slightly slower performance compared to GPUs with dedicated AI hardware.

Power Consumption and Heat Generation: The AMD Radeon VII has higher power consumption and heat generation than some other GPUs. This can increase electricity costs and require more robust cooling solutions to maintain optimal performance.

Limited Availability and Support: Due to the discontinuation of the AMD Radeon VII, availability may be limited, and support from the manufacturer might diminish over time. This can impact long-term maintenance and compatibility with future software updates.

Despite these considerations, the AMD Radeon VII remains a strong choice for deep learning enthusiasts who prioritize memory capacity and compute performance.

Its ample memory, impressive computational capabilities, and flexible compatibility make it a valuable GPU for tackling various AI tasks and conducting cutting-edge research.

6. NVIDIA RTX 3060 Ti

The NVIDIA RTX 3060 Ti stands out as an exceptional choice when considering the best budget GPU for deep learning, captivating the attention of data scientists and AI enthusiasts who seek high performance at an affordable price point.

With its impressive capabilities and cost-effectiveness, the NVIDIA RTX 3060 Ti is a formidable option for deep learning on a budget.

Despite being a mid-range GPU, the NVIDIA RTX 3060 Ti packs a powerful punch. Its 8GB of GDDR6 memory and 4864 CUDA cores deliver remarkable performance for deep learning tasks.

The ample memory capacity allows for the efficient processing of datasets, while the CUDA cores contribute to accelerated neural network training and inference.

The RTX 3060 Ti also features Tensor Cores, which enable lightning-fast matrix operations and enhance the performance of deep learning workloads.

This advanced feature sets it apart from other GPUs in its price range, offering superior AI acceleration capabilities.

While the NVIDIA RTX 3060 Ti is a budget-friendly option, it may face availability challenges due to high demand.

Additionally, users with extensive memory requirements or those working with larger-scale projects may need to consider GPUs with higher memory capacities.

Nevertheless, the NVIDIA RTX 3060 Ti strikes a remarkable balance between affordability and performance, making it an appealing choice for data scientists and AI enthusiasts on a budget.

Its impressive specifications and advanced features empower researchers to explore the depths of deep learning without compromising on quality or breaking the bank.

Pros:-

Cost-Effective: The NVIDIA RTX 3060 Ti offers impressive deep learning capabilities at a budget-friendly price point, making it an excellent choice for data scientists and AI enthusiasts on a limited budget.

Ample Memory Capacity: With 8GB of GDDR6 memory, the RTX 3060 Ti provides sufficient capacity for handling diverse deep learning workloads, allowing for efficient processing of datasets and models.

Tensor Cores: The Tensor Cores in the RTX 3060 Ti enable accelerated matrix operations and enhance the performance of deep learning tasks, contributing to faster neural network training and inference.

Power Efficiency: The RTX 3060 Ti is known for its power efficiency, balancing performance and energy consumption. It allows users to conduct deep learning tasks without significantly impacting their electricity costs.

Cons:-

Availability Challenges: Due to high demand, the RTX 3060 Ti may face availability challenges, making it difficult to acquire. Potential buyers may need to monitor stock and be prepared for limited availability or price fluctuations.

Memory Limitation: While 8GB of memory is sufficient for most deep learning tasks, there may be scenarios where larger models or datasets require more memory. In such cases, users may need to consider GPUs with higher memory capacities.

Limited Scaling: The RTX 3060 Ti’s performance may not scale as effectively as higher-end GPUs when working with large-scale deep learning projects. Users seeking extensive scalability may need to consider GPUs with more CUDA cores and higher memory capacities.

Despite these considerations, the NVIDIA RTX 3060 Ti remains a strong choice for budget-conscious data scientists and AI enthusiasts.

Its cost-effectiveness, ample memory capacity, and Tensor Cores make it a valuable asset for accelerating deep learning workflows without compromising performance or breaking the bank.

7. AMD Radeon RX 6900 XT

The AMD Radeon RX 6900 XT boldly claims its position as the best budget GPU for deep learning, captivating data scientists and AI enthusiasts with its impressive capabilities and affordability.

With its advanced features and remarkable performance, the AMD Radeon RX 6900 XT emerges as a powerful contender for deep learning tasks on a budget.

Equipped with 16GB of GDDR6 memory, the AMD Radeon RX 6900 XT offers ample capacity to handle large datasets and complex deep-learning models.

This ensures efficient processing and enables researchers to delve into intricate AI projects without compromise.

The Radeon RX 6900 XT delivers exceptional performance, powered by its RDNA 2 architecture and a massive 80 compute units.

These features enable fast and efficient deep neural network training and inference, empowering data scientists to tackle complex AI workloads precisely and quickly.

Furthermore, the AMD Radeon RX 6900 XT supports advanced technologies like AMD Infinity Cache and Smart Access Memory, which enhance memory performance and enable improved data access, leading to enhanced overall deep learning performance.

While the AMD Radeon RX 6900 XT is a budget-friendly option, it may have limited availability due to high demand.

Additionally, users with more extensive memory requirements or those working on larger-scale projects may need to consider GPUs with higher memory capacities.

Nonetheless, the AMD Radeon RX 6900 XT strikes an impressive balance between affordability and performance, making it an attractive choice for data scientists and AI enthusiasts on a budget.

Its impressive specifications, advanced features, and cost-effectiveness empower researchers to accelerate their deep learning projects without compromising quality or breaking the bank.

Pros:-

Affordability: The AMD Radeon RX 6900 XT offers impressive deep learning capabilities at a budget-friendly price point, making it an excellent choice for data scientists and AI enthusiasts seeking cost-effective options.

Ample Memory Capacity: With 16GB of GDDR6 memory, the Radeon RX 6900 XT provides sufficient capacity to handle large datasets and complex deep learning models, enabling efficient processing and smooth operation.

Advanced Features: The support for advanced technologies such as AMD Infinity Cache and Smart Access Memory enhances the deep learning capabilities of the Radeon RX 6900 XT, improving memory performance and overall efficiency.

Competitive Performance: With its RDNA 2 architecture and 80 compute units, the Radeon RX 6900 XT delivers remarkable deep neural network training and inference performance, allowing data scientists to tackle complex AI workloads with precision and speed.

Cons:-

Availability Challenges: Due to high demand, the Radeon RX 6900 XT may face availability challenges, making it difficult to acquire. Potential buyers may need to monitor stock and be prepared for limited availability or price fluctuations.

Memory Limitation: While 16GB of memory is sufficient for most deep learning tasks, there may be scenarios where larger models or datasets require more memory. In such cases, users may need to consider GPUs with higher memory capacities.

Power Consumption and Heat Generation: The Radeon RX 6900 XT is a powerful GPU that can consume significant power and generate heat.

It requires a robust power supply and efficient cooling solution to maintain optimal performance and prevent overheating.

Despite these considerations, the AMD Radeon RX 6900 XT remains a strong choice for budget-conscious data scientists and AI enthusiasts.

Its affordability, ample memory capacity, advanced features, and competitive performance make it valuable for accelerating deep learning workloads without compromising performance or breaking the bank.

Choosing the Right GPU for Your Deep Learning Needs

Choosing the right GPU for your deep learning needs is a critical decision that can significantly impact the performance and success of your projects.

Deep learning tasks demand substantial computational power, and selecting an appropriate GPU is paramount to achieving optimal results.

When choosing the right GPU for your deep learning needs, several factors come into play.

First and foremost is compute capability, which refers to the GPU’s ability to handle complex mathematical calculations required by deep learning algorithms.

Higher compute capability translates to faster processing times and quicker model training.

Memory capacity is another crucial consideration. Deep learning models often deal with massive datasets, and having ample memory capacity ensures efficient data storage and retrieval during training and inference.

For advanced deep learning tasks, tensor cores are worth considering. These specialized units enable faster matrix operations, enhancing the overall performance and acceleration of deep learning workloads.

Budget is an important aspect to weigh, as deep learning GPUs vary widely in price. Finding a balance between cost and performance is essential to meet your specific requirements without breaking the bank.

Lastly, compatibility with deep learning frameworks and libraries is vital. Ensuring the GPU you choose is compatible with popular frameworks such as TensorFlow or PyTorch guarantees smooth integration and access to a wide range of community support.
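One quick way to confirm framework compatibility on a machine you already have is to ask the framework what it can see. The sketch below uses PyTorch if it is installed and degrades gracefully if it is not; the helper name `describe_accelerator` and the shape of the returned dictionary are illustrative choices, not part of any library.

```python
def describe_accelerator() -> dict:
    """Report whether PyTorch is installed and whether it can use a GPU."""
    info = {
        "torch_installed": False,
        "gpu_available": False,
        "device_name": None,
        "memory_gb": None,
    }
    try:
        import torch  # optional dependency; absence is handled below
    except ImportError:
        return info

    info["torch_installed"] = True
    if torch.cuda.is_available():
        props = torch.cuda.get_device_properties(0)  # first visible device
        info["gpu_available"] = True
        info["device_name"] = props.name
        info["memory_gb"] = round(props.total_memory / 1024 ** 3, 1)
    return info


print(describe_accelerator())
```

If `gpu_available` comes back as `False` on a machine with a supported card, the usual suspects are a missing driver or a CPU-only build of the framework.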

By choosing the right GPU for your deep learning needs, you can empower yourself with the necessary hardware to tackle complex AI tasks, accelerate training times, and achieve state-of-the-art performance in your deep learning projects.
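The selection factors above can be turned into a simple shortlist filter. The sketch below encodes the memory sizes and Tensor Core availability described in this guide; prices are omitted because the guide does not list them, and the AMD cards are marked as lacking Tensor Cores because that is an NVIDIA feature. The `Gpu` class and `shortlist` function are hypothetical helpers for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    memory_gb: int
    tensor_cores: bool


# Memory sizes as listed in this guide.
GPUS = [
    Gpu("NVIDIA RTX 3090", 24, True),
    Gpu("NVIDIA A100", 40, True),
    Gpu("NVIDIA RTX 3080", 10, True),
    Gpu("NVIDIA RTX 3070", 8, True),
    Gpu("AMD Radeon VII", 16, False),
    Gpu("NVIDIA RTX 3060 Ti", 8, True),
    Gpu("AMD Radeon RX 6900 XT", 16, False),
]


def shortlist(min_memory_gb: int, require_tensor_cores: bool = False) -> list[Gpu]:
    """Return cards meeting the requirements, largest memory first."""
    matches = [
        gpu for gpu in GPUS
        if gpu.memory_gb >= min_memory_gb
        and (gpu.tensor_cores or not require_tensor_cores)
    ]
    return sorted(matches, key=lambda gpu: (-gpu.memory_gb, gpu.name))


for gpu in shortlist(min_memory_gb=16):
    print(f"{gpu.name}: {gpu.memory_gb} GB, Tensor Cores: {gpu.tensor_cores}")
```

Adding a price field and a budget cap is a natural extension once you have current prices for your region.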

Tips for Optimizing Deep Learning Performance

In deep learning, achieving optimal performance is a constant pursuit. To optimize deep learning performance, several key strategies can significantly enhance the efficiency and effectiveness of your models.

Data preprocessing: Properly preprocess your data by normalizing, scaling, and handling missing values. This ensures that your model receives clean, standardized inputs, improving training and inference results.

Model architecture: Design a well-structured and optimized model architecture. Experiment with different layers, activation functions, and regularization techniques to find the optimal configuration for your specific task.

Batch normalization: Incorporate batch normalization techniques to stabilize and speed up training. This technique normalizes the activations of each mini-batch, reducing internal covariate shift and accelerating convergence.

Learning rate scheduling: Implement a learning rate schedule, such as reducing the learning rate over time, or use an optimizer with adaptive per-parameter learning rates such as Adam or RMSprop. Both approaches can help the model converge faster and achieve better performance.

Regularization: Use L1 or L2 regularization (penalties on the size of the weights) to prevent overfitting and improve generalization. Dropout and early stopping are other effective techniques to consider.

Hardware optimization: Leverage GPUs, and their Tensor Cores where available, to accelerate deep learning computations. GPUs are specifically designed to handle the intense matrix operations involved in deep learning, resulting in faster training times.

Parallel computing: Utilize parallel computing techniques, such as distributed training or model parallelism, to distribute the computational workload across multiple devices or machines, further speeding up training.
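Two of the tips above, data preprocessing and learning rate scheduling, are easy to sketch in plain Python. This is an illustrative example rather than a production recipe: the function names, the decay factor of 0.5, and the 10-epoch interval are all assumptions chosen for the demonstration.

```python
import math


def standardize(values):
    """Rescale a list of numbers to zero mean and unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(variance) or 1.0  # fall back to 1.0 for a constant feature
    return [(v - mean) / std for v in values]


def step_decay(base_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Learning rate at `epoch`: multiplied by `drop` every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)


# Preprocessing: one hypothetical feature column.
features = [2.0, 4.0, 6.0, 8.0]
scaled = standardize(features)
print([round(v, 3) for v in scaled])

# Scheduling: the learning rate halves at epochs 10 and 20.
schedule = [step_decay(0.1, epoch) for epoch in (0, 10, 20)]
print(schedule)
```

In practice, frameworks ship their own versions of both steps, but the arithmetic is the same.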

By following these tips for optimizing deep learning performance, you can unlock the full potential of your models, achieve faster convergence and better accuracy, and, ultimately, drive breakthroughs in artificial intelligence.

Deep Learning GPU Benchmarks

In the rapidly evolving field of deep learning, GPU benchmarks are essential tools for evaluating the performance of different GPUs when executing deep learning workloads.

These benchmarks provide valuable insights into the capabilities and efficiency of GPUs, helping researchers and developers make informed decisions.

Deep learning GPU benchmarks assess various aspects, including computational power, memory bandwidth, and latency.

They measure the speed and accuracy of GPUs in training and inference tasks, highlighting their strengths and weaknesses.

One of the most commonly used benchmarks is image classification on the ImageNet dataset, which evaluates how GPUs perform when training and running vision models.

Other benchmarks are built around specific models, such as ResNet for image classification and BERT for language tasks, providing standardized workloads for comparing GPU performance across different systems.

Benchmark results are typically reported in terms of throughput (measured in images or samples processed per second) and accuracy (measured as the model’s ability to classify or predict correctly).

These metrics enable users to gauge the trade-off between speed and precision when selecting a GPU for their deep learning needs.
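Throughput is simple to measure yourself. The sketch below times a stand-in workload and reports samples per second; `measure_throughput` and `dummy_batch` are hypothetical names, and a real benchmark would replace the dummy function with a model’s forward and backward pass.

```python
import time


def measure_throughput(process_batch, batch_size, num_batches=20, warmup=3):
    """Return samples processed per second by `process_batch`."""
    # Warm-up iterations are excluded so one-time setup costs do not skew the result.
    for _ in range(warmup):
        process_batch(batch_size)

    start = time.perf_counter()
    for _ in range(num_batches):
        process_batch(batch_size)
    elapsed = time.perf_counter() - start

    return (batch_size * num_batches) / elapsed


def dummy_batch(batch_size):
    """Stand-in workload; a real benchmark would run a training step here."""
    return sum(i * i for i in range(batch_size * 1000))


samples_per_second = measure_throughput(dummy_batch, batch_size=32)
print(f"{samples_per_second:.0f} samples/s")
```

One caveat when timing real GPU code: GPU work is launched asynchronously, so the device should be synchronized before reading the timer (in PyTorch, for example, with `torch.cuda.synchronize()`), or the measured time will be too short.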

By analyzing deep learning GPU benchmarks, researchers and developers can identify the GPUs that deliver the best performance for their specific workloads.

These benchmarks serve as a valuable resource for understanding the capabilities of GPUs, aiding in the development of efficient and powerful deep-learning models.

Here’s a table of key specifications for popular deep learning GPUs:

| GPU Model | Memory (GB) | Tensor Cores | CUDA Cores / Stream Processors | TFLOPS (FP32) | Memory Bandwidth (GB/s) | Price (USD) |
|---|---|---|---|---|---|---|
| NVIDIA RTX 2080 Ti | 11 | 544 | 4352 | 13.45 | 616 | $1,199 |
| NVIDIA RTX 3080 | 10 | 272 | 8704 | 29.77 | 760 | $699 |
| NVIDIA RTX 3090 | 24 | 328 | 10496 | 35.58 | 936 | $1,499 |
| NVIDIA A100 | 40 | 432 | 6912 | 19.5 | 1555 | $11,000 |
| AMD Radeon VII | 16 | N/A | 3840 | 13.8 | 1024 | $699 |
| AMD Radeon RX 6900 XT | 16 | N/A | 5120 | 23.04 | 512 | $999 |
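The TFLOPS and bandwidth columns in the table above are not independent measurements; they follow from simpler specifications. Peak FP32 throughput is roughly 2 × cores × boost clock (each core can issue one fused multiply-add, counted as two operations, per cycle), and memory bandwidth is the per-pin data rate times the bus width, divided by 8 to convert bits to bytes. The sketch below checks this for two rows. The boost clocks, data rates, and bus widths are not in the table; they are assumed from NVIDIA’s published reference specifications.

```python
def peak_fp32_tflops(cuda_cores, boost_clock_ghz):
    """Theoretical peak FP32 TFLOPS: 2 operations (one FMA) per core per cycle."""
    return 2 * cuda_cores * boost_clock_ghz / 1000


def memory_bandwidth_gbs(data_rate_gbps, bus_width_bits):
    """Theoretical memory bandwidth in GB/s from per-pin data rate and bus width."""
    return data_rate_gbps * bus_width_bits / 8


# Assumed reference specs: (CUDA cores, boost GHz, memory Gbps per pin, bus width in bits)
reference_specs = {
    "NVIDIA RTX 3090": (10496, 1.695, 19.5, 384),
    "NVIDIA RTX 3080": (8704, 1.71, 19.0, 320),
}

for name, (cores, clock, rate, bus) in reference_specs.items():
    tflops = peak_fp32_tflops(cores, clock)
    bandwidth = memory_bandwidth_gbs(rate, bus)
    print(f"{name}: {tflops:.2f} TFLOPS, {bandwidth:.0f} GB/s")
```

These are theoretical peaks. Real deep learning workloads rarely sustain them, which is why measured benchmarks matter more than spec-sheet numbers.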

Is it worth buying a GPU for deep learning?

Deep learning has revolutionized the field of artificial intelligence, enabling remarkable advancements in various domains, including computer vision, natural language processing, and data analytics.

The fundamental question arises: Is it worth buying a GPU for deep learning? The answer is a resounding yes.

Deep learning, a subfield of machine learning, relies heavily on complex computations involving matrix operations and neural network architectures.

These computations are expensive and require substantial processing power. Here, the Graphics Processing Unit (GPU) emerges as a game-changer.

GPUs are engineered to handle parallel computations efficiently, making them exceptionally well-suited for deep learning tasks.

GPUs can perform simultaneous calculations on multiple data points by leveraging massively parallel processing and hardware acceleration, significantly accelerating training and inference times.

The benefits of using GPUs for deep learning extend beyond mere speed. GPUs offer remarkable memory bandwidth and floating-point precision, essential for handling the vast amounts of data and complex calculations involved in deep learning models.

Furthermore, modern GPUs come equipped with specialized hardware such as Tensor Cores (NVIDIA) or Matrix Cores (AMD), designed to further boost deep learning performance.

Investing in a GPU for deep learning yields tremendous advantages. It empowers researchers, data scientists, and developers to train more complex and accurate models in less time, leading to faster iteration cycles and accelerated breakthroughs.

Moreover, GPUs provide the flexibility to experiment with large-scale datasets and state-of-the-art architectures, enabling deeper insights and pushing the boundaries of what is possible.

In conclusion, investing in a GPU for deep learning is undoubtedly worthwhile.

The unmatched computational power, parallel processing capabilities, and specialized hardware optimizations make GPUs an indispensable tool for driving innovation and pushing the frontiers of deep learning research and application.

Is RTX 3090 Ti good for deep learning?

When it comes to deep learning, having a powerful and efficient graphics card is crucial for achieving optimal performance.

One such high-end GPU that has garnered considerable attention is the RTX 3090 Ti. But the question remains: Is RTX 3090 Ti good for deep learning?

Let’s delve into its capabilities.

The RTX 3090 Ti is part of NVIDIA’s flagship RTX 30 series lineup, renowned for its cutting-edge features and exceptional performance.

With an impressive 24 GB of memory, this GPU is capable of handling large and complex deep-learning models and datasets with ease.

The Tensor Cores integrated into the GPU provide hardware acceleration specifically designed for machine learning workloads, resulting in faster training times.

The RTX 3090 Ti boasts a whopping 10,752 CUDA cores, ensuring rapid parallel processing and enabling swift execution of matrix operations that are fundamental to deep learning algorithms.

With roughly 40 TFLOPS of computing power in single-precision (FP32) calculations, this GPU delivers extraordinary performance that can significantly reduce training time for complex models.

Moreover, the RTX 3090 Ti offers ample memory bandwidth of 1,008 GB/s, enabling seamless data transfer and minimizing bottlenecks during intensive computations.

This, combined with the high memory capacity, makes it ideal for handling large-scale datasets and memory-intensive deep learning tasks.
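Whether a model actually fits in 24 GB can be estimated before buying. The sketch below uses a common rule of thumb, stated here as an assumption: FP32 training with the Adam optimizer needs about 16 bytes per parameter (4 for the weight, 4 for its gradient, 8 for Adam’s two moment estimates), before counting activations. The function names and the 80% headroom figure are our own illustrative choices.

```python
# Assumed rule of thumb: weights + gradients + Adam's two moment buffers, all FP32.
BYTES_PER_PARAM_FP32_ADAM = 4 + 4 + 8


def training_memory_gib(num_params, bytes_per_param=BYTES_PER_PARAM_FP32_ADAM):
    """Estimated memory for weights, gradients, and optimizer state, in GiB."""
    return num_params * bytes_per_param / 1024**3


def fits_in_vram(num_params, vram_gib=24, headroom=0.8):
    """True if the estimate stays under `headroom` of VRAM, leaving the rest for activations."""
    return training_memory_gib(num_params) <= vram_gib * headroom


for params in (350_000_000, 1_000_000_000, 2_000_000_000):
    estimate = training_memory_gib(params)
    print(f"{params:>13,} params: ~{estimate:.1f} GiB, fits in 24 GB: {fits_in_vram(params)}")
```

By this estimate, a model with about a billion parameters is near the practical ceiling for full FP32 training on a 24 GB card. Mixed precision, smaller batch sizes, or gradient checkpointing can stretch that limit.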

Considering its substantial computational power, advanced features, and robust memory configuration, the RTX 3090 Ti is an excellent choice for deep learning practitioners, researchers, and enthusiasts.

Its ability to accelerate training times and easily handle complex models makes it a valuable asset in pushing the boundaries of deep learning innovation.

Is RTX 4090 good for deep learning?

With its voracious appetite for computational power, deep learning demands cutting-edge hardware to tackle complex models and massive datasets.

As the technology evolves, so does the quest for more powerful GPUs. The question arises: Is RTX 4090 good for deep learning?

Let’s explore what this GPU offers.

The RTX 4090 is NVIDIA’s flagship consumer GPU in the RTX 40 series, launched in October 2022 as the successor to the RTX 30 series flagships.

It is built on the Ada Lovelace architecture and designed to address the growing demands of compute-heavy workloads, deep learning included.

It features 512 fourth-generation Tensor Cores, which add FP8 support, and delivers significant performance gains over the RTX 3090 in deep learning tasks.

With 16,384 CUDA cores and roughly 82.6 TFLOPS of FP32 compute, it offers strong parallel processing capabilities, facilitating faster training and inference times.

The RTX 4090 also has 24 GB of GDDR6X memory and 1,008 GB/s of memory bandwidth, crucial for handling large-scale datasets and memory-intensive deep learning operations.

One limitation is that, unlike the RTX 3090, it does not support NVLink, so multi-GPU setups must communicate over PCIe.

At a launch price of $1,599, the RTX 4090 is a formidable asset for deep learning practitioners, researchers, and organizations seeking to push the boundaries of AI innovation.

What is the minimum GPU for deep learning?

With its immense computational requirements, deep learning necessitates a powerful GPU to train and deploy complex neural networks effectively.

However, for those on a budget or just starting their deep learning journey, it’s natural to wonder: What is the minimum GPU for deep learning?

Let’s explore the options.

While deep learning performance heavily relies on GPU capabilities, several budget-friendly options can still deliver satisfactory results.

NVIDIA’s GTX 1660 Ti and RTX 2060 are often considered entry-level GPUs for deep learning tasks. These GPUs balance performance and affordability, though only the RTX 2060 includes dedicated Tensor Cores for matrix operations.

The GTX 1660 Ti provides a decent CUDA core count and 6 GB of memory, making it suitable for small to medium-sized deep learning projects.

On the other hand, the RTX 2060 adds Tensor Cores, which can speed up mixed-precision training. Its real-time ray tracing hardware is a graphics feature and does not benefit deep learning workflows.

While these GPUs may not match their high-end counterparts’ sheer power and memory capacity, they can still handle basic deep-learning tasks, including image classification and natural language processing.

However, it’s important to consider the specific requirements of your deep learning projects and the potential limitations imposed by these entry-level GPUs.

In conclusion, while the minimum GPU for deep learning depends on project requirements, the GTX 1660 Ti and RTX 2060 are viable options for beginners or those on a budget.

They provide an entry point into the world of deep learning, allowing individuals to gain practical experience and explore the potential of neural networks without breaking the bank.

Tensor Cores GPU List

Here is a table listing GPUs with Tensor Cores:

| GPU Model | Tensor Cores | Memory (GB) | CUDA Cores | TFLOPS (FP32) | Price (USD) |
|---|---|---|---|---|---|
| NVIDIA A100 | 432 | 40 | 6912 | 19.5 | $11,000 |
| NVIDIA RTX 3090 | 328 | 24 | 10496 | 35.58 | $1,499 |
| NVIDIA RTX 3080 | 272 | 10 | 8704 | 29.77 | $699 |
| NVIDIA RTX 3070 | 184 | 8 | 5888 | 20.37 | $499 |
| NVIDIA RTX 3060 Ti | 152 | 8 | 4864 | 16.17 | $399 |
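One rough way to compare value across the table above is price per FP32 TFLOP. The sketch below ranks the listed GPUs on that single metric, using the TFLOPS and price figures exactly as they appear in the table; the metric itself is our own illustrative choice, not an industry standard.

```python
# (FP32 TFLOPS, price in USD), copied from the table above
gpus = {
    "NVIDIA A100": (19.5, 11000),
    "NVIDIA RTX 3090": (35.58, 1499),
    "NVIDIA RTX 3080": (29.77, 699),
    "NVIDIA RTX 3070": (20.37, 499),
    "NVIDIA RTX 3060 Ti": (16.17, 399),
}


def usd_per_tflop(tflops, price_usd):
    """Dollars paid per FP32 TFLOP; lower is better."""
    return price_usd / tflops


# Sort from best value (lowest cost per TFLOP) to worst.
ranked = sorted(gpus, key=lambda name: usd_per_tflop(*gpus[name]))

for name in ranked:
    print(f"{name}: ${usd_per_tflop(*gpus[name]):.2f} per TFLOP")
```

This metric should be read with care. It ignores memory capacity, memory bandwidth, and Tensor Core throughput, which is why a data center card like the A100 looks poor here despite being far more capable for large-scale training.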

📗FAQs

What is the best GPU for deep learning?

When it comes to deep learning, selecting the best GPU for deep learning is crucial for achieving optimal performance and productivity.

With the ever-evolving landscape of AI, it’s essential to consider various factors to determine the ideal GPU for your specific needs.

One of the leading contenders in deep learning GPUs is the NVIDIA RTX 3090. Renowned for its exceptional computing performance, powered by Tensor Cores and the Ampere architecture, the RTX 3090 is a formidable choice.

Its ample 24 GB of memory is the main draw for deep learning. Gaming-oriented features like real-time ray tracing and DLSS (Deep Learning Super Sampling) add versatility but do not affect training performance.

Another strong contender is the NVIDIA A100. Equipped with the revolutionary Ampere architecture and boasting a massive memory size and Tensor Cores, the A100 delivers exceptional computing power and efficiency, making it a powerhouse for deep learning workloads.

However, the “best” GPU for deep learning depends on your specific requirements and budget. GPUs like the NVIDIA RTX 3080 and the AMD Radeon VII also offer impressive performance and memory capacities, catering to various budgets and needs.

When determining the best GPU for deep learning, it’s essential to consider factors such as compute performance, memory size, specialized hardware, and budget constraints.

Evaluating these aspects will enable you to make an informed decision and select the GPU that best aligns with your deep learning objectives.

Here’s a table showcasing some of the best GPUs for deep learning based on user ratings.

| GPU Model | User Rating (out of 5) | Price Range (USD) | Memory Size (GB) | Tensor Cores | Compute Performance (FP32 TFLOPS) |
|---|---|---|---|---|---|
| NVIDIA RTX 3090 | 4.8 | $1,499 – $1,699 | 24 | Yes | 35.6 |
| NVIDIA A100 | 4.7 | $9,999 – $11,999 | 40 | Yes | 19.5 |
| NVIDIA RTX 3080 | 4.6 | $699 – $799 | 10 | Yes | 29.8 |
| NVIDIA RTX 3070 | 4.5 | $499 – $599 | 8 | Yes | 20.4 |
| AMD Radeon VII | 4.4 | $699 – $799 | 16 | No | 13.8 |
| NVIDIA RTX 3060 Ti | 4.3 | $399 – $449 | 8 | Yes | 16.2 |
| AMD Radeon RX 6900 XT | 4.2 | $999 – $1,099 | 16 | No | 23.0 |
| NVIDIA RTX 2080 Ti | 4.1 | $1,199 – $1,299 | 11 | Yes | 13.4 |

What GPU is good for deep learning?

The GTX 1660 Super is a good entry-level GPU for deep learning. Because it is a beginner-level graphics card, its performance cannot be compared to that of more expensive models.

How much GPU is good for deep learning?

One of the premier choices is the NVIDIA RTX A6000. It has over 10,000 CUDA cores and 48 GB of VRAM, and it is a highly rated GPU for deep learning.

Is RTX 3080 enough for deep learning?

The RTX 3080 has 10 GB of GDDR6X memory and a boost clock of about 1,710 MHz. It is built on the Ampere-generation GA102 chip, not the TU102 of the prior generation, and has 8,704 CUDA cores. That makes it a strong option for deep learning, although its 10 GB of memory can limit larger models.

Does GPU matter for deep learning?

For simple deep learning computations, the choice between CPU and GPU matters little, and a CPU works well for beginner-level projects. For larger models and datasets, a GPU makes a substantial difference in training time.

Which GPU is best for data science?

Top GPUs for data science and deep learning include the NVIDIA GeForce GTX 1080, NVIDIA Tesla K80, NVIDIA GeForce RTX 3060, NVIDIA GeForce RTX 2080, NVIDIA Tesla V100, and NVIDIA Titan RTX.

How much RAM do I need for deep learning?

Between 8 GB and 16 GB of RAM is suggested for deep learning, with 16 GB preferable. An SSD of 256 GB to 512 GB is suitable for installing the OS and saving projects.

How many cores do I need for deep learning?

For deep learning, the number of CPU cores matters more than the speed of each core. You can opt for 4 CPU cores if you are on a budget. However, a six-core Intel Core i7 is recommended for longer-term use.

Is 3090 good for deep learning?

The NVIDIA RTX 3090 is a common reference point for deep learning performance. It outperforms the Titan RTX for deep learning.

Is 64 GB enough for deep learning performance?

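System RAM does not directly speed up deep learning, but too little of it can hinder the execution of GPU code. You need enough RAM to work with the GPU comfortably, so aim for at least as much system RAM as your GPU has memory. By that measure, 64 GB is enough for most single-GPU setups.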

Is RTX good for deep learning?

NVIDIA’s RTX 3090 is a top GPU option. Its Tensor Cores accelerate deep learning and AI workloads, and its 24 GB of memory accommodates many of the latest neural networks.

Conclusion

The difference between success and frustration in deep learning often comes down to the capabilities of your GPU. It’s a complex choice, with a multitude of options available, each boasting its unique specifications and features. But armed with the information and insights from this guide, you’re now equipped to navigate this technological jungle.

The best GPU for deep learning is the one that meets your specific needs and aligns with your budget. Remember, it’s about more than just speed. It’s about the overall performance, compatibility with your systems, energy efficiency, and the future-proofing potential of the GPU.

No matter where you are on your deep learning journey, a well-chosen GPU can make the difference, turning bottlenecks into breakthroughs and dreams into discoveries. Make your choice wisely, and then watch as your deep learning projects reach new heights of success.