In the rapidly evolving landscape of artificial intelligence (AI) and deep learning, researchers and developers often face the challenge of efficiently utilizing GPU resources.

Traditional software deployment methods often lack proper hardware-software integration. Frameworks, CUDA libraries, and drivers are installed by hand on each machine and can drift out of sync, which wastes resources and hurts performance.

The constraints of conventional software deployment methods pose a significant roadblock to unlocking the full potential of your hardware, slowing down the research and development pace and, in turn, the rollout of cutting-edge AI solutions.

It’s not just about underutilized resources; it’s about limiting your ability to innovate and stay ahead in the competitive AI and deep learning landscape.

Enter NVIDIA Containers. These GPU-optimized containers address the problem by providing a robust, flexible, and efficient platform for deploying GPU-accelerated applications in AI and deep learning. Each image bundles a tested combination of frameworks and GPU libraries, so NVIDIA Containers help you achieve reliable hardware-software integration and get more out of your GPU resources.

This article takes a deep dive into NVIDIA Containers. It explores their architecture, benefits, and real-world applications, and how they are changing AI and deep learning.

What are NVIDIA Containers?

NVIDIA Containers have emerged as a groundbreaking solution in modern computing. NVIDIA, a pioneer in graphics processing units (GPUs), has developed containers tailored to harness the power of its GPUs efficiently and seamlessly.

In essence, NVIDIA Containers are self-contained units that include everything needed to run applications on NVIDIA GPUs. Unlike virtual machines, they do not carry their own operating system kernel. They share the host's kernel, which makes them much lighter-weight.

These containers let developers package their software together with all its dependencies and libraries, ensuring portability across diverse computing environments. The key is isolation: the application's user-space environment is separated from the underlying infrastructure, so it behaves consistently regardless of the host system. The main host-side requirement is a compatible NVIDIA driver, which stays on the host rather than in the container.

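Docker, the most widely used containerization platform, plays a pivotal role in enabling NVIDIA Containers. With the NVIDIA Container Toolkit (the successor to the original nvidia-docker project) installed, developers can deploy GPU-accelerated applications on any machine with a supported NVIDIA GPU and driver. This reduces the friction caused by hardware differences.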

The NVIDIA GPU Cloud (NGC) repository further enriches the ecosystem by providing a treasure trove of GPU-optimized containers for myriad applications, such as AI, deep learning, data analytics, and scientific simulations.

In conclusion, NVIDIA Containers represent a paradigm shift in modern computing, empowering developers to harness the raw potential of NVIDIA GPUs efficiently and easily.

As the computing landscape continues to evolve, embracing these cutting-edge containers is pivotal for those seeking to unlock unparalleled performance and efficiency in their GPU-driven applications.

The Architecture of NVIDIA Containers

The world of modern computing is constantly evolving, and NVIDIA Container technology stands at the forefront of innovation, revolutionizing how developers harness the power of NVIDIA GPUs.

Let’s explore this cutting-edge technology in-depth and understand its underlying architecture, benefits, and applications.

At its essence, NVIDIA Container technology is a powerful solution that enables developers to package their applications, along with all dependencies and libraries, into self-contained units called containers.

These containers offer a consistent environment for application execution regardless of the host system's configuration. This works because each container carries its own libraries and is isolated from the software installed on the host.

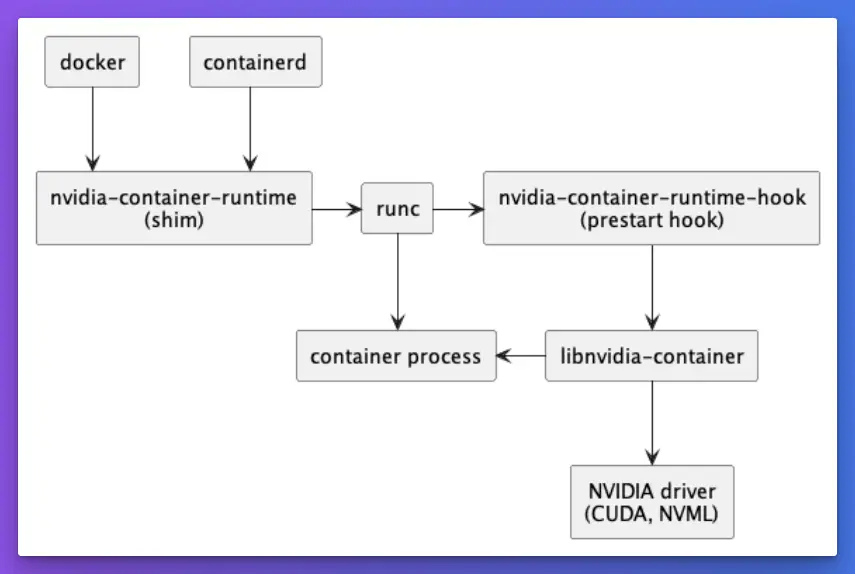

NVIDIA Containers build on the Docker platform and the NVIDIA Container Toolkit (formerly nvidia-docker), which together integrate GPU acceleration into the container ecosystem.

The architecture of NVIDIA Containers comprises three fundamental layers:

Base Image Layer: This layer forms the foundation and contains the base operating system and essential libraries required to run the application.

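NVIDIA CUDA Runtime Layer: Above the base image sit the CUDA runtime and GPU-accelerated libraries, such as cuDNN or NCCL depending on the image. The application uses these to run code on the GPU. The GPU driver itself is not part of the image; the NVIDIA Container Toolkit makes the host's driver available to the container at run time.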

Application Layer: The topmost layer encapsulates the application and its specific dependencies, ensuring a complete and self-sufficient environment.
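In practice, the CUDA runtime ships inside the image, while the GPU driver stays on the host and is made available to the container when it starts. You can check this split from inside a running container. The sketch below is an illustration, not an NVIDIA tool, and the helper name is hypothetical. It assumes an Ubuntu-based x86_64 image, where CUDA images typically install the runtime (libcudart) under /usr/local/cuda/lib64. It also assumes the NVIDIA Container Toolkit mounts the host's driver library (libcuda.so.1) into /usr/lib/x86_64-linux-gnu. Both paths are assumptions; adjust them for other distributions.

```python
import glob
import os

# Assumed library locations for an Ubuntu x86_64 CUDA image; adjust for other distros.
DEFAULT_SEARCH_DIRS = (
    "/usr/local/cuda/lib64",       # CUDA runtime shipped inside the image
    "/usr/lib/x86_64-linux-gnu",   # host driver libraries mounted in at run time
)


def find_cuda_layers(search_dirs=DEFAULT_SEARCH_DIRS):
    """List the CUDA runtime and driver libraries visible in this container."""
    found = {"cuda_runtime_in_image": [], "driver_from_host": []}
    for directory in search_dirs:
        # libcudart.so* belongs to the CUDA runtime layer of the image.
        found["cuda_runtime_in_image"] += glob.glob(os.path.join(directory, "libcudart.so*"))
        # libcuda.so* is the user-space driver library provided by the host.
        found["driver_from_host"] += glob.glob(os.path.join(directory, "libcuda.so*"))
    return {layer: sorted(paths) for layer, paths in found.items()}


if __name__ == "__main__":
    for layer, paths in find_cuda_layers().items():
        print(f"{layer}: {', '.join(paths) if paths else 'not found'}")
```

An empty driver list usually means the container was started without GPU access.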

NVIDIA Container technology is a game-changer in modern computing, empowering developers to unleash the true potential of NVIDIA GPUs.

With its efficient architecture, seamless integration with Docker, and remarkable portability and performance optimization advantages, NVIDIA Containers are shaping the future of GPU-accelerated applications across diverse industries.

As technology advances, NVIDIA Container technology’s impact and applications are poised to grow, driving innovation and efficiency in the computing landscape.

The Role of NVIDIA Containers in AI and Deep Learning

AI and deep learning algorithms are computationally intensive and require immense parallel processing capabilities. Traditional CPUs fall short of handling such workloads efficiently. This is where GPUs excel, providing a massive boost in performance through parallelism.

NVIDIA’s specialized GPUs, such as those in the NVIDIA A100 series, are specifically designed for AI workloads, boasting thousands of cores to accelerate training and inference tasks.

How NVIDIA Containers Help

NVIDIA Containers play a crucial role in simplifying AI and deep learning workflows:

Consistent Development Environment: With containers, developers can create consistent and reproducible development environments. This eliminates many system compatibility issues and helps ensure the code behaves the same way from development to production.

Streamlined Deployment: NVIDIA Containers offer a unified deployment experience, enabling seamless transfer of applications between platforms, including cloud and on-premises servers. This portability is particularly valuable when collaborating on AI projects or scaling across multiple servers.

Performance Optimization: The NVIDIA Container Toolkit gives containers direct access to the GPU. NGC images also ship framework builds and GPU libraries that NVIDIA has tuned and tested together. This translates into faster training times and more efficient inference in production environments.

Facilitating Collaboration: NVIDIA Containers foster collaboration by allowing data scientists and researchers to package and share their models with colleagues. This sharing of containerized models creates a robust ecosystem for knowledge exchange and community-driven innovation.

Applications of NVIDIA Containers in AI and Deep Learning

The applications of NVIDIA Containers in AI and deep learning are far-reaching:

Natural Language Processing (NLP): NLP tasks, such as language translation, sentiment analysis, and text generation, heavily rely on GPUs for training complex models. NVIDIA Containers streamline the deployment of NLP models, enabling rapid experimentation and research.

Computer Vision: In computer vision tasks, like image recognition and object detection, GPUs play a pivotal role in training deep convolutional neural networks. NVIDIA Containers expedite the deployment of computer vision models, making them easily accessible for real-world applications.

Reinforcement Learning: Reinforcement learning, a key area in AI, requires substantial computational resources during training. NVIDIA Containers let researchers harness the capabilities of GPUs, shortening the time reinforcement learning algorithms take to train.

Healthcare and Life Sciences: In fields like medical imaging and drug discovery, where large datasets and complex models are common, NVIDIA Containers enhance the scalability and performance of AI solutions, aiding in disease diagnosis and drug development.

In conclusion, NVIDIA Containers have emerged as a game-changer in AI and deep learning, providing a powerful solution for harnessing the capabilities of NVIDIA GPUs efficiently and effectively.

These containers have accelerated the pace of AI research and application deployment by offering consistent development environments, streamlined deployment, and performance optimization.

As AI continues to advance and shape various industries, NVIDIA Containers will remain a cornerstone technology, enabling researchers and data scientists to push the boundaries of what’s possible in AI and deep learning.

Real-world Applications of NVIDIA Containers

NVIDIA Containers have transcended the realm of innovation and theory, significantly impacting real-world applications across diverse industries.

Leveraging the power of NVIDIA GPUs and the versatility of containerization, these technological marvels have transformed how various domains approach complex computations and resource-intensive tasks.

Let’s delve into some compelling real-world applications of NVIDIA Containers.

1. Healthcare and Medical Imaging

In healthcare and medical imaging, NVIDIA Containers have emerged as a game-changer. From MRI and CT scan analysis to disease diagnosis and tumor detection, processing vast medical datasets demands substantial computational power.

Combined with containerized applications, NVIDIA GPUs expedite image analysis and help medical professionals arrive at accurate diagnoses more quickly. This can contribute to better patient care and outcomes.

2. Autonomous Vehicles

The burgeoning field of autonomous vehicles relies heavily on AI and deep learning algorithms. NVIDIA Containers offer a robust solution for developing and deploying sophisticated AI models that power the decision-making capabilities of self-driving cars.

Containers make it easier to train, test, and update these models reproducibly. This supports the real-time data processing autonomous vehicles need to navigate complex urban environments and highways safely and efficiently.

3. Finance and Risk Assessment

In the financial industry, accurate risk assessment and predictive modeling are paramount. NVIDIA Containers accelerate complex computations involved in risk analysis, portfolio optimization, and algorithmic trading.

By harnessing the computational prowess of GPUs, financial institutions gain a competitive edge with faster and more accurate predictions, enhancing their decision-making processes.

4. Natural Language Processing (NLP)

NLP has become a cornerstone of modern AI applications, including language translation, sentiment analysis, and chatbots. NVIDIA Containers expedite developing and deploying NLP models, enabling businesses to extract valuable insights from vast amounts of text data swiftly. This has applications in customer service, market research, and content analysis.

5. High-Performance Computing (HPC)

The scientific community benefits immensely from the application of NVIDIA Containers in HPC. Complex simulations, climate modeling, and molecular dynamics simulations demand high computational capabilities.

NVIDIA GPUs and containerized applications allow researchers to tackle grand challenges and accelerate scientific discoveries in physics, chemistry, and engineering.

6. Data Science and Machine Learning

Data science and machine learning applications often process massive datasets and train intricate models. NVIDIA Containers facilitate seamless scaling across distributed systems, enabling data scientists to expedite model training and inference, leading to more robust AI solutions for businesses.

7. Video Analytics and Surveillance

In video analytics and surveillance, real-time processing of video feeds is essential for detecting anomalies and ensuring public safety. NVIDIA Containers equipped with GPU-accelerated algorithms enable rapid video data analysis, making it possible to identify potential threats and enhance security measures.

The real-world applications of NVIDIA Containers span a broad spectrum of industries, revolutionizing how businesses and researchers approach complex tasks.

From healthcare to finance, from autonomous vehicles to scientific simulations, NVIDIA Containers have unleashed the true potential of NVIDIA GPUs, transforming industries and driving innovation.

As technology advances, the role of NVIDIA Containers is only set to grow, shaping the future of AI-driven applications and pushing the boundaries of what’s possible in computing.

Comparison of NVIDIA Containers with other container solutions in the market

Let’s comprehensively compare NVIDIA Containers with other container solutions available in the market.

Comparing with Docker Containers

Docker Containers are the foundation of modern containerization. Docker offers excellent portability and ease of use, but it cannot use NVIDIA GPUs on its own. Its --gpus flag (available since Docker 19.03) relies on the NVIDIA Container Toolkit being installed on the host.

NVIDIA Containers address this limitation by integrating with Docker through the NVIDIA Container Toolkit, unlocking the full potential of NVIDIA GPUs for GPU-accelerated applications.

Comparing with Singularity Containers

Singularity (whose open-source successor is Apptainer) is renowned for its focus on high-performance computing and scientific simulations. Unlike Docker, Singularity is designed for secure execution in shared, multi-user environments.

Singularity excels in scientific research and can also run NVIDIA's GPU-optimized images, using its --nv flag to expose the host's GPUs. In that setup, the performance optimization comes from the NVIDIA images themselves rather than from the container engine.

Comparing with Kubernetes

Kubernetes is a popular container orchestration platform for managing containerized applications at scale. It excels at orchestrating container workloads across clusters, but it needs extra components to use GPUs. The NVIDIA device plugin advertises GPUs to the scheduler as the nvidia.com/gpu resource. The NVIDIA GPU Operator can install that plugin along with the driver and container toolkit. Once these are in place, NVIDIA Containers run on Kubernetes like any other image, simplifying the deployment of GPU-accelerated workloads.

Comparing with OpenShift

OpenShift, a Kubernetes-based platform, offers additional enterprise features such as security, monitoring, and automation. Like Kubernetes, it requires extra configuration for GPU support, typically provided by the NVIDIA GPU Operator. With that in place, NVIDIA Containers complement OpenShift and use GPUs efficiently without complex per-node setup.

Comparing with Podman

Podman is an open-source container management tool that runs containers without a daemon. It offers improved security and a Docker-compatible command line. Like Docker, it relies on the NVIDIA Container Toolkit to access NVIDIA GPUs, typically through the Container Device Interface (CDI), for example podman run --device nvidia.com/gpu=all. NVIDIA's prebuilt images then run under Podman just as they do under Docker.

Comparing with LXC (Linux Containers)

LXC is a lightweight, operating-system-level virtualization solution. It offers low overhead and fast startup times, but giving LXC containers GPU access typically requires manual device passthrough configuration. NVIDIA Containers stand out with built-in GPU acceleration, letting developers use the raw power of NVIDIA GPUs with far less setup.

In conclusion, the comparison shows that NVIDIA Containers bring a distinct value proposition. Docker, Singularity, Kubernetes, OpenShift, Podman, and LXC each excel at various aspects of containerization. NVIDIA Containers stand out through built-in GPU support and images tuned for GPU-accelerated applications.

By integrating with popular container technologies and providing GPU-optimized software, NVIDIA Containers have become a go-to solution for industries and researchers seeking to harness the full potential of NVIDIA GPUs efficiently and effectively.

As the demand for GPU-accelerated applications continues to grow, NVIDIA Containers are poised to play an increasingly vital role in the future of containerization and high-performance computing.

Steps to Set Up NVIDIA Containers

In modern computing, harnessing the immense power of NVIDIA GPUs through NVIDIA Containers has become essential for high-performance applications.

Setting up NVIDIA Containers is a streamlined process. It lets developers package their applications together with GPU-optimized libraries for seamless deployment and execution.

Let’s explore the steps to set up NVIDIA Containers and unlock the full potential of NVIDIA GPUs.

Step 1: Verify GPU Compatibility

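Before setting up NVIDIA Containers, ensure your system meets the GPU compatibility requirements. NVIDIA Containers require a system with an NVIDIA GPU and a supported driver version. Visit the NVIDIA website to verify compatibility and install the appropriate GPU driver. The driver is installed on the host, not inside the container. Running nvidia-smi on the host confirms that it is working and shows its version.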
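One way to script this check is to ask nvidia-smi for the driver version and compare it with the minimum your CUDA version needs. The sketch below assumes nvidia-smi is on the PATH, and the function names are hypothetical. The minimum version in the usage example is only a placeholder; look up the real value in NVIDIA's CUDA compatibility documentation.

```python
import subprocess


def parse_driver_versions(csv_text):
    """Parse `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader` output."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so the GPU name is kept intact.
        name, version = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, version))
    return gpus


def version_tuple(version):
    """Turn '535.104.05' into (535, 104, 5) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def driver_at_least(installed, minimum):
    return version_tuple(installed) >= version_tuple(minimum)


def query_host_gpus():
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_driver_versions(out)


if __name__ == "__main__":
    REQUIRED_DRIVER = "525.60.13"  # placeholder: check NVIDIA's docs for your CUDA version
    for name, version in query_host_gpus():
        status = "OK" if driver_at_least(version, REQUIRED_DRIVER) else "too old"
        print(f"{name}: driver {version} ({status})")
```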

Step 2: Install Docker

To start with NVIDIA Containers, you must install Docker on your system. Docker provides the foundation for containerization, and NVIDIA Containers integrate seamlessly with Docker to optimize GPU resources. Install Docker by following the official Docker installation guides for your operating system.

Step 3: Install the NVIDIA Container Toolkit

The next crucial step is to install the NVIDIA Container Toolkit, which replaced the older nvidia-docker wrapper. The toolkit acts as a bridge between Docker and the NVIDIA driver, allowing Docker containers to access GPU resources.

The installation process varies by operating system and package manager, so refer to the official NVIDIA Container Toolkit installation guide for step-by-step instructions. The guide also covers registering the toolkit with Docker (sudo nvidia-ctk runtime configure --runtime=docker) and restarting the Docker daemon.
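Once the NVIDIA Container Toolkit has been registered with Docker (for example with sudo nvidia-ctk runtime configure --runtime=docker), you can confirm that the change took effect. This sketch uses hypothetical helper names. It reads the runtimes Docker reports through docker info --format '{{json .Runtimes}}' and looks for an entry named nvidia, the name the configure step uses by default. It assumes the Docker CLI is installed and can reach the daemon.

```python
import json
import subprocess


def parse_runtimes(json_text):
    """Return the sorted runtime names from `docker info --format '{{json .Runtimes}}'`."""
    return sorted(json.loads(json_text).keys())


def nvidia_runtime_registered(runtime_names):
    return "nvidia" in runtime_names


def docker_runtimes():
    out = subprocess.run(
        ["docker", "info", "--format", "{{json .Runtimes}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_runtimes(out)


if __name__ == "__main__":
    names = docker_runtimes()
    print("Docker runtimes:", ", ".join(names))
    if not nvidia_runtime_registered(names):
        print("No 'nvidia' runtime found; re-run the toolkit's Docker configuration step.")
```

The --gpus flag can work without this entry on some setups, so treat a missing entry as a hint rather than a definitive failure.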

Step 4: Pull NVIDIA GPU-Accelerated Images

Once you have Docker and the NVIDIA Container Toolkit set up, you can access the wide range of GPU-accelerated containers in the NVIDIA GPU Cloud (NGC) catalog.

NGC offers a rich collection of pre-built containers optimized for AI, deep learning, and scientific applications. Pull the images you need from the nvcr.io registry with docker pull, using the image names and tags listed on each container's NGC page.
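Pulling from NGC is an ordinary docker pull against the nvcr.io registry. The small wrapper below only assembles the image reference and calls the Docker CLI. The helper names are hypothetical, and TAG is a placeholder for a tag copied from the image's NGC page. Some NGC content may require docker login nvcr.io first.

```python
import subprocess

NGC_REGISTRY = "nvcr.io/nvidia"


def ngc_image(name, tag):
    """Build a reference such as nvcr.io/nvidia/<name>:<tag>."""
    if not name or not tag:
        raise ValueError("an image name and a tag are both required")
    return f"{NGC_REGISTRY}/{name}:{tag}"


def pull(image_ref):
    """Pull an image with the Docker CLI; raises CalledProcessError on failure."""
    subprocess.run(["docker", "pull", image_ref], check=True)


if __name__ == "__main__":
    TAG = "REPLACE_WITH_TAG_FROM_NGC"  # placeholder, not a real tag
    pull(ngc_image("pytorch", TAG))
```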

Step 5: Create Custom Dockerfiles (Optional)

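If you have specific requirements or need to build custom applications, you can write your own Dockerfiles. Dockerfiles are text files that contain instructions for building Docker images. No special NVIDIA commands are needed in the Dockerfile itself. Start FROM a CUDA-enabled base image, such as an NGC framework image or one of NVIDIA's CUDA images, then add your application. GPU access is granted when the container is started, not when the image is built.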
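To make this concrete, the sketch below generates a minimal Dockerfile that mirrors the three layers described earlier. It is illustrative only: render_dockerfile is a hypothetical helper and the base image is a placeholder. It assumes the base image already includes Python and pip; NGC framework images do, but plain CUDA images may need them installed first. The two ENV lines are optional, since NVIDIA's CUDA images typically set them already.

```python
import json


def render_dockerfile(base_image, requirements_file="requirements.txt",
                      entrypoint=("python", "main.py")):
    """Return Dockerfile text: CUDA-enabled base image, then the application layer."""
    lines = [
        # Base OS + CUDA runtime layers come from a CUDA-enabled base image.
        f"FROM {base_image}",
        # Optional: read by the NVIDIA Container Toolkit when the container starts.
        "ENV NVIDIA_VISIBLE_DEVICES=all",
        "ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility",
        # Application layer: install dependencies first so Docker can cache them.
        "WORKDIR /app",
        f"COPY {requirements_file} .",
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        "COPY . .",
        # Exec-form CMD, written as a JSON array.
        "CMD " + json.dumps(list(entrypoint)),
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder base image; pick a real tag from the NGC catalog.
    print(render_dockerfile("nvcr.io/nvidia/pytorch:TAG"), end="")
```

Build the result with docker build as usual; GPU access is still requested at run time with --gpus.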

Step 6: Run NVIDIA Containers

With the necessary images or custom Dockerfiles prepared, you can run the NVIDIA Containers. Pass the --gpus flag to docker run (for example, docker run --gpus all <image>) to expose GPUs to the container. The NVIDIA Container Toolkit then makes the selected GPUs and the host driver available inside it.
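When launching containers from scripts, it helps to build the docker run arguments in one place. This sketch uses hypothetical helper names and only the documented --gpus values: all, or "device=..." with a list of GPU indices or UUIDs. The extra double quotes around a device list keep Docker from splitting it at the commas. They are passed through literally because no shell is involved.

```python
import subprocess


def gpu_flag(devices=None):
    """Value for `docker run --gpus`: every GPU, or specific indices/UUIDs."""
    if devices is None:
        return "all"
    # Docker parses this value as CSV, so a multi-GPU list must be quoted.
    return '"device=' + ",".join(str(d) for d in devices) + '"'


def run_args(image, command, devices=None):
    """Build the argument list for a throwaway GPU container."""
    return ["docker", "run", "--rm", "--gpus", gpu_flag(devices), image, *command]


def run(image, command, devices=None):
    return subprocess.run(run_args(image, command, devices), check=True)


if __name__ == "__main__":
    # Placeholder image; nvidia-smi (made available by the toolkit) lists the visible GPUs.
    run("nvcr.io/nvidia/pytorch:TAG", ["nvidia-smi"], devices=[0])
```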

Step 7: Monitor and Optimize

Once the NVIDIA Containers are up and running, monitoring their performance and resource utilization is crucial. Tools like NVIDIA System Management Interface (nvidia-smi) allow you to monitor GPU usage and performance metrics. Analyze the performance data to optimize GPU resource allocation and fine-tune your containerized applications for maximum efficiency.
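nvidia-smi can also emit machine-readable output, which is convenient for logging utilization over time. The sketch below assumes the query fields index, utilization.gpu, memory.used, and memory.total, and the helper names are hypothetical. Fields a GPU does not support (reported as values such as [N/A]) are returned as None.

```python
import subprocess
import time

QUERY = "index,utilization.gpu,memory.used,memory.total"


def _to_int(value):
    """Convert a field to int, or None for values such as '[N/A]'."""
    try:
        return int(value.strip())
    except ValueError:
        return None


def parse_gpu_stats(csv_text):
    """Parse `--format=csv,noheader,nounits` output (memory is in MiB)."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, used, total = (_to_int(f) for f in line.split(","))
        stats.append({"index": index, "util_pct": util,
                      "mem_used_mib": used, "mem_total_mib": total})
    return stats


def sample_gpu_stats():
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_stats(out)


if __name__ == "__main__":
    while True:  # stop with Ctrl+C
        for gpu in sample_gpu_stats():
            print(gpu)
        time.sleep(5)
```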

Step 8: Scale and Deploy

As your containerized applications grow and demand more computational power, you may need to scale your setup. The same image runs on any host with a compatible NVIDIA driver and the NVIDIA Container Toolkit, so scaling out to additional servers or cloud instances is largely a matter of running more copies of it.

Use container orchestration platforms like Kubernetes or Docker Swarm to efficiently deploy and manage container clusters.
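On Kubernetes, GPUs are requested per container through the nvidia.com/gpu resource. That resource is only available once the NVIDIA device plugin (or the GPU Operator) runs on the cluster. The sketch below builds a minimal Pod manifest as a Python dict and prints it as JSON, which kubectl accepts as well as YAML. gpu_pod and the image tag are placeholders.

```python
import json


def gpu_pod(name, image, gpus=1):
    """Minimal Pod manifest asking the scheduler for `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are set under limits; Kubernetes uses the same value as the request.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    # Example: python this_script.py | kubectl apply -f -
    print(json.dumps(gpu_pod("cuda-job", "nvcr.io/nvidia/pytorch:TAG"), indent=2))
```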

Setting up NVIDIA Containers is a straightforward process that brings immense value to high-performance computing and GPU-accelerated applications.

By following these steps, developers can harness the raw power of NVIDIA GPUs with optimized performance and scalability. Integrating NVIDIA Containers with Docker and the NVIDIA Container Toolkit paves the way for transformative advancements in AI, deep learning, and scientific research.

As the demand for GPU-accelerated applications continues to grow, mastering the setup and deployment of NVIDIA Containers becomes crucial for developers and researchers aiming to unlock their applications’ full potential.

Tips for Troubleshooting Common Issues

1. NVIDIA Container High CPU Usage

NVIDIA Containers have revolutionized the world of high-performance computing, enabling developers to harness the raw power of NVIDIA GPUs efficiently.

However, high CPU usage in NVIDIA Containers can hinder performance and disrupt the smooth execution of GPU-accelerated applications. This section walks through the steps to troubleshoot and resolve it.

Step 1: Check GPU Utilization

The first step in troubleshooting high CPU usage is verifying the containerized application’s GPU utilization. High CPU usage might be a symptom of an issue with the GPU or how the application utilizes GPU resources. Use NVIDIA System Management Interface (nvidia-smi) to monitor GPU utilization and ensure that the GPU is being utilized as expected.

Step 2: Review Application Code and Algorithms

Examine the code and algorithms used in the containerized application. In some cases, poorly optimized or inefficient algorithms can lead to high CPU usage. Consider optimizing the code and algorithms to utilize the GPU’s parallel processing capabilities better, thereby reducing the CPU workload.

Step 3: Update NVIDIA GPU Drivers

Outdated or incompatible GPU drivers can cause compatibility issues, leading to high CPU usage. Ensure you use the latest NVIDIA GPU drivers compatible with the containerized application and the host system.

Step 4: Check Container Resource Allocation

Inspect the resource allocation of the NVIDIA Container. If the container cannot see a GPU (for example, because it was started without the --gpus flag), many frameworks and training scripts fall back to the CPU, which shows up as high CPU usage. Check the GPU settings in your docker run command, Docker Compose file, or Kubernetes resource limits to ensure proper GPU utilization.
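If a container cannot see a GPU, GPU-aware code often falls back to the CPU. A quick first check is whether any GPU device nodes exist inside the container at all. The sketch below (hypothetical helper name) looks for /dev/nvidia0, /dev/nvidia1, and so on, which the NVIDIA Container Toolkit typically creates in GPU-enabled containers. Control nodes such as /dev/nvidiactl are deliberately ignored.

```python
import glob
import os


def visible_gpu_nodes(dev_dir="/dev"):
    """Return per-GPU device nodes (nvidia0, nvidia1, ...) found in dev_dir."""
    # The pattern requires a digit after 'nvidia', so nvidiactl and nvidia-uvm are skipped.
    paths = glob.glob(os.path.join(dev_dir, "nvidia[0-9]*"))
    return sorted(os.path.basename(p) for p in paths)


if __name__ == "__main__":
    nodes = visible_gpu_nodes()
    if nodes:
        print("GPU device nodes:", ", ".join(nodes))
    else:
        print("No NVIDIA GPU device nodes; was the container started with --gpus?")
```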

Step 5: Monitor System Metrics

Utilize system monitoring tools to keep track of various performance metrics, such as CPU usage, memory utilization, and GPU performance. This can provide insights into potential bottlenecks and help identify the root cause of high CPU usage in the NVIDIA Container.

Step 6: Optimize Containerized Application

Review the application within the NVIDIA Container for any inefficiencies that might be causing high CPU usage. Consider optimizing the application’s resource utilization and parallel processing capabilities to offload more tasks to the GPU, reducing the CPU workload.

Step 7: Analyze Background Processes

Background processes or services running within the containerized environment might consume additional CPU resources. Check for any unnecessary background processes and eliminate them to free up CPU capacity.
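Inside the container, ps can show which processes are using the most CPU. This sketch assumes the procps version of ps, which slim images may not include, and it uses hypothetical helper names. Note that ps reports %CPU averaged over each process's lifetime, not an instantaneous reading.

```python
import subprocess


def parse_ps(output, limit=5):
    """Parse `ps -eo pid,pcpu,comm --sort=-pcpu` output into (pid, cpu%, command)."""
    rows = []
    for line in output.strip().splitlines()[1:]:  # skip the header row
        pid, pcpu, comm = line.split(None, 2)
        rows.append((int(pid), float(pcpu), comm))
    return rows[:limit]


def top_cpu_processes(limit=5):
    out = subprocess.run(
        ["ps", "-eo", "pid,pcpu,comm", "--sort=-pcpu"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_ps(out, limit)


if __name__ == "__main__":
    for pid, cpu, command in top_cpu_processes():
        print(f"{pid:>7} {cpu:5.1f}% {command}")
```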

Step 8: Investigate Container Configuration

Examine the configuration of the NVIDIA Container itself. Certain container configurations, like incorrect runtime settings or incompatible images, can lead to high CPU usage. Verify the configuration against best practices and ensure the underlying hardware and software compatibility.

Step 9: Check for Resource Contentions

Resource contention between containers or other processes on the host system can impact CPU performance. Analyze the overall system resource utilization and investigate if other containers or processes consume excessive resources, leading to CPU contention.
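On the host, docker stats gives a per-container view of CPU usage, which makes contention between containers easy to spot. The sketch uses the --no-stream flag and the {{.Name}} and {{.CPUPerc}} template fields, and the helper names are hypothetical. Values above 100% are normal on multi-core hosts.

```python
import subprocess


def parse_docker_stats(output):
    """Parse 'name<TAB>12.34%' lines into (name, percent), highest CPU first."""
    rows = []
    for line in output.strip().splitlines():
        name, cpu = line.split("\t")
        rows.append((name, float(cpu.strip().rstrip("%"))))
    return sorted(rows, key=lambda row: row[1], reverse=True)


def container_cpu_usage():
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.Name}}\t{{.CPUPerc}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_docker_stats(out)


if __name__ == "__main__":
    for name, cpu in container_cpu_usage():
        print(f"{cpu:8.2f}%  {name}")
```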

Step 10: Seek Community Support and Forums

If you cannot pinpoint the cause of high CPU usage, consider seeking support from the NVIDIA community and relevant forums. Experienced developers and users might have encountered similar issues and can offer valuable insights and solutions.

Troubleshooting high CPU usage in NVIDIA Containers requires a systematic approach, from examining GPU utilization to optimizing the containerized application and its configuration.

By carefully investigating the underlying factors contributing to high CPU usage and applying the appropriate fixes, developers can ensure optimal GPU-accelerated performance and unleash the true potential of NVIDIA GPUs in their applications.

Developers can create a seamless and efficient GPU computing environment with NVIDIA Containers through proactive troubleshooting and continuous refinement.

| Possible cause of high CPU usage | Solution |
|---|---|
| Outdated driver or software | Update the NVIDIA driver, NVIDIA Container Toolkit, and container image to their latest compatible versions. |
| High GPU utilization | Decrease the GPU workload by optimizing the application or, for graphics and video workloads, reducing the resolution or frame rate. |
| Overheating GPU | Improve cooling (for example, better airflow, additional fans, or liquid cooling) to prevent thermal throttling. |
| Inefficient algorithms or code | Optimize the algorithms or code to reduce the workload on the GPU or CPU. |
| Background processes consuming CPU | Identify and terminate any unnecessary background processes that consume CPU resources. |
| Insufficient RAM or memory allocation | Increase the RAM or the container's memory limit to reduce swapping to disk. |
| Insufficient CPU processing power | Upgrade to a faster CPU to handle the workload more efficiently. |
| Multiple containers running on the same node | Spread the workload across multiple nodes or use resource limits to ensure each container has sufficient resources. |
| Network I/O bottleneck | Check for network I/O bottlenecks causing high CPU usage and optimize the network configuration. |

2. NVIDIA Container High GPU Usage

If you're experiencing high GPU usage when running NVIDIA containers, possible causes include an outdated NVIDIA driver, improper container configuration, unoptimized workloads, and missing limits on which GPUs a container may use.

Here are some troubleshooting steps you can try:

Check your NVIDIA driver version: Ensure you have the latest NVIDIA driver installed on your system. Running nvidia-smi in a terminal shows the installed driver version.

Check container resource limits: Docker's CPU and memory limits do not cap GPU usage. To control GPU consumption, restrict which GPUs each container can see, for example with --gpus '"device=0"' or the NVIDIA_VISIBLE_DEVICES environment variable. On GPUs that support it, you can also partition the GPU with Multi-Instance GPU (MIG).

Check container workload: Verify the workload running in the container, as some machine learning tasks are very GPU-intensive. Ensure that the workload is optimized and not doing unnecessary computations.

Check container configuration: Ensure the container is properly configured to use the GPU. This can include setting environment variables or mounting the correct devices in the container.

Monitor GPU usage: Use tools like nvidia-smi or nvtop to monitor the container's GPU usage and identify potential issues.

Update container runtime: If you're using Docker, ensure you have recent versions of Docker Engine and the NVIDIA Container Toolkit.

Identify the heaviest process: Use the NVIDIA System Management Interface (nvidia-smi) to see which process consumes the most GPU resources.
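To identify the heaviest GPU consumers programmatically, nvidia-smi's --query-compute-apps option lists each compute process with its GPU memory. The sketch assumes the fields pid, process_name, and used_memory, and it uses hypothetical helper names. Run it on the host: the PIDs come from the host's process namespace, and nvidia-smi inside a container often shows no processes.

```python
import subprocess


def parse_compute_apps(csv_text):
    """Parse `--query-compute-apps=pid,process_name,used_memory` CSV (MiB), largest first."""
    apps = []
    for line in csv_text.strip().splitlines():
        pid, rest = line.split(",", 1)
        name, used = (field.strip() for field in rest.rsplit(",", 1))
        memory = int(used) if used.isdigit() else None  # e.g. '[N/A]' -> None
        apps.append((int(pid), name, memory))
    return sorted(apps, key=lambda app: app[2] or 0, reverse=True)


def gpu_processes():
    out = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,process_name,used_memory",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_compute_apps(out)


if __name__ == "__main__":
    for pid, name, memory in gpu_processes():
        shown = "n/a" if memory is None else str(memory)
        print(f"{pid:>7}  {shown:>8} MiB  {name}")
```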

Here is a table outlining some potential causes and solutions for high GPU usage in NVIDIA containers:

| Cause | Solution |
|---|---|
| Overprovisioning of GPU resources | Allocate resources more efficiently; consider using Kubernetes to manage container resources. |
| Insufficient GPU memory | Reduce the batch size or image resolution, or use a GPU with more memory. |
| Inefficient use of parallelization | Optimize code to take better advantage of GPU parallelization. |
| High CPU usage | Optimize code to minimize CPU overhead; consider moving parallelizable CPU-bound work to the GPU. |
| External factors (e.g., network latency) | Investigate external factors and optimize as necessary. |
| Driver issues | Ensure the latest NVIDIA drivers are installed and properly configured. |

3. NVIDIA Container High Disk Usage

High disk usage in NVIDIA containers can be caused by several factors, including logs and temporary files generated by the containers, large image sizes, or running too many containers simultaneously.

Here are a few steps you can take to try to address the issue:

Check container logs: Run docker logs <container-name> for each container and look for errors or warnings that might indicate the cause of the high disk usage. Unusually large log output is itself a common culprit.

Remove unused containers and images: Use the docker container prune and docker image prune commands to remove stopped containers and dangling images. Add -a to docker image prune to remove all unused images. The docker system df command shows how much space images, containers, and volumes are using.

Limit container resources: The --cpu-shares and --memory options limit CPU and memory, not disk. To cap the size of a container's writable layer, use --storage-opt size=<limit>, which only some storage drivers and filesystems support.

Use smaller base images: If you're building custom images, consider using smaller base images (for example, runtime rather than development variants) to reduce the overall size of your containers.

Enable log rotation: Configure log rotation for your containers to limit the size of the logs generated by each container.

Use an up-to-date storage driver: If you're using Docker, use the overlay2 storage driver, the recommended default on current Linux distributions. The older aufs driver is deprecated.
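The log rotation step above can be scripted. For Docker's default json-file driver, rotation is set in the daemon configuration file, /etc/docker/daemon.json on Linux. That same file usually holds the nvidia runtime entry added when the NVIDIA Container Toolkit is configured, so it is safer to merge settings than to overwrite the file. This sketch does that. with_log_rotation is a hypothetical helper, the 10m/3 limits are arbitrary examples, and writing the file requires root. Restart Docker afterwards; the new defaults apply only to containers created after the restart.

```python
import json
import pathlib

DAEMON_JSON = pathlib.Path("/etc/docker/daemon.json")  # default path on Linux


def with_log_rotation(config, max_size="10m", max_file=3):
    """Return a copy of a daemon.json dict with json-file log rotation enabled."""
    merged = json.loads(json.dumps(config))  # deep copy; keeps keys like "runtimes"
    merged["log-driver"] = "json-file"
    # Docker expects log-opts values as strings, including max-file.
    merged.setdefault("log-opts", {}).update(
        {"max-size": max_size, "max-file": str(max_file)})
    return merged


if __name__ == "__main__":
    current = json.loads(DAEMON_JSON.read_text()) if DAEMON_JSON.exists() else {}
    DAEMON_JSON.write_text(json.dumps(with_log_rotation(current), indent=2) + "\n")
```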

Here's a summary table of high disk usage issues in NVIDIA containers:

| Problem | Cause | Solution |
|---|---|---|
| High disk usage in NVIDIA container | Container logs or temporary files taking up space | Remove unnecessary files from the container; use a larger storage volume for the container; configure log rotation to prevent logs from filling up disk space. |
| High disk usage on host machine due to NVIDIA container | Container writing files to the host machine | Check the container's volume mounts; modify the container configuration to avoid writing to the host machine; move the container's data to a separate storage device or network share to isolate disk usage. |
| Performance degradation due to high disk usage | Limited disk space causing slower read/write operations | Optimize the container to reduce disk usage; increase the disk space available to the container; improve disk performance through hardware upgrades or configuration changes. |
| Data loss due to disk failure | No redundancy or backup strategy in place | Implement a backup strategy for important data; use RAID or other redundancy methods to prevent data loss; monitor disk health and replace failing disks promptly. |

The Future of NVIDIA Containers

As technology advances rapidly, the future of NVIDIA Containers holds immense promise in shaping the landscape of high-performance computing, artificial intelligence, and deep learning.

These powerful encapsulated units have already revolutionized how developers harness the power of NVIDIA GPUs, but their journey has just begun.

Let’s explore the potential future developments and applications of NVIDIA Containers.

1. Advancements in GPU Technology

As NVIDIA continues to push the boundaries of GPU technology, future generations of GPUs are likely to be even more powerful and efficient. NVIDIA Containers will be able to leverage these advancements, enabling developers to explore new frontiers in AI research, complex simulations, and data analytics.

2. Broader Adoption Across Industries

The future of NVIDIA Containers is poised for broader adoption across industries. As businesses realize the benefits of GPU acceleration in their applications, they will increasingly turn to NVIDIA Containers to optimize performance and gain a competitive edge. Industries like healthcare, finance, autonomous vehicles, and more will harness the potential of NVIDIA Containers to drive innovation and efficiency.

3. Enhanced Container Orchestration

Container orchestration platforms like Kubernetes and Docker Swarm will continue to evolve, seamlessly integrating with NVIDIA Containers. This will simplify the deployment and management of GPU-accelerated workloads across distributed systems, enabling more efficient scaling and resource allocation.

4. Collaboration and Community-Driven Innovation

The NVIDIA community will play a pivotal role in shaping the future of NVIDIA Containers. Collaboration among developers, data scientists, and researchers will create an extensive repository of containerized applications, further accelerating the adoption of NVIDIA Containers in diverse domains.

5. Customization and Personalization

The future of NVIDIA Containers will likely see increased customization and personalization. Developers can fine-tune their containers to match their application requirements, optimizing resource utilization and performance.

6. Interoperability and Multi-GPU Support

As GPU clusters and multi-GPU systems become more common, the future of NVIDIA Containers will emphasize improved interoperability and support for multiple GPUs. This will allow developers to leverage the full power of GPU clusters and scale their applications efficiently.

7. Expanding Use Cases in AI and Deep Learning

AI and deep learning will continue to be at the forefront of innovation, and NVIDIA Containers will be integral to advancing these fields. From natural language processing to computer vision, NVIDIA Containers will enable researchers and data scientists to tackle complex problems and unlock new insights.

8. Seamless Integration with Cloud Services

Major cloud service providers already offer NVIDIA GPU instances that can run NVIDIA Containers, and this integration is likely to deepen as cloud computing grows. This lets businesses access NVIDIA GPU acceleration on demand without extensive infrastructure investments.

In conclusion, the future of NVIDIA Containers is bright and brimming with possibilities. With advancements in GPU technology, broader adoption across industries, enhanced container orchestration, and community-driven innovation, NVIDIA Containers will continue to be a driving force in high-performance computing.

As AI, deep learning, and data-intensive applications become increasingly prevalent, NVIDIA Containers will play a pivotal role in shaping the future of computing, enabling developers and researchers to explore new horizons and unlock the full potential of NVIDIA GPUs in their applications.

NVIDIA Share Process

In the world of cutting-edge technology, the NVIDIA Share Process has emerged as a game-changing solution for enhancing collaboration and efficiency among developers, researchers, and data scientists.

This innovative process, developed by NVIDIA, aims to optimize the sharing and utilization of computational resources, particularly NVIDIA GPUs, to pursue groundbreaking advancements in high-performance computing and artificial intelligence.

The NVIDIA Share Process is a revolutionary approach that allows multiple users to share the resources of an NVIDIA GPU seamlessly. Traditionally, GPU resources were confined to a single user, leading to inefficient utilization and prolonged wait times.

The NVIDIA Share Process instead partitions the GPU's resources, enabling multiple users to work on the same GPU simultaneously.

The NVIDIA Share Process utilizes a sophisticated resource scheduling and isolation mechanism. When multiple users access the same GPU, the process intelligently allocates the GPU’s processing power and memory resources to each user’s tasks. This dynamic allocation ensures that each user receives a fair share of the GPU’s capabilities, enabling smooth and efficient execution of their workloads.

The NVIDIA Share Process brings a plethora of benefits, including:

Enhanced Collaboration: With the NVIDIA Share Process, researchers and developers can seamlessly collaborate on shared GPU resources. This collaborative environment fosters innovation and accelerates the pace of discovery.

Resource Optimization: By intelligently managing GPU resources, the NVIDIA Share Process maximizes the utilization of available hardware, reducing idle time and ensuring optimal performance for all users.

Reduced Wait Times: In scenarios where GPU resources were previously scarce, the NVIDIA Share Process significantly reduces wait times, allowing users to execute their tasks without delays.

Multi-Tenant Environments: The NVIDIA Share Process is particularly valuable in multi-tenant environments, such as data centers or cloud computing platforms, where multiple users access the same GPU infrastructure.

Efficient GPU Utilization: The process ensures that the GPU is efficiently utilized, improving the overall throughput and return on investment for GPU-intensive tasks.

The NVIDIA Share Process finds widespread applications in various domains:

AI and Deep Learning: In AI research and deep learning training, the NVIDIA Share Process enables multiple data scientists to train their models concurrently, fostering collaboration and accelerating model development.

High-Performance Computing: For scientific simulations and complex computations, the NVIDIA Share Process optimizes the usage of GPU resources, empowering researchers to tackle grand challenges efficiently.

Cloud Computing: In cloud-based GPU offerings, the NVIDIA Share Process ensures fair allocation of GPU resources to different users, delivering a seamless and efficient user experience.

In conclusion, the NVIDIA Share Process is a groundbreaking advancement in high-performance computing. By enabling efficient sharing and utilization of NVIDIA GPU resources, this innovative approach fosters collaboration, reduces wait times, and maximizes the efficiency of GPU-intensive tasks.

As technology advances, the NVIDIA Share Process is poised to become indispensable in pursuing cutting-edge research, AI advancements, and scientific breakthroughs.

With its ability to revolutionize multi-tenant GPU environments and streamline resource allocation, the NVIDIA Share Process is a testament to NVIDIA’s commitment to pushing the boundaries of innovation in computing.

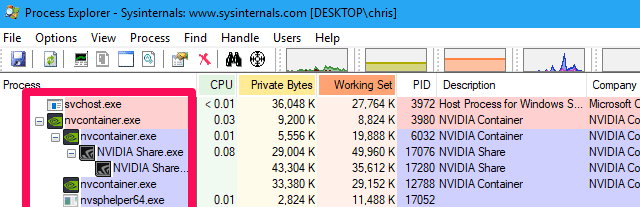

What is Nvidia container in task manager?

Users who open the Task Manager on their Windows systems might encounter processes labeled NVIDIA Container. But what exactly is NVIDIA Container, and what role does it play in the system?

NVIDIA Container (nvcontainer.exe) is a background process that is installed with NVIDIA GPU drivers.

It is part of the NVIDIA software stack and hosts NVIDIA's helper services and plug-ins, such as those behind the NVIDIA Control Panel and GeForce Experience with its in-game overlay. Several instances usually run at once, each tied to a different NVIDIA service.

NVIDIA Container processes in the Task Manager indicate that NVIDIA's driver software is installed and its services are running. Despite the shared name, they are not the GPU-accelerated software containers (such as Docker containers) discussed elsewhere in this article.

When GPU-accelerated applications run, they access the GPU through the NVIDIA driver itself, not through NVIDIA Container. The driver is what lets them offload computationally intensive tasks to the GPU, accelerating processing and enhancing overall performance.

NVIDIA Container also does not allocate or isolate GPU resources between applications. Sharing the GPU among running programs is handled by the driver and, on Windows, by the operating system's GPU scheduler.

In conclusion, NVIDIA Container is a background process associated with NVIDIA GPU drivers. When seen in the Task Manager, it signifies that NVIDIA's driver services are installed and running.

These processes power NVIDIA's supporting features, such as the Control Panel and the in-game overlay, rather than the GPU computation itself, so seeing them in the Task Manager is normal.

Why is Nvidia container using your microphone?

Discovering that the NVIDIA Container is utilizing your microphone might raise eyebrows and prompt concerns about privacy and security. However, it is essential to understand the rationale behind this seemingly unexpected behavior.

NVIDIA Container using your microphone does not indicate malicious intent or a privacy breach. In most cases, the cause is the in-game overlay that ships with GeForce Experience (including ShadowPlay), which runs inside NVIDIA Container and can capture microphone audio for features such as game recording, broadcasting, and Instant Replay.

When Instant Replay is enabled, the overlay keeps recording the last few minutes of gameplay in the background. If microphone capture is also turned on, Windows reports NVIDIA Container as using the microphone even when you are not actively saving a clip.

Microphone capture is optional. You can switch it off in the overlay's microphone or audio settings (the overlay opens with Alt + Z by default), turn off Instant Replay, or disable the in-game overlay in GeForce Experience. Windows privacy settings also let you control which desktop apps may access the microphone.

Audio captured this way is saved locally with your recordings unless you choose to share or broadcast them.

In conclusion, NVIDIA Container using your microphone is a deliberate design choice that supports recording and streaming features, not a sign of eavesdropping.

Because microphone capture can be switched off, users can keep the features they want while staying in control of their privacy.

Why is Nvidia container using so much GPU?

The NVIDIA Container utilizing a significant portion of the GPU’s processing power might raise concerns about performance and resource allocation. However, there are valid reasons for this behavior.

GPU Intensive Applications: NVIDIA Containers are designed to run GPU-accelerated applications, which demand substantial computational power. These applications, such as deep learning models and scientific simulations, rely on the GPU’s parallel processing capabilities for faster and more efficient computations.

Resource Allocation: When a GPU-accelerated application runs within an NVIDIA Container, it uses the GPU or GPUs assigned to that container. If the application performs computationally intensive tasks, it will naturally use a substantial share of their capacity.

Parallel Processing: NVIDIA GPUs excel at parallel processing, allowing them to handle multiple tasks simultaneously. When running GPU-accelerated applications, the GPU may be fully utilized to maximize performance and deliver quick results.

Optimization: In some cases, GPU usage by NVIDIA Containers is a sign of efficient resource optimization. By fully utilizing the GPU’s capabilities, applications can quickly complete tasks, improving performance.

Multi-Threaded Applications: GPU-accelerated applications are often multi-threaded, allowing them to execute multiple tasks concurrently. This can lead to higher GPU usage as the application effectively utilizes available threads on the GPU.

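In conclusion, high GPU usage by NVIDIA Containers is usually not a cause for concern while a GPU workload is running; rather, it indicates that GPU-accelerated applications are making full use of the hardware. If usage stays high when nothing GPU-intensive is running, use the Task Manager to find the responsible process.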

As these applications continue to push the boundaries of computing, utilizing the full potential of the GPU ensures faster computations and significant advancements in various domains, including artificial intelligence, data science, and scientific research.

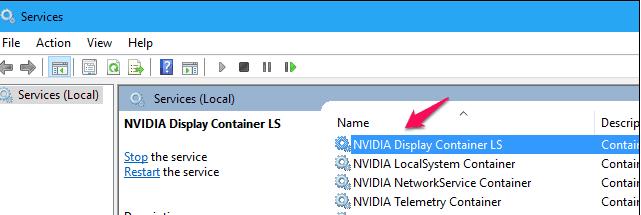

How to Stop NVIDIA Container

NVIDIA Container hosts background services that NVIDIA features such as the NVIDIA Control Panel rely on. However, there may be instances where you need to stop the NVIDIA Container process. Here’s a step-by-step guide on how to do it:

Open Task Manager: Press Ctrl + Shift + Esc to open the Task Manager. Alternatively, right-click the taskbar and select “Task Manager” from the menu.

Locate NVIDIA Container: In the Task Manager, navigate to the Processes tab. Look for processes labeled “NVIDIA Container” (the executable is nvcontainer.exe). Several instances are usually listed, each hosting a different NVIDIA service, such as “NVIDIA LocalSystem Container” or “NVIDIA NetworkService Container.”

End Task: Right-click on the NVIDIA Container process that you want to stop, and select “End Task”. Confirm the action if prompted.

Check for Background Processes: After ending the NVIDIA Container process, ensure no other background processes are associated with it. Sometimes, multiple NVIDIA Container processes may be running simultaneously.

Disable Automatic Startup: To prevent NVIDIA Containers from starting automatically with your system, you can set the NVIDIA NetworkService Container service, and the NVIDIA Telemetry Container service if your driver version includes it, to Manual or Disabled. Open the Services application (services.msc), double-click the service, and change its Startup type. The Services tab in the Task Manager can stop a service but cannot change its startup type.

Please note that stopping NVIDIA Container can disable NVIDIA features that depend on it, such as the NVIDIA Control Panel and the GeForce Experience overlay, until it restarts. It is advisable to stop the process only when necessary and to understand the consequences for any GPU-dependent tasks that are running.

If you encounter issues or want to optimize GPU resource allocation, consider adjusting the application settings or resource limits instead of stopping NVIDIA Container altogether.
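The stop-and-disable steps above can also be scripted. The sketch below is a minimal Python example that only prints the commands unless it runs on Windows with an `--apply` argument, from an administrator prompt. The service names `NvContainerNetworkService` and `NvTelemetryContainer` are assumptions: they match the services mentioned above on many driver versions, but names vary, and the telemetry service is missing from some installs, so check services.msc first.

```python
import subprocess
import sys

# Assumed internal service names; verify them in services.msc, they vary by driver version.
SERVICES = ["NvContainerNetworkService", "NvTelemetryContainer"]


def build_commands(services, startup="disabled"):
    """Return argv lists that change startup type, stop services, then end nvcontainer.exe."""
    if startup not in {"disabled", "demand", "auto"}:  # "demand" is Manual
        raise ValueError(f"unsupported startup type: {startup}")
    commands = []
    for name in services:
        # sc.exe expects "start=" and its value as two separate tokens.
        commands.append(["sc.exe", "config", name, "start=", startup])
        commands.append(["sc.exe", "stop", name])
    # /F forcibly ends every remaining nvcontainer.exe instance.
    commands.append(["taskkill", "/IM", "nvcontainer.exe", "/F"])
    return commands


def run(commands, apply=False):
    for argv in commands:
        print(" ".join(argv))
        if apply:
            # check=False: a missing or already-stopped service should not abort the rest.
            subprocess.run(argv, check=False)


if __name__ == "__main__":
    # Dry run everywhere except Windows with an explicit --apply flag.
    run(build_commands(SERVICES), apply=sys.platform == "win32" and "--apply" in sys.argv)
```

Passing `startup="demand"` instead of the default sets the services to Manual, which keeps them available if an NVIDIA feature needs to start them later.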

Understanding NVIDIA Container Runtime Hook

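The NVIDIA Container Runtime Hook is part of the NVIDIA Container Toolkit. It plugs into the OCI-compatible runtimes used by Docker and containerd (and, through them, Kubernetes) and acts as an interface between the container runtime and the NVIDIA GPU driver.

When a GPU-accelerated container is launched, the hook runs as a prestart hook: it mounts the selected GPU device files and the necessary user-space components of the NVIDIA driver stack into the container, so applications inside it can use the host's driver.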
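Part of what the hook does is decide which GPUs a container can see, based on the `NVIDIA_VISIBLE_DEVICES` environment variable read by the NVIDIA Container Toolkit. Here is a simplified Python model of that mapping. It is a sketch, not the hook's actual code: the function name `visible_gpu_nodes` is hypothetical, it handles only `all`, `none`, `void`, an unset value, and comma-separated integer indices, and it ignores GPU UUIDs, MIG devices, and the driver libraries the real hook also mounts.

```python
from typing import List, Optional


def visible_gpu_nodes(value: Optional[str], host_gpu_count: int) -> Optional[List[str]]:
    """Simplified model: NVIDIA_VISIBLE_DEVICES value -> GPU device files in the container.

    Returns None when GPU support is not activated, otherwise a list of /dev paths.
    """
    if value is None or value.strip() in ("", "void"):
        return None  # behaves like a plain runc container: nothing is injected
    value = value.strip()
    if value == "none":
        return []  # driver capabilities are enabled, but no GPU device is exposed
    if value == "all":
        indices = list(range(host_gpu_count))
    else:
        indices = [int(part) for part in value.split(",")]
        for i in indices:
            if not 0 <= i < host_gpu_count:
                raise ValueError(f"GPU index {i} is not present on this host")
    # Assumption: index i maps to /dev/nvidia{i}; the real hook resolves each
    # device's minor number, which does not always match the index.
    return [f"/dev/nvidia{i}" for i in indices]


if __name__ == "__main__":
    print(visible_gpu_nodes("0,1", host_gpu_count=4))
```

The distinction between `none` and `void` in this model mirrors the toolkit's documented behavior: `none` still enables driver capabilities without exposing a GPU, while `void` or an unset variable leaves the container untouched.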

Enhanced GPU Resource Management

The NVIDIA Container Runtime Hook gives containers controlled access to GPUs. It exposes only the GPUs requested for each container (for example through Docker's --gpus flag or the NVIDIA_VISIBLE_DEVICES environment variable), so different containers can be pinned to different GPUs and multiple GPU-accelerated containers can coexist on the same system. The hook does not itself divide one GPU's compute or memory between containers; sharing a single GPU relies on features such as Multi-Instance GPU (MIG) or time-slicing.

Seamless Integration with Existing Workflows

One of the key advantages of the NVIDIA Container Runtime Hook is its seamless integration with existing container workflows. Developers can easily deploy GPU-accelerated applications in containers without complex configurations. The runtime hook abstracts away the complexities of GPU utilization, simplifying the process for developers.
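As an illustration of how little configuration is needed, the Python sketch below builds a `docker run` command that requests GPUs with Docker's `--gpus` flag, which relies on the NVIDIA Container Toolkit being installed on the host. The helper name `gpu_run_argv` is hypothetical, and the CUDA image tag in the usage example is only an example; check the registry for current tags.

```python
import shlex
from typing import List, Sequence, Union


def gpu_run_argv(image: str, command: Sequence[str],
                 gpus: Union[str, int, Sequence[int]] = "all") -> List[str]:
    """Build a `docker run` argv that asks for GPUs via --gpus."""
    if isinstance(gpus, str):
        if gpus != "all":
            raise ValueError('use "all", a GPU count, or a list of device indices')
        gpu_arg = "all"
    elif isinstance(gpus, int):
        if gpus < 1:
            raise ValueError("GPU count must be at least 1")
        gpu_arg = str(gpus)  # any N available GPUs
    else:
        ids = ",".join(str(i) for i in gpus)
        # Docker parses --gpus as CSV, so a comma-separated device list
        # must be wrapped in literal double quotes.
        gpu_arg = f'"device={ids}"'
    return ["docker", "run", "--rm", "--gpus", gpu_arg, image, *command]


if __name__ == "__main__":
    argv = gpu_run_argv("nvidia/cuda:12.2.0-base-ubuntu22.04", ["nvidia-smi"], gpus=[0, 1])
    # shlex.join quotes each argument so the line can be pasted into a POSIX shell.
    print(shlex.join(argv))
```

Returning an argument list rather than a single string lets you pass it straight to `subprocess.run` without shell quoting issues; `shlex.join` is only used to display it.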

Dynamic GPU Allocation

Combined with an orchestrator, the NVIDIA Container Runtime Hook makes GPU allocation flexible. In Kubernetes, for example, each pod declares how many GPUs it needs, the scheduler places it on a node with enough free GPUs, NVIDIA's device plugin assigns specific GPUs on that node, and the runtime hook exposes them inside the container. This allows efficient scaling and resource allocation based on workload demands and enables the development of scalable GPU-accelerated applications.
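In Kubernetes, such GPU requests are expressed as an `nvidia.com/gpu` resource limit, the resource name advertised by NVIDIA's device plugin. The minimal Python sketch below generates a Pod manifest of that shape as JSON, which `kubectl apply -f` accepts. The helper name, pod name, and image are placeholders, and the cluster is assumed to have the device plugin or GPU Operator installed.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Return a Pod manifest that requests whole GPUs from the NVIDIA device plugin."""
    if gpus < 1:
        raise ValueError("GPU requests must be whole numbers >= 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                "command": ["nvidia-smi"],
                # GPUs are requested under limits; the scheduler only places the
                # pod on a node that advertises enough free nvidia.com/gpu.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("gpu-smoke-test", "nvidia/cuda:12.2.0-base-ubuntu22.04")
    # Save the output as pod.json, then run: kubectl apply -f pod.json
    print(json.dumps(manifest, indent=2))
```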

Optimizing Deep Learning and AI Workloads

The NVIDIA Container Runtime Hook is a game-changer for AI and deep learning workloads. It streamlines the deployment of AI models in containers, enabling data scientists to leverage the full power of NVIDIA GPUs for training and inference tasks.

By making GPUs available inside containers with little configuration, the hook lets teams spend less time on setup and more on model training and inference, accelerating AI research and application development.

In conclusion, the NVIDIA Container Runtime Hook is critical in unleashing GPU-accelerated containers’ full potential.

Its ability to efficiently manage GPU resources, seamless integration with container runtimes, and dynamic allocation capabilities make it a must-have for developers and researchers working on GPU-intensive workloads.

As the demand for GPU-accelerated applications continues to grow, the NVIDIA Container Runtime Hook will remain a driving force in advancing the fields of AI, deep learning, and scientific computing.

📗 FAQs

What is Nvidia container for?

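The name refers to two different things. On Windows, NVIDIA Container (nvcontainer.exe) is a background process installed with NVIDIA drivers that hosts helper services for features such as the NVIDIA Control Panel and GeForce Experience. In AI and data-center work, NVIDIA containers are GPU-optimized container images, run with the NVIDIA Container Toolkit, that package a GPU-accelerated application with everything it needs to run in an isolated environment.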

Is it OK to end Nvidia container?

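If you mean the NVIDIA Container process in the Windows Task Manager, ending it is generally harmless to games and other GPU applications, which use the driver directly, but NVIDIA features that rely on it, such as the NVIDIA Control Panel or the GeForce Experience overlay, may stop working until it restarts.

If you mean a GPU-accelerated software container, stopping it ends the applications running inside it. Depending on those applications, this could cause data loss or corruption, so make sure their work is saved or they have been properly closed first.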

What happens if you end Nvidia container?

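Ending the Windows NVIDIA Container process closes the NVIDIA services it hosts, and some of them may restart automatically because they run as Windows services. Stopping a GPU-accelerated software container ends the applications inside it, which could cause data loss or corruption if they were not properly closed beforehand.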

Why is Nvidia container using so much memory?

If many GPU-accelerated applications run in containers, Nvidia containers may use a significant amount of memory, because each application reserves its own GPU memory (for model weights, intermediate data, and framework caches) and also uses ordinary system RAM on the host.

Here’s a table of reasons why an Nvidia container may use a lot of memory:

| Reason | Description |
|---|---|
| Running large models | Nvidia containers are often used for running large machine-learning models, which can require a lot of memory. The more complex the model, the more memory it may require. |
| High batch sizes | Batch size is the number of examples processed at once during training. High batch sizes can help increase efficiency but require more memory. |
| Inefficient memory usage | Nvidia containers may not be optimized for memory usage, which can lead to inefficiencies and increased memory consumption. |
| GPU memory usage | The Nvidia container may also use a lot of GPU memory, which can indirectly impact the system memory. |
| Multiple containers | If multiple Nvidia containers are running on the same system, each container may be using a significant amount of memory, which can add up quickly. |
| Memory leaks | Memory leaks can occur when memory is allocated but not released, leading to a gradual increase in memory usage. This can be a problem with the Nvidia container or the software inside it. |
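The first two rows of the table can be made concrete with rough arithmetic. The sketch below is a back-of-the-envelope estimate, not a profiler, and it assumes FP32 training with the Adam optimizer: 4 bytes per parameter each for weights and gradients plus 8 bytes for Adam's two moment buffers (16 bytes per parameter in total), plus activation memory that grows linearly with batch size. The function name and the per-sample activation size are hypothetical inputs; you would measure the latter for your own model.

```python
def estimate_gpu_memory_gib(num_params: int, batch_size: int,
                            activation_mib_per_sample: float,
                            training: bool = True) -> float:
    """Back-of-the-envelope FP32 GPU memory estimate in GiB.

    Ignores framework overhead, the CUDA context, caching, and fragmentation.
    """
    weights = 4 * num_params  # 4 bytes per FP32 parameter
    # Training adds gradients (4 B/param) and Adam's two moment buffers (8 B/param).
    extra = 12 * num_params if training else 0
    # Activations grow linearly with batch size; the per-sample size is measured, not derived.
    activations = batch_size * activation_mib_per_sample * 2**20
    return (weights + extra + activations) / 2**30


if __name__ == "__main__":
    params = 1_000_000_000  # a hypothetical 1-billion-parameter model
    for batch in (8, 16, 32):
        gib = estimate_gpu_memory_gib(params, batch, activation_mib_per_sample=200)
        print(f"batch {batch:>2}: ~{gib:.1f} GiB")
```

For this hypothetical model, weights, gradients, and optimizer state alone need 16 × 10^9 bytes (about 14.9 GiB) before any activations, and doubling the batch size doubles the activation term.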

Do I need Nvidia container running?

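It depends on which NVIDIA Container you mean. The Windows NVIDIA Container process is not required for games or applications to use the GPU, but NVIDIA features such as the Control Panel and the GeForce Experience overlay depend on it. The NVIDIA container runtime for software containers is only needed if you run GPU-accelerated applications in containers.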

Why is Nvidia container using my disk?

Nvidia container may use disk space for storing container images and logs. This is necessary for the proper functioning of the technology.

What Nvidia services can I disable?

It is not recommended to disable any Nvidia services unless you are sure of the consequences. Disabling the wrong service could cause problems with your GPU and system.

Is Nvidia Docker necessary?

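Nvidia Docker (nvidia-docker) is not necessary unless you are running GPU-accelerated applications in containers. It has also been superseded by the NVIDIA Container Toolkit, which lets Docker expose GPUs through its --gpus flag.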

Why is my GPU usage so high when nothing is running?

If your GPU usage is high when no applications are running, it could be due to background processes or system settings. You can check the task manager to identify the processes using GPU resources and adjust settings as needed.

Is it bad to have your GPU maxed?

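It is not necessarily bad to have your GPU maxed out; GPUs are designed to run at full load during gaming, rendering, or model training. What matters more is temperature: if cooling is inadequate, sustained heavy load can push temperatures high enough to cause throttling, instability, or reduced lifespan, so keep an eye on temperatures during long sessions.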

How do I stop Nvidia container pop-ups?

Nvidia container pop-ups can be disabled by opening the Nvidia Control Panel, going to “Desktop” in the menu bar, and unchecking the “Show Notification Tray Icon” option.

What is the problem with 531.18 driver?

Like any software, the 531.18 driver can have bugs and compatibility issues with certain hardware or software configurations. NVIDIA's release notes for that version, and any hotfix drivers released after it, list the known issues and their fixes.

Does increasing RAM decrease GPU usage?

Increasing RAM does not decrease GPU usage. It can improve overall system performance by reducing how much data is swapped between RAM and disk, but that mainly affects responsiveness and stuttering rather than how much work the GPU does.

Why is my GPU not using full memory?

If your GPU is not using full memory, it could be due to limitations of the application or system settings. If possible, you can check the application settings and adjust the system settings to allocate more memory to the GPU.

Is it OK to run a PC without a GPU?

If your PC has an integrated graphics processor (iGPU), running it without a dedicated GPU is possible. However, this would limit the performance of graphics-intensive applications and games.

How do I stop Nvidia container from using my microphone?

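Nvidia container usually uses the microphone because the GeForce Experience in-game overlay has microphone capture enabled for Instant Replay or recording. Turn off the microphone in the overlay settings (Alt + Z opens the overlay by default), disable Instant Replay, or turn off the in-game overlay. If the issue persists, check the microphone section of the Windows privacy settings to see which app is using it.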

How do I reduce Nvidia GPU usage?

You can reduce Nvidia GPU usage by closing unnecessary applications, adjusting graphics settings to lower quality or resolution, or limiting the frame rate of games or other applications.
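Frame-rate limiting reduces GPU usage because the hardware idles for the rest of each frame's time budget (1/cap seconds) instead of rendering extra frames. The sketch below shows the idea with a sleep-based limiter in Python; the function names are hypothetical, `render_frame` stands in for a game's rendering work, and real limiters in drivers or game engines are far more precise than `time.sleep`.

```python
import time
from typing import Callable


def frame_budget_s(fps_cap: float) -> float:
    """Time allotted to each frame at the given cap, in seconds."""
    if fps_cap <= 0:
        raise ValueError("fps_cap must be positive")
    return 1.0 / fps_cap


def run_capped(render_frame: Callable[[], None], frames: int, fps_cap: float) -> float:
    """Render `frames` frames, sleeping off any unused budget; return elapsed seconds."""
    budget = frame_budget_s(fps_cap)
    start_all = time.perf_counter()
    for _ in range(frames):
        start = time.perf_counter()
        render_frame()
        spare = budget - (time.perf_counter() - start)
        if spare > 0:
            time.sleep(spare)  # idle instead of drawing frames the display cannot show
    return time.perf_counter() - start_all


if __name__ == "__main__":
    print(f"{frame_budget_s(60) * 1000:.2f} ms per frame at 60 FPS")
    print(f"{run_capped(lambda: None, frames=30, fps_cap=60):.2f} s for 30 frames")
```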

Why is my system taking so much disk?

If your system is using a lot of disk space, it could be due to a large number of files, temporary files, or system backups. You can use Disk Cleanup or other tools to identify and remove unnecessary files.

How do I get rid of Nvidia bloatware?

You can uninstall Nvidia bloatware by going to the Control Panel, selecting “Programs and Features,” and uninstalling any Nvidia software you do not need or use.

Is it OK to uninstall Nvidia GeForce?

If you mean GeForce Experience, it is safe to uninstall if you do not use its features; games and GPU-accelerated applications keep working as long as the NVIDIA display driver remains installed. Do not uninstall the driver itself unless you are replacing it.

Can you get banned for using Nvidia?

Using Nvidia technology does not violate any rules or regulations, so there is no risk of being banned for using it.

What is a Nvidia Docker?

Nvidia Docker is a tool that allows GPU-accelerated applications to be run in containers, isolated environments containing everything needed to run the application.

Why does everyone use Docker?

Docker is a popular tool for containerization because it simplifies the deployment and management of applications, improves scalability and portability, and reduces conflicts between dependencies.

Why do I need Docker?

You may need Docker if you are deploying and managing applications that have complex dependencies or need to run in multiple environments.

Is using 100% CPU bad?

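Using 100% of the CPU for short periods is not bad, and CPUs are designed to handle sustained full load as long as cooling keeps temperatures within limits. Prolonged full load with inadequate cooling, however, can lead to throttling, instability, or reduced lifespan. If the CPU stays at 100% when you are not running anything demanding, check the Task Manager for the responsible process.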

How hot is too hot for GPU?

The maximum safe operating temperature for a GPU varies depending on the model, but generally, temperatures above 85-90 degrees Celsius could cause stability issues or reduced lifespan.

What is using up all my GPU?

You can use the task manager or other monitoring tools to identify which processes or applications use your GPU resources.
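On systems with an NVIDIA driver, the `nvidia-smi` command-line tool can also list the processes using the GPU. The Python sketch below runs its `--query-compute-apps` query and sorts processes by GPU memory. Note that this query covers compute (for example CUDA) processes, so games and other graphics-only programs may not appear, and some platforms report memory as `[N/A]`, which the sketch keeps as `None`.

```python
import csv
import io
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-compute-apps=pid,process_name,used_memory",
         "--format=csv,noheader,nounits"]


def parse_compute_apps(text: str) -> list:
    """Parse nvidia-smi CSV rows into dicts sorted by GPU memory (MiB), largest first."""
    rows = []
    for fields in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(fields) != 3:
            continue  # skip blank or unexpected lines
        pid, name, mem = fields
        mem = mem.strip()
        rows.append({"pid": int(pid), "name": name,
                     "used_mib": int(mem) if mem.isdigit() else None})
    return sorted(rows, key=lambda r: r["used_mib"] or 0, reverse=True)


if __name__ == "__main__":
    if shutil.which("nvidia-smi"):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        for row in parse_compute_apps(out):
            print(row)
    else:
        print("nvidia-smi not found; is the NVIDIA driver installed?")
```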

Who is Nvidia’s biggest competitor?

Nvidia’s biggest competitors in the GPU market are AMD and Intel.

Is the government using Nvidia?

Nvidia technology is used in various industries, including government and defense, but it is not specifically designed for or targeted at any particular sector.

Does Nvidia Experience spy on you?

GeForce Experience does collect data on system hardware and software configurations, which it uses to optimize game settings and improve the user experience. For details on what is collected and whether any of it is shared, see NVIDIA's privacy policy.

Is 70 degrees too hot for a GPU?

A temperature of 70 degrees Celsius is within the safe operating range for most GPUs, but higher temperatures could cause stability issues or reduced lifespan.

What is the normal temperature for GPU while gaming?

The normal temperature for a GPU while gaming depends on the model and the load level, but generally, temperatures between 60-80 degrees Celsius are considered normal.

What is the ideal GPU usage?

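The ideal GPU usage depends on the application or game being run. In a demanding game or training job, usage near 100% means the GPU is fully used rather than waiting on the CPU or other parts of the system, while at idle it should be low. Watch temperatures rather than trying to keep usage below 100%.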

How do I purge all Nvidia packages?

You can uninstall all Nvidia packages by going to the Control Panel, selecting “Programs and Features,” and uninstalling all Nvidia software.

What is Nvidia Reflex?

Nvidia Reflex is a technology that reduces input lag in video games by optimizing the rendering pipeline, shortening the time between a mouse click and the resulting on-screen action.

Why did GeForce driver fail?

GeForce driver failures can occur for various reasons, including compatibility issues with the hardware or software, outdated drivers, or hardware failures.

Why do drivers get corrupted?

Drivers can become corrupted for various reasons, such as improper installation, malware infection, hardware issues, or software conflicts.

Is driver 511.23 good?

The quality of a driver depends on the hardware and software configurations and the intended use. Generally, it is recommended to install the latest driver version available for the specific hardware and software.

How much FPS does 16GB RAM give?

The amount of FPS depends on the specific hardware and software configurations and the game or application being run. RAM capacity is not the sole factor that determines FPS.

How to clear GPU cache?

GPU cache can be cleared by restarting the system, updating the graphics driver, or using specific software utilities. On Windows, the DirectX shader cache can also be deleted with the Disk Cleanup tool.

How much memory is best for GPU?

The amount of memory required for a GPU depends on the specific use case and the size of the data being processed. Generally, having at least 4GB of VRAM for gaming and 8GB or more for professional applications is recommended.

How to maximize GPU memory?

GPU memory can be maximized by optimizing application settings, reducing memory usage in other processes, and upgrading to a GPU with more memory.

Can you build a PC and add a graphics card later?

Yes, it is possible to build a PC and add a graphics card later as long as the motherboard has an available PCIe slot and the power supply can support the additional power requirements.

Are GPUs and graphics cards the same thing?

GPUs and graphics cards are not the same thing. A GPU is a processing unit responsible for graphics processing, while a graphics card is a hardware component that contains the GPU and additional components such as VRAM and cooling.

What is the difference between a video card and a graphics card?

There is no significant difference between video card and graphics card. Both terms refer to a hardware component responsible for processing and rendering visual data.

Should I turn off Nvidia container?

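If you mean the NVIDIA Container process in Windows, turning it off generally does not affect games or other GPU applications, but NVIDIA features such as the Control Panel and the GeForce Experience overlay may stop working until it restarts. If you mean GPU-accelerated software containers, only stop them when you are not using them: stopping a running container ends the applications inside it, which could cause data loss if their work is not saved.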

Why is Nvidia container using so much of my GPU?

Nvidia container uses GPU resources to provide hardware acceleration for applications running in containers. If many containers or resource-intensive applications are running, this can cause high GPU usage.

Is 100% GPU usage bad?

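Running at 100% GPU usage, whether briefly or for long sessions, is not bad in itself; GPUs are designed to work at full load. What can cause problems is heat: if cooling cannot keep up, high temperatures can lead to throttling, instability, or a reduced lifespan, so monitor temperatures rather than trying to keep usage below 100%.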

Why is Desktop Window Manager using so much GPU?

Desktop Window Manager (DWM, dwm.exe) renders visual effects and other graphical elements in Windows. If many windows are open or resource-intensive applications are running, this can cause high GPU usage.

How do I manage my Nvidia GPU performance?

You can manage Nvidia GPU performance by adjusting application settings, updating the graphics driver, and monitoring GPU usage and temperature.
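For the monitoring part, `nvidia-smi` can report per-GPU temperature and utilization. The Python sketch below parses `--query-gpu=index,temperature.gpu,utilization.gpu` output and flags GPUs at or above a warning temperature. The function name is hypothetical, and the 85 degrees Celsius default follows the rough 85-90 degree range mentioned earlier in this FAQ; treat it as an assumption and replace it with your card's specification.

```python
import csv
import io
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,temperature.gpu,utilization.gpu",
         "--format=csv,noheader,nounits"]


def check_gpus(text: str, warn_at_c: int = 85) -> list:
    """Return (index, temp_c, util_pct, too_hot) tuples from nvidia-smi CSV output."""
    report = []
    for fields in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(fields) != 3:
            continue  # skip blank or unexpected lines
        # Unsupported fields are reported as "[N/A]"; keep those as None.
        index, temp_c, util_pct = (int(f) if f.strip().isdigit() else None for f in fields)
        too_hot = temp_c is not None and temp_c >= warn_at_c
        report.append((index, temp_c, util_pct, too_hot))
    return report


if __name__ == "__main__":
    if shutil.which("nvidia-smi"):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        for index, temp_c, util_pct, too_hot in check_gpus(out):
            flag = "  <-- check cooling" if too_hot else ""
            print(f"GPU {index}: {temp_c} C, {util_pct}% busy{flag}")
    else:
        print("nvidia-smi not found; is the NVIDIA driver installed?")
```

Running the script periodically (for example from a scheduled task) gives a simple log of how hot and how busy each GPU gets under your usual workloads.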

What happens if I disable Nvidia?

Disabling Nvidia could cause GPU-accelerated applications to stop working properly or not work at all. If you need to disable Nvidia for any reason, it is recommended to make sure that all GPU-accelerated applications are closed first.

Is Nvidia GeForce Experience bloatware?

Nvidia GeForce Experience is software that provides additional features and optimizations for gaming and other GPU-accelerated applications. Some users may consider it bloatware if they do not use or need these features, but it is not harmful or malicious in any way.

Conclusion

In conclusion, NVIDIA Containers represent a significant stride in addressing the challenge of effectively deploying GPU-accelerated applications.

Through their seamless integration with hardware and software components, they enable developers and researchers to fully leverage the power of their GPU resources, thereby accelerating the development and deployment of innovative solutions in AI and deep learning.

Navigating the complex landscape of AI and deep learning requires tools that are not just robust and efficient but also versatile. NVIDIA Containers fit the bill, transforming how we approach and work in these fields.

As we continue to push the boundaries of what’s possible, technologies like NVIDIA Containers will light the way, enabling us to reach new heights of innovation.

Whether you’re a seasoned AI researcher, a deep learning engineer, or someone interested in the intersection of technology and innovation, understanding and utilizing NVIDIA Containers is a valuable addition to your skillset.

As we look towards a future increasingly driven by AI, tools like NVIDIA Containers will continue to play a pivotal role in shaping this exciting landscape.

Remember, success in AI and deep learning isn’t just about having powerful hardware but about fully leveraging it. NVIDIA Containers provide the platform to do exactly that.

Join us in embracing this exciting technology, and let’s shape the future of AI together.